Kinect Sensor

I. INTRODUCTION


BACKGROUND

A sensor (also called a detector) is a converter that measures a physical quantity and converts it into a signal that can be read by an observer or by an instrument, today mostly an electronic one. Sensors are used in everyday objects such as touch-sensitive elevator buttons (tactile sensors) and lamps that dim or brighten when their base is touched. There are also innumerable applications of sensors of which most people are never aware, including cars, machines, aerospace, medicine, manufacturing and robotics. A sensor's sensitivity indicates how much the sensor's output changes when the measured quantity changes. Technological progress allows more and more sensors to be manufactured on a microscopic scale as microsensors. In most cases, a microsensor achieves significantly higher speed and sensitivity than macroscopic approaches.

Many electronic devices and instruments use sensors to obtain data, and a motion detector is one such device. It contains a physical mechanism or electronic sensor that quantifies motion. It can be either integrated with or connected to other devices that alert the user to the presence of a moving object within the field of view. Motion detectors are a vital component of comprehensive security systems for both homes and businesses. An electronic motion detector contains a motion sensor that transforms the detection of motion into an electric signal, which can be achieved by measuring optical or acoustical changes in the field of view. Most motion detectors can detect motion at up to 15 to 25 meters (50 to 80 ft). A motion detector may be connected to a burglar alarm that alerts the homeowner or a security service when it detects motion, and it may also trigger a red-light camera or outdoor lighting.
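To make the definition of sensitivity concrete: for a linear sensor, sensitivity is the slope of output against input. That slope can be estimated from calibration points with a least-squares fit. The following is a minimal sketch, and the temperature-sensor calibration data in it is hypothetical.

```python
# Estimate a sensor's sensitivity as the least-squares slope of its output
# against the measured quantity. The calibration pairs are hypothetical.

def sensitivity(inputs, outputs):
    """Return d(output)/d(input) fitted over the calibration points."""
    n = len(inputs)
    if n != len(outputs) or n < 2:
        raise ValueError("need at least two matching calibration points")
    mean_x = sum(inputs) / n
    mean_y = sum(outputs) / n
    sxx = sum((x - mean_x) ** 2 for x in inputs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(inputs, outputs))
    if sxx == 0:
        raise ValueError("inputs must not all be equal")
    return sxy / sxx

# Hypothetical temperature sensor: output in mV for input in degrees C.
temps_c = [0.0, 10.0, 20.0, 30.0]
volts_mv = [500.0, 600.0, 700.0, 800.0]
print(sensitivity(temps_c, volts_mv))  # 10.0 (mV per degree C)
```

A larger slope means a more sensitive sensor: here each degree of temperature change moves the output by 10 mV.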

22

HKBKCE- EEE DEPT- 2012


The technology behind Kinect was invented in 2005 by Zeev Zalevsky, Alexander Shpunt, Aviad Maizels and Javier Garcia. Since Kinect is mainly used for motion detection, a basic overview of motion detection is needed. Motion detection is the process of confirming a change in the position of an object relative to its surroundings, or a change in the surroundings relative to an object. This detection can be achieved by both mechanical and electronic methods. In addition to discrete, on/off motion detection, it can also include magnitude detection, which measures and quantifies the strength or speed of the motion or of the object that created it.[1] When motion detection is accomplished by natural organisms, it is called motion perception.

Motion can be detected by:
- sound (acoustic sensors);
- opacity (optical and infrared sensors and video image processors);
- geomagnetism (magnetic sensors, magnetometers);
- reflection of transmitted energy (infrared laser radar, ultrasonic sensors and microwave radar sensors);
- electromagnetic induction (inductive-loop detectors);
- vibration (triboelectric, seismic and inertia-switch sensors).

Acoustic sensors are based on the electret effect, inductive coupling, capacitive and fiber coupling, the triboelectric effect, the piezoelectric effect and optic transmission. Radar intrusion sensors have the lowest rate of false alarms. The principal methods by which motion can be electronically identified are optical detection and acoustical detection. Infrared light or laser technology may be used for optical detection.

The Kinect sensor is a motion-sensing input device. Based around a webcam-style add-on peripheral, it enables users to control and interact with the host device without touching a game controller. Instead, it provides a natural user interface that uses gestures and spoken commands. Since Kinect is an electronic sensor, it uses such methods to detect motion. In addition to motion detection, the Kinect sensor performs other functions such as voice recognition, facial recognition and skeletal tracking.
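The difference between discrete (on/off) detection and magnitude detection can be illustrated with two consecutive depth frames, since Kinect produces one depth image per frame. This is a minimal sketch that assumes depth values in millimetres. The two thresholds are arbitrary illustrative choices, not Kinect parameters.

```python
# Discrete (on/off) and magnitude motion detection by differencing two
# depth frames. Frames are lists of rows of depth values in millimetres.
# Both thresholds are illustrative assumptions.

def detect_motion(prev, curr, pixel_threshold_mm=50, min_changed=3):
    """Return (moved, magnitude).

    moved     -- True if at least `min_changed` pixels changed by more than
                 `pixel_threshold_mm` (discrete on/off detection)
    magnitude -- mean absolute depth change over those changed pixels in mm
                 (a simple measure of how strong the motion is)
    """
    changes = []
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            diff = abs(b - a)
            if diff > pixel_threshold_mm:
                changes.append(diff)
    moved = len(changes) >= min_changed
    magnitude = sum(changes) / len(changes) if changes else 0.0
    return moved, magnitude

still = [[2000, 2000, 2000], [2000, 2000, 2000]]
hand_in = [[2000, 1200, 1200], [2000, 1200, 2000]]   # a hand 0.8 m closer
print(detect_motion(still, still))    # (False, 0.0)
print(detect_motion(still, hand_in))  # (True, 800.0)
```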


II. LITERATURE SURVEY

Robust interactive human body tracking has applications including gaming, human-computer interaction, security, telepresence and even health care. The task has recently been greatly simplified by the introduction of real-time depth cameras. However, even the best existing systems still exhibit limitations. In particular, until the launch of Kinect, none ran at interactive rates on consumer hardware while handling a full range of human body shapes and sizes undergoing general body motions. Some systems achieve high speeds by tracking from frame to frame, but they struggle to re-initialize quickly and so are not robust.

Kinect was first announced on June 1, 2009 at E3 2009 under the code name "Project Natal". The sensor unit was originally planned to contain a microprocessor that would perform operations such as the system's skeletal mapping. However, it was revealed in January 2010 that the sensor would no longer feature a dedicated processor. Instead, processing would be handled by one of the processor cores of the host device, for example the Xbox 360's Xenon CPU. Kinect builds on software technology developed internally by Rare, a subsidiary of Microsoft Game Studios. It also builds on range camera technology from the Israeli developer PrimeSense. PrimeSense developed a system that can interpret specific gestures. It uses an infrared projector, a camera and a special microchip to track the movement of objects and individuals in three dimensions, making completely hands-free control of electronic devices possible. This 3D scanner system, called Light Coding, employs a variant of image-based 3D reconstruction.

The Kinect sensor is a horizontal bar connected to a small base with a motorized pivot, and it is designed to be positioned lengthwise above or below the video display. The device features an "RGB camera, depth sensor and multi-array microphone running proprietary software", which provide full-body 3D motion capture, facial recognition and voice recognition capabilities. At launch, voice recognition was only made available in Japan, the United Kingdom, Canada and the United States. Mainland Europe received the feature later, in spring 2011. Currently voice recognition is supported in Australia, Canada, France, Germany, Ireland, Italy, Japan, Mexico, New Zealand, the United Kingdom and the United States. The Kinect sensor's microphone array enables the Xbox 360 to perform acoustic source localization and ambient noise suppression, allowing for things such as headset-free party chat over Xbox Live.

The depth sensor consists of an infrared laser projector combined with a monochrome CMOS sensor, which captures video data in 3D under any ambient light conditions. The sensing range of the depth sensor is adjustable. The Kinect software can automatically calibrate the sensor based on gameplay and the player's physical environment, accommodating the presence of furniture or other obstacles. Microsoft personnel described this software technology as the primary innovation of Kinect.[37][38][39] It enables advanced gesture recognition, facial recognition and voice recognition.[40] According to information supplied to retailers, Kinect can simultaneously track up to six people, including two active players for motion analysis with a feature extraction of 20 joints per player.[41] However, PrimeSense has stated that the number of people the device can "see" (but not process as players) is limited only by how many will fit in the field of view of the camera.[42]

This infrared image shows the laser grid Kinect uses to calculate depth

The depth map is visualized here using color gradients from white (near) to blue (far)

Reverse engineering has determined that the Kinect sensor outputs video at a frame rate of 30 Hz. The RGB video stream uses 8-bit VGA resolution (640 × 480 pixels) with a Bayer color filter. The monochrome depth-sensing video stream is also in VGA resolution (640 × 480 pixels), with 11-bit depth, which provides 2,048 levels of sensitivity. The Kinect sensor has a practical ranging limit of 1.2 to 3.5 m (3.9 to 11 ft) when used with the Xbox software. The area required to play Kinect is roughly 6 m², although the sensor can maintain tracking through an extended range of approximately 0.7 to 6 m (2.3 to 20 ft). The sensor has an angular field of view of 57° horizontally and 43° vertically, and the motorized pivot can tilt the sensor up to 27° up or down. The horizontal field of the Kinect sensor at the minimum viewing distance of about 0.8 m (2.6 ft) is therefore about 87 cm (34 in), and the vertical field is about 63 cm (25 in). This gives a resolution of just over 1.3 mm (0.051 in) per pixel. The microphone array features four microphone capsules,[44] and each channel processes 16-bit audio at a sampling rate of 16 kHz.

Because the Kinect sensor's motorized tilt mechanism requires more power than the host's USB ports can supply, the device uses a proprietary connector combining USB communication with additional power. Redesigned Xbox 360 S models include a special AUX port for this connector. Older models require a special power supply cable (included with the sensor) that splits the connection into separate USB and power connections, with power supplied from the mains by way of an AC adapter.
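The field-of-view figures above follow from simple trigonometry. The width covered at distance D by an angular field θ is 2·D·tan(θ/2). This sketch reproduces the quoted numbers from the 57° × 43° field of view and the 640 × 480 depth resolution. It assumes an ideal pinhole camera with no lens distortion.

```python
import math

# Width (or height) covered at a given distance by an angular field of
# view, for an ideal pinhole camera: extent = 2 * d * tan(fov / 2).

def field_extent(distance_m, fov_deg):
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)

d = 0.8                              # minimum viewing distance, metres
width = field_extent(d, 57.0)        # horizontal field of view, degrees
height = field_extent(d, 43.0)       # vertical field of view, degrees
print(f"horizontal field: {width * 100:.0f} cm, vertical field: {height * 100:.0f} cm")
print(f"{width * 1000 / 640:.2f} mm per pixel horizontally, "
      f"{height * 1000 / 480:.2f} mm per pixel vertically")
```

Both per-pixel values come out slightly above 1.3 mm, which matches the "just over 1.3 mm per pixel" quoted in the text.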

III. THE KINECT SENSOR

INTRODUCTION

Kinect is a motion-sensing input device by Microsoft. Based around a webcam-style add-on peripheral for the Xbox 360 console, it enables users to control and interact with the Xbox 360 without the need to touch a game controller, through a natural user interface using gestures and spoken commands.

KINECT SENSOR COMPONENTS

A look at the critical parts that make up the Kinect


FIG1.1 KINECT SENSOR COMPONENTS

The Kinect sensor includes the following components:
1. 3-D depth sensors: three-dimensional sensors track your body within the play space.
2. RGB camera: an RGB (red, green, blue) camera helps identify you and takes in-game pictures and videos.
3. Multiple microphones: an array of microphones along the bottom front edge of the Kinect sensor is used for speech recognition and chat.
4. Motorized tilt: a mechanical drive in the base of the Kinect sensor automatically tilts the sensor head up and down when needed.

1. 3-D DEPTH SENSOR

The PrimeSense 3D sensor sees and tracks user movements within an environment. Depth cameras offer several advantages over traditional intensity sensors:
- they work in low light levels;
- they give a calibrated scale estimate;
- they are color and texture invariant;
- they resolve silhouette ambiguities in pose.

They also greatly simplify the task of background subtraction. The main parts of the 3-D depth sensor are:
1. IR projector (infrared light source)
2. Standard CMOS image sensor
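Depth simplifies background subtraction because foreground and background can be separated by distance alone, without relying on color, texture or lighting. This minimal sketch compares each frame with a depth image of the empty scene. It assumes depth in millimetres and treats 0 as "no reading". The 100 mm margin is an arbitrary illustrative threshold.

```python
# Background subtraction on depth images: a pixel is foreground if it is
# clearly closer to the sensor than the empty-scene background.
# Units (mm), the "0 = no reading" convention and the margin are assumptions.

def subtract_background(background_mm, frame_mm, min_gap_mm=100):
    """Return rows of booleans marking foreground pixels."""
    return [
        [d > 0 and (bg - d) >= min_gap_mm for bg, d in zip(bg_row, row)]
        for bg_row, row in zip(background_mm, frame_mm)
    ]

# Hypothetical 2 x 3 depth frames in millimetres.
background = [[3000, 3000, 3000],
              [3000, 3000, 3000]]
frame = [[3000, 1800, 3000],     # a person at 1.8 m in the middle column
         [0,    1750, 2950]]     # 0 = no reading; 2950 is only sensor noise
print(subtract_background(background, frame))
```

Only the test on distance is used, so a person wearing clothes the same color as the wall is still separated from it. A purely color-based method would struggle with that case.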


1.3.1 IR LIGHT SOURCE

1. The depth sensor consists of an infrared laser projector combined with a monochrome CMOS sensor, which captures video data in 3D under any ambient light conditions.
2. The IR projector used in Kinect is marked OG12 / 0956 / D306 / JG05A. The PS1080 controls the IR light source so that it projects an IR Light Coding image (a speckle pattern) onto the scene. A standard CMOS image sensor receives the projected IR light and transfers the IR Light Coding image to the PS1080. The PS1080 processes the IR image and produces an accurate per-frame depth image of the scene.

FIG 1.2 3-D DEPTH SENSOR


1.3.2 STANDARD CMOS IMAGE SENSOR

Kinect uses an IR CMOS (complementary metal-oxide-semiconductor) sensor (Microsoft / X853750001 / VCA379C7130). It is an active-pixel sensor (APS): an image sensor consisting of an integrated circuit that contains an array of pixel sensors, each with a photodetector and an active amplifier. The speckle patterns projected onto the scene are made such that each speckle is distinguishable from the others. The CMOS sensor captures the pattern of spots as deformed by the geometry of the scene. The internal processor then computes the depth from the shift between the reference frame and the current image.
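Finding "the shift between the reference frame and the current image" is a matching problem. Generic block matching by sum of absolute differences (SAD) along one image row shows the idea. The window size and search range are illustrative assumptions. The PS1080's own matching algorithm is proprietary and is not reproduced here.

```python
import random

# Block matching by sum of absolute differences (SAD) along one row: find the
# horizontal shift that best aligns a small window of the reference speckle
# row with the current IR row. Parameters are illustrative assumptions.

def best_shift(reference, current, x, half_window=3, max_shift=10):
    """Return the shift s (pixels) minimising SAD between the reference
    window centred at x and the current window centred at x + s."""
    lo, hi = x - half_window, x + half_window + 1
    if lo < 0 or hi > len(reference):
        raise ValueError("window does not fit inside the reference row")
    best_s, best_cost = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        if lo + s < 0 or hi + s > len(current):
            continue  # shifted window would fall outside the image
        cost = sum(abs(reference[i] - current[i + s]) for i in range(lo, hi))
        if cost < best_cost:
            best_s, best_cost = s, cost
    return best_s

# A random row stands in for the speckle pattern. Because it is random,
# each small window is almost surely unique, so the match is unambiguous.
rng = random.Random(0)
ref_row = [rng.randint(0, 255) for _ in range(64)]
true_shift = 4
cur_row = [0] * true_shift + ref_row[:-true_shift]  # pattern moved 4 px right
print(best_shift(ref_row, cur_row, x=30))
```

The randomness of the pattern is what makes every window distinguishable. A regular grid would match equally well at several shifts.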

1.3.3 PS1080 A2 PROCESSOR

The PS1080 SoC houses highly parallel computational logic, which receives a Light Coding infrared pattern as input and produces a VGA (Video Graphics Array)-size depth image of the scene.

FIG1.3 BLOCK DIAGRAM OF PS1080-A2


The PS1080 SoC (system on a chip) is a multi-sense system that provides synchronized depth image, color image and audio streams. The PS1080 includes a USB 2.0 interface that is used to pass all data to the host. The PS1080 makes no assumptions about the host device's CPU. All depth acquisition algorithms run on the PS1080, with only a minimal USB communication layer running on the host. This design provides depth acquisition capabilities even to computationally limited host devices.
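The claim that a single USB 2.0 interface carries all the data can be sanity-checked using the stream formats quoted in Section II. This back-of-the-envelope sketch counts raw payload bits only. Real transfers add protocol overhead and may pack or compress data differently, so the figures are estimates.

```python
# Raw data rates of the Kinect streams described in Section II, compared
# with the USB 2.0 high-speed signalling rate of 480 Mbit/s.

FPS = 30
depth_bps = 640 * 480 * 11 * FPS      # 11-bit depth, VGA, 30 Hz
color_bps = 640 * 480 * 8 * FPS       # 8-bit Bayer raw color, VGA, 30 Hz
audio_bps = 4 * 16 * 16_000           # 4 microphones, 16-bit, 16 kHz
total_bps = depth_bps + color_bps + audio_bps

for name, bps in [("depth", depth_bps), ("color", color_bps),
                  ("audio", audio_bps), ("total", total_bps)]:
    print(f"{name:>6}: {bps / 1e6:7.1f} Mbit/s")
print(f"share of USB 2.0 (480 Mbit/s): {total_bps / 480e6:.0%}")
```

The streams total about 176 Mbit/s, roughly a third of the nominal USB 2.0 rate. This leaves headroom for protocol overhead.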

2. RGB CAMERA

Kinect uses an RGB CMOS camera (VNA38209015). This video camera delivers the three basic color components (red, green and blue). As part of the Kinect sensor, the RGB camera helps enable facial recognition and more.

3. MULTIPLE MICROPHONES

There are four downward-facing microphones in total: three on the right side and one on the left side.


Microsoft determined that downward facing was the best orientation for optimal sound gathering. To recognize voice commands properly, the Kinect must perform an audio calibration for the room it is in. The microphones are used mainly for voice recognition and chat.

4. MOTORIZED TILT

Kinect has a tilt motor, which tilts the device according to the movements of the user. The motor is not robust: it is located in the base and drives three fragile plastic gears.

IV. PRINCIPLES USED IN KINECT

STRUCTURED LIGHT IMAGING

Structured light is the process of projecting a known pattern of pixels (often grids or horizontal bars) onto a scene. The way these patterns deform when striking surfaces allows vision systems to calculate the depth and surface information of the objects in the scene. The Kinect camera is the first consumer-grade application to make use of structured light imaging. It uses a pattern of projected infrared points to generate a dense 3D image. Projecting a narrow band of light onto a three-dimensionally shaped surface produces a line of illumination. Seen from any perspective other than the projector's, this line appears distorted, and the distortion can be used for an exact geometric reconstruction of the surface shape (light section). A structured-light 3D scanner is a device for measuring the three-dimensional shape of an object using projected light patterns and a camera system. The Kinect sensor is one such electronic device.

Structured light imaging

SPECKLE PATTERN

When a surface is illuminated by a light wave, diffraction theory says that each point on the illuminated surface acts as a source of secondary spherical waves. The light at any point in the scattered light field is made up of waves that have been scattered from each point on the illuminated surface. Suppose the surface is rough enough to create path-length differences exceeding one wavelength, giving rise to phase changes greater than 2π. In that case the amplitude, and hence the intensity, of the resultant light varies randomly. Kinect makes use of this phenomenon.
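The random-intensity behaviour described above can be simulated with the standard random-phasor model of speckle. The field at each observation point is a sum of many unit-amplitude waves with random phases, and the intensity is the squared magnitude of that sum. Unit amplitudes and uniformly distributed phases are simplifying assumptions.

```python
import cmath
import math
import random
import statistics

# Random-phasor model of speckle: each observation point receives waves from
# many scatterers on a rough surface, each with an effectively random phase.
# Unit amplitudes and uniform phases are simplifying assumptions.

def speckle_intensities(n_points, n_scatterers, seed=1):
    rng = random.Random(seed)
    intensities = []
    for _ in range(n_points):
        field = sum(cmath.exp(1j * rng.uniform(0.0, 2.0 * math.pi))
                    for _ in range(n_scatterers))
        intensities.append(abs(field) ** 2)   # intensity = |amplitude|^2
    return intensities

intensity = speckle_intensities(n_points=5000, n_scatterers=30)
contrast = statistics.pstdev(intensity) / statistics.mean(intensity)
print(f"mean intensity {statistics.mean(intensity):.1f}, contrast {contrast:.2f}")
```

The speckle contrast (standard deviation divided by mean) comes out close to 1. The intensity fluctuates about as much as its average value, which is the strongly random pattern the text describes.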


The technology used in the Kinect sensor for acquiring the depth image is called Light Coding. Light Coding works by coding the scene with near-IR light, which is invisible to the naked eye. The IR projector in Kinect produces a speckle pattern, which is a random intensity pattern produced by the mutual interference of a set of wavefronts. The pattern is designed so that each speckle of light is distinguishable from the others. The Kinect CMOS infrared sensor reads the coded light back from the scene. The PS1080-A2 chip is connected to the CMOS image sensor and executes a sophisticated parallel computational algorithm to decipher the received light coding and produce a depth image of the scene. The solution is immune to ambient light.

TRIANGULATION

Triangulation is sometimes also referred to as reconstruction. In computer vision, triangulation refers to the process of determining a point in 3D space given its projections onto two or more images. In the following, it is assumed that triangulation is performed on corresponding image points from two views generated by pinhole cameras.

Fig 1.4 Triangulation

The figure illustrates the epipolar geometry of a pair of stereo cameras of the pinhole model. A point x in 3D space is projected onto each camera's image plane along a line (green) that passes through that camera's focal point, resulting in two corresponding image points. Suppose these two image points are given and the geometry of the two cameras is known. Then the two projection lines can be determined, and they must intersect at point x. Using basic linear algebra, that intersection point can be determined in a straightforward way. Kinect employs this technique to calculate the depth of objects and obtain a 3D reconstruction. The PS1080-A2 chip in Kinect performs triangulation for every speckle, between a virtual image and the observed pattern. The triangulation process for calculating depth is as follows:

1. Light patterns are projected onto the scene.

Fig1.5

2. The patterns are made such that each speckle is distinguishable from the others.

Fig1.6

3. A reference image at a known depth is captured using the camera.

Fig1.7

4. The shift x of each speckle relative to the reference image depends on the depth of the object. It is proportional to the difference between the inverse depths, 1/Z - 1/Z0, where Z is the depth of the object and Z0 is the depth of the reference image.

Fig1.8
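Step 4 can be written as the reference-plane model 1/Z = 1/Z0 + d/(f·b). Here d is the speckle shift in pixels, f is the IR camera's focal length in pixels and b is the projector-to-camera baseline. This is a commonly used model for projector/camera depth sensors of this kind, stated here as an assumption. The numeric values of f, b and Z0 below are illustrative, not calibrated Kinect parameters.

```python
# Reference-plane triangulation model (assumed):
#     1/Z = 1/Z0 + d / (f * b)
# Z : object depth (m)                 Z0 : depth of the reference image (m)
# d : speckle shift vs reference (px)  f  : IR camera focal length (px)
# b : projector-to-camera baseline (m)
# Sign convention (assumed): d > 0 means the object is nearer than Z0.

F_PX = 580.0        # illustrative focal length, pixels
BASELINE_M = 0.075  # illustrative baseline, metres
Z0_M = 1.5          # illustrative reference-plane depth, metres

def shift_from_depth(z_m, f=F_PX, b=BASELINE_M, z0=Z0_M):
    """Speckle shift (px) expected for an object at depth z_m."""
    return f * b * (1.0 / z_m - 1.0 / z0)

def depth_from_shift(d_px, f=F_PX, b=BASELINE_M, z0=Z0_M):
    """Invert the model: depth (m) from an observed shift d_px."""
    inv_z = 1.0 / z0 + d_px / (f * b)
    if inv_z <= 0:
        raise ValueError("shift corresponds to a point at or beyond infinity")
    return 1.0 / inv_z

for z in (0.8, 1.5, 3.5):
    d = shift_from_depth(z)
    print(f"Z = {z:.1f} m -> shift {d:+6.1f} px -> recovered Z = {depth_from_shift(d):.2f} m")
```

The shift is zero at the reference depth and changes sign on either side of it. Equal steps in depth also produce ever smaller shifts as the object moves away. This is why depth is recovered from the inverse relation, and it is consistent with the lower accuracy of the far region described in Section VI.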


V. FUNCTIONS OF KINECT

1. MOTION SENSING

The depth imaging employed in the Kinect sensor is used to calculate the depth of objects. PrimeSense's SoC is connected to the CMOS image sensor and executes a sophisticated parallel computational algorithm to decipher the received light coding and produce a depth image of the scene. The solution is immune to ambient light.

Every single motion of the body is an input, which creates a seemingly endless number of possible actions. For this reason, Kinect was not programmed with a fixed set of pre-established actions and reactions. Instead, the system was trained to react in the way humans learn: by classifying the gestures of people in the real world. The training used massive amounts of motion-capture data from real-life scenarios, processed with a machine-learning algorithm. Data from models representing people of different ages, body types, genders and clothing is mapped in the Kinect. By comparing the obtained image with this mapped data, Kinect can recognize the gestures and actions of the user.
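Reference [2] (Shotton et al.) describes the machine-learning approach in detail. A classifier labels each depth pixel with a body part using very simple depth-comparison features: f(x) = d(x + u/d(x)) - d(x + v/d(x)). Dividing the offsets u and v by the depth at the pixel makes the feature respond the same way whether the person stands near or far. This is a minimal sketch of that feature alone. The offsets and the background constant are illustrative, and the trained decision forests that consume the features are not shown.

```python
# Depth-comparison feature as described in reference [2]:
#     f(x) = d(x + u / d(x)) - d(x + v / d(x))
# Probes that land on background or off the image get a large constant.
# The value of that constant and the offsets below are illustrative.

BACKGROUND_MM = 10_000_000  # arbitrary large constant for background probes

def probe(depth, x, y):
    """Depth at (x, y), or BACKGROUND_MM if off-image or no reading (0)."""
    if 0 <= y < len(depth) and 0 <= x < len(depth[0]) and depth[y][x] > 0:
        return depth[y][x]
    return BACKGROUND_MM

def depth_feature(depth, px, py, u, v):
    """Evaluate the feature at body pixel (px, py).

    depth -- rows of depth values in mm (0 = background / no reading)
    u, v  -- (dx, dy) offsets in pixel-millimetre units; dividing them by
             the depth in mm turns them into pixel offsets
    """
    d = depth[py][px]
    if d <= 0:
        raise ValueError("features are evaluated on foreground pixels only")
    ux, uy = px + round(u[0] / d), py + round(u[1] / d)
    vx, vy = px + round(v[0] / d), py + round(v[1] / d)
    return probe(depth, ux, uy) - probe(depth, vx, vy)

# Hypothetical 3 x 7 depth image: a body 2 m away on the middle row.
body = [[0] * 7,
        [0, 2000, 2000, 2000, 2000, 2000, 0],
        [0] * 7]
# u probes 1 px to the right (on the body); v probes 4 px right (off-image).
print(depth_feature(body, 3, 1, u=(2000, 0), v=(8000, 0)))
```

A large negative value indicates that the pixel is near the edge of the body in the probed direction. A forest of many such tests, each with different learned offsets, is what ultimately assigns body-part labels.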


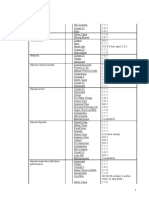

2. VOICE RECOGNITION

Users can talk directly to the Kinect sensor and give voice commands. Speech recognition is supported in the following locales and languages:

Locale            Language
Australia         English
Canada            English
Canada            French
France            French
Germany           German
Ireland           English
Italy             Italian
Japan             Japanese
Mexico            Spanish
New Zealand       English
Spain             Spanish
United Kingdom    English
United States     English

3. FACIAL RECOGNITION


Kinect supports facial recognition. When users want to sign in to their accounts using Kinect, they need not type a username and password: the Kinect sensor recognizes their face and signs them in. To do this, Kinect needs a clear view of the user's face and also needs to see the whole body. The Kinect sensor's facial recognition improves each time it is run. Kinect can identify four different players at a time using its facial recognition system.


VI. WORKING OF KINECT

Block diagram of Kinect

1. MOTION SENSING

Motion detection is the process of confirming a change in the position of an object relative to its surroundings, or a change in the surroundings relative to an object. This detection can be achieved by both mechanical and electronic methods. Kinect uses an electronic method to sense motion.

1. The infrared laser projector in the depth sensor projects a fixed pattern of spots in a grid fashion onto the scene. The size and shape of the speckles depend on their distance and orientation with respect to the sensor. The Kinect uses three different sizes of speckles for three different regions. The first region provides a highly accurate depth surface for near objects, at approximately 0.8 to 1.2 m. The second region provides a moderately accurate depth surface at approximately 1.2 to 2.0 m. The third region provides a less accurate depth surface for far objects, at approximately 2.0 to 3.5 m.

This technique used in Kinect is called Light Coding.
1. The light at any point in the scattered light field is made up of waves that have been scattered from each point on the illuminated surface. If the surface is rough enough to create path-length differences exceeding one wavelength, these give rise to phase changes, and hence the intensity of the resultant light varies randomly.
2. The CMOS IR camera observes the scene. The main function of the CMOS image sensor is to convert light into electrons. PrimeSense's SoC is connected to the CMOS image sensor and executes a sophisticated parallel computational algorithm to decipher the received light coding and produce a depth image of the scene.
3. The PS1080-A2 chip performs a process called triangulation (also called reconstruction). Reference images at known depth are compared with the received light coding. The shift in the position of each speckle with respect to the reference image depends on the depth of the object. It is proportional to the difference between the inverse depth of the object and the inverse depth of the reference image.


4. The PS1080-A2 chip then computes a 3D map using the calibrated speckle pattern. Kinect is a real-time device, so this is repeated continuously: after computing the 3D map for one instant, it captures the next image, computes the shift in the speckle pattern and recomputes the 3D map.
5. The RGB camera takes color images of the scene, working simultaneously with the 3D depth sensor. Using this color image, the PS1080-A2 chip performs a process called registration. The images resulting from registration are pixel-aligned, which means that every pixel in the color image is aligned to a pixel in the depth image. This process creates more accurate sensory information.
6. The technique Kinect uses to understand the human body and recognize gestures is called skeletal tracking. The software teaches the system to classify skeletal movements, emphasizing the joints and the distances between those joints. The data obtained through skeletal tracking is used for gesture recognition.


7. The software technology used in Kinect enables advanced gesture recognition, facial recognition and voice recognition. The human body is capable of an enormous range of poses, which are difficult to simulate. Kinect therefore relies on a large database of motion capture (mocap) of human actions, which spans the wide variety of poses people make in an entertainment scenario. The database consists of approximately 500k frames in a few hundred sequences of driving, dancing, kicking, running, navigating menus, etc. By comparing the data obtained from skeletal tracking with this database, Kinect is able to recognize various gestures.
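Registration (step 5 above) can be sketched with a pinhole-camera model in three stages. First, back-project a depth pixel into 3D in the IR camera's frame. Then move the point into the RGB camera's frame with a rotation R and translation T. Finally, project it onto the RGB image. All intrinsic values and the 2.5 cm offset below are hypothetical placeholders, not Kinect calibration data, and the PS1080's internal implementation is not documented here.

```python
from dataclasses import dataclass

# Depth-to-color registration with a pinhole model. All numbers are
# hypothetical placeholders, not Kinect calibration data.

@dataclass
class Intrinsics:
    fx: float  # focal length in pixels (x)
    fy: float  # focal length in pixels (y)
    cx: float  # principal point (x)
    cy: float  # principal point (y)

IR = Intrinsics(fx=580.0, fy=580.0, cx=320.0, cy=240.0)    # assumed
RGB = Intrinsics(fx=525.0, fy=525.0, cx=320.0, cy=240.0)   # assumed
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]     # assumed rotation
T = [-0.025, 0.0, 0.0]                                      # assumed offset, m

def register_pixel(u, v, depth_m):
    """Map depth pixel (u, v) with depth in metres to RGB pixel coordinates."""
    # 1. Back-project into 3D in the IR camera frame.
    p = [(u - IR.cx) * depth_m / IR.fx, (v - IR.cy) * depth_m / IR.fy, depth_m]
    # 2. Rigid transform into the RGB camera frame: q = R p + T.
    q = [sum(R[i][j] * p[j] for j in range(3)) + T[i] for i in range(3)]
    # 3. Project onto the RGB image plane.
    return RGB.fx * q[0] / q[2] + RGB.cx, RGB.fy * q[1] / q[2] + RGB.cy

print(register_pixel(320, 240, 2.0))  # same depth pixel, near object
print(register_pixel(320, 240, 4.0))  # same depth pixel, far object
```

The same depth pixel lands on different color pixels depending on its depth, because the two cameras are physically offset. This is why registration needs the depth value and cannot be a fixed image shift.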

2. VOICE RECOGNITION

1. The Kinect has four microphones, all facing downward, since downward facing was found to be the best orientation for optimal sound gathering.


2. The Kinect sensor can take voice commands from the user. It recognizes voices from far away with an open microphone, without the luxury of push-to-talk telling it when to listen for voice cues.
3. The trick Kinect uses is beamforming, which lets it focus on specific points in the room to listen. At the same time, the audio processor uses the echo profile of the room to perform multichannel echo cancellation, so that the sound coming out of the TV does not interfere with the user's voice commands.
4. Kinect voice commands start with the word "Xbox". Once the user says "Xbox", a microphone icon appears at the bottom of the screen, indicating that the microphone is ready to take commands.
5. Kinect supports standard commands. For example, a user watching a movie who wants to fast-forward says "Xbox, fast forward" to move forward through the movie.
6. Using the Microsoft speech recognition software development kit (SDK), users can program the Kinect sensor to respond to user-defined voice commands.
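Delay-and-sum is the simplest form of beamforming (step 3 above). Each microphone's signal is shifted so that sound arriving from the chosen direction lines up across channels, and then the channels are averaged. Sound from that direction adds coherently, while sound from elsewhere is spread out and weakened. This is a minimal sketch with integer-sample delays. The delays are hypothetical, and it is not a description of Kinect's own audio pipeline.

```python
# Delay-and-sum beamforming with integer-sample delays. The delays are
# hypothetical; a real array derives them from the microphone spacing, the
# speed of sound and the direction being listened to.

def delay_and_sum(channels, delays):
    """channels: equal-length sample lists, one per microphone.
    delays:   non-negative integer delay (samples) by which the target
              sound reaches each microphone later than the first one."""
    n = len(channels[0])
    out = []
    for t in range(n):
        acc = 0.0
        for ch, d in zip(channels, delays):
            idx = t + d                      # undo this channel's delay
            acc += ch[idx] if idx < n else 0.0
        out.append(acc / len(channels))
    return out

# A click from the target direction reaches mic k after k samples.
channels = [[1.0 if t == k else 0.0 for t in range(8)] for k in range(4)]
steered = delay_and_sum(channels, [0, 1, 2, 3])    # aimed at the source
unsteered = delay_and_sum(channels, [0, 0, 0, 0])  # not aimed
print(steered)
print(unsteered)
```

When the beam is steered at the source, the four copies of the click add up to a single full-strength peak. Without steering, the click is smeared into four quarter-strength samples.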

3. FACIAL RECOGNITION

Fig Structured light projected on the scene for facial recognition


1. Kinect uses three-dimensional face recognition. This technique uses 3D sensors to capture information about the shape of a face. This information is then used to identify distinctive features on the surface of a face, such as the contours of the eye sockets, nose and chin.
2. One advantage of 3D facial recognition is that, unlike other techniques, it is not affected by changes in lighting. It can also identify a face from a range of viewing angles, including a profile view.
3. Three-dimensional data points from a face vastly improve the precision of facial recognition. The sensors work by projecting structured light onto the face. A dozen or more of these image sensors can be placed on the same CMOS chip, each capturing a different part of the spectrum.
4. The sensor is then able to ascertain a user's identity by comparing the incoming data with the database.
5. The Kinect sensor's facial recognition improves each time it is used.
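Step 4 (comparing incoming data with the database) can be sketched as a nearest-neighbour search with an acceptance threshold. The three-number face "descriptor", the enrolled values and the 5 mm threshold are all hypothetical. The features Kinect actually extracts are not documented in this report.

```python
import math

# Nearest-neighbour identification: accept the closest enrolled face only
# if it is close enough. Descriptors and threshold are hypothetical.

def identify(measured, enrolled, max_distance=5.0):
    """Return the name of the closest enrolled descriptor, or None if even
    the closest one is farther than max_distance (unknown face)."""
    best_name, best_dist = None, float("inf")
    for name, ref in enrolled.items():
        dist = math.dist(measured, ref)      # Euclidean distance
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name if best_dist <= max_distance else None

# Hypothetical descriptors in mm: eye spacing, nose-to-chin, nose depth.
enrolled = {
    "player_1": [62.0, 48.0, 35.0],
    "player_2": [58.0, 52.0, 31.0],
}
print(identify([61.5, 48.5, 34.0], enrolled))  # player_1
print(identify([80.0, 70.0, 50.0], enrolled))  # None (unknown face)
```

The threshold is what lets the system reject a face that is not in the database instead of forcing a match to the nearest enrolled player.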

VII. APPLICATIONS OF KINECT

1. GAMING

1. Kinect comes as an add-on peripheral for the Xbox 360 video game console and Windows PCs. It enables users to control and interact with the Xbox 360 without touching a game controller, through a natural user interface using gestures and spoken commands. The project aims to broaden the Xbox 360's audience beyond its typical gamer base.

2. More than 80 video games make use of the Kinect sensor, and many of them require the device. Games that require Kinect have a purple sticker with a white silhouette of the Kinect sensor and "Requires Kinect Sensor" underneath in white text.[86] Some games have optional Kinect support, meaning that Kinect is not necessary to play the game or that optional Kinect minigames are included. These games come in a standard green Xbox 360 case with a purple bar underneath the header, a silhouette of the Kinect sensor and "Better with Kinect Sensor" next to it in white text.

2. HOME ENTERTAINMENT

1. Applications with Kinect support include ESPN, Zune Marketplace, Netflix, Hulu Plus and Last.fm.

2. Among the applications for Kinect is Video Kinect, which enables voice chat or video chat with other Xbox 360 users or users of Windows Live Messenger. The application can use Kinect's tracking functionality and the sensor's motorized pivot to keep users in frame even as they move around.

3. THIRD PARTY APPLICATIONS

Microsoft has released a non-commercial Kinect software development kit (SDK) for Windows. Using this SDK, numerous developers are researching applications of Kinect that go beyond the system's intended purpose of playing games. Here are a few examples.

1. One developer combined Kinect with the iRobot Create to map a room in 3D and have the robot respond to human gestures. The iRobot Create is a hobbyist robot manufactured by iRobot Inc.

2. Another application of Kinect was developed by a team working on a JavaScript extension for Google Chrome called depthJS, which allows users to control the browser with hand gestures.
3. Another team has shown an application that allows Kinect users to play a virtual piano by tapping their fingers on an empty desk.


4. The companies So touch and Evoluce have developed presentation software for Kinect that can be controlled by hand gestures. Among its features is a multi-touch zoom mode.

5. Researchers at the University of Minnesota have used Kinect to measure a range of disorder symptoms in children. This creates new methods of objective evaluation for detecting conditions such as autism, attention-deficit disorder and obsessive-compulsive disorder.

6. Several groups have reported using the Kinect for intraoperative review of medical imaging, which allows the surgeon to access the information without contamination. This technique is already in use at Sunnybrook Health Sciences Centre in Toronto, where doctors use it to guide imaging during cancer surgery.

7. Another application combines the Kinect sensor with a belt that vibrates whenever the sensor detects an obstruction. Blind people can wear this system to avoid obstacles.

8. A Topshop store in Moscow set up a Kinect kiosk that could overlay a collection of dresses onto the live video feed of customers. Through automatic tracking, the position and rotation of the virtual dress were updated even as customers turned around to see the back of the outfit.

VIII. CONCLUSION

The Kinect sensor provides a natural user interface through which a variety of devices can be controlled with just gestures and voice commands. Kinect can be a tremendous amount of fun for casual players, and the creative, controller-free concept is undeniably appealing. Kinect sold 8 million units in its first 60 days on the market, earning the Guinness World Record for the "fastest selling consumer electronics device". By January 2012, 18 million units of the Kinect sensor had been shipped.


BIBLIOGRAPHY

[1] Kinect. Wikipedia. http://en.wikipedia.org/wiki/Kinect [online]
[2] J. Shotton, A. Fitzgibbon, M. Cook, T. Sharp, M. Finocchio, R. Moore, A. Kipman and A. Blake. Real-Time Human Pose Recognition in Parts from Single Depth Images. Microsoft Research Cambridge & Xbox Incubation.
[3] OpenKinect. http://openkinect.org/wiki/Main_Page
[5] http://support.xbox.com/en-US/kinect/voice/control-your-xbox-360with-your-voice
[6] The Kinect Sensor. http://electronics.howstuffworks.com/gadgets/hightech-gadgets/facial-recognition1.htm
[7] http://campar.in.tum.de/twiki/pub/Chair/TeachingSs11Kinect/2011DSensors_LabCourse_Kinect.pdf
[8] http://en.wikipedia.org/wiki/Three-dimensional_face_recognition
[9] http://www.ifixit.com/Teardown/Microsoft-Kinect-Teardown/4066/2
[10] http://en.wikipedia.org/wiki/Structured_Light_3D_Scanner
[13] http://en.wikipedia.org/wiki/Structured_light
[14] L. Xia, C.-C. Chen and J. K. Aggarwal. Human Detection Using Depth Information by Kinect. Department of Electrical and Computer Engineering, The University of Texas at Austin.
[15] R. Sodhi and B. Jones. Kinect-Projector Calibration. CS 498 Computational Photography Final Project.

