Model selection and multiple hypothesis testing

Kelvin Gu

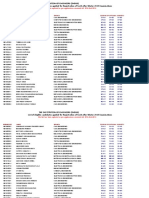

Contents

1 Model selection

1.1 Setup

1.2 Motivation

1.3 RSS-d.o.f. decomposition of the PE

1.4 RSS-d.o.f. decomposition in action

1.5 Bias-variance decomposition of the risk

1.6 Bias-variance decomposition in action

1.7 Two reasons why the methods above aren't ideal

1.8 Bayesian Info Criterion

1.9 Stein's unbiased risk estimate (SURE)

1.10 SURE in action

2 Multiple hypothesis testing

2.1 The setup

2.2 Why do we need it?

2.3 Controlling FDR using Benjamini Hochberg (BH)

2.4 Proof of BH

3 References

1 Model selection

1.1 Setup

Suppose we know $X = x$. We want to predict the value of $Y$.

Define the prediction error to be $PE = (Y - f(X))^2$

We want to choose some function $f$ that minimizes the objective $E[PE \mid X = x]$

the optimal solution is $\mu(x) = E[Y \mid X = x]$

As a proxy for minimizing $E[(Y - f(X))^2 \mid X = x]$, we'll minimize the risk: $R = E[(\mu(X) - f(X))^2]$

note that

$$E\{E[PE \mid X = x]\} = E[PE] = E[(Y - \mu(X) + \mu(X) - f(X))^2] = E[(\mu(X) - f(X))^2] + E[(Y - \mu(X))^2] = R + Var(Y)$$

the cross term vanishes because $E[Y - \mu(X) \mid X] = 0$; here $Var(Y)$ stands for the noise variance of $Y$ around $\mu(X)$

so, the risk $R$ is a reasonable proxy to optimize

$Var(Y)$ is unavoidable

For notational convenience, we'll call $\hat{\mu} = f(X)$ and $\mu = \mu(X)$
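The decomposition $E[PE] = R + Var(Y)$ can be checked numerically. The following is a minimal Monte Carlo sketch; the regression function `mu`, the predictor `f`, the noise level `sigma` and the distribution of $X$ are all assumptions made up for illustration, not part of the notes.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 200_000
sigma = 0.5                      # assumed noise standard deviation

def mu(x):                       # assumed true regression function E[Y | X = x]
    return np.sin(x)

def f(x):                        # assumed (imperfect) predictor
    return 0.5 * x

x = rng.uniform(-2.0, 2.0, size=n)
y = mu(x) + sigma * rng.normal(size=n)

expected_pe = np.mean((y - f(x)) ** 2)      # Monte Carlo estimate of E[PE]
risk = np.mean((mu(x) - f(x)) ** 2)         # Monte Carlo estimate of R
noise_var = sigma ** 2                      # the unavoidable term

print(expected_pe, risk + noise_var)        # the two numbers should nearly agree
```

Changing `f` moves `risk` and `expected_pe` together, while `noise_var` stays fixed, which is why minimizing $R$ is a sensible proxy.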


1.2 Motivation

Why can't we just use cross-validation for all tasks?

The problem:

Suppose we're doing ordinary least squares with $p = 30$ predictors (inputs)

we want to select a subset of the $p$ predictors with smallest EPE (expected prediction error)

for each subset of predictors, we fit the model and then test on some held-out test set.

there are $\binom{p}{2} = 435$ models of size 2, there are $\binom{p}{15} = 155117520$ models of size 15.

even if most of the size 15 models are terrible, after 155117520 opportunities, you'll probably find one that fits the test data better than any of the size 2 models.

This is second-order overfitting.

let $M_{15}$ be the set of all size 15 models

$$\underbrace{E\left[\min_{m \in M_{15}} PE(m)\right]}_{\text{cross validation thinks you get this}} \le \underbrace{\min_{m \in M_{15}} E[PE(m)]}_{\text{you actually get this}}$$

even if you have the computation power to try all models, it's still a bad idea (without some modification)
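Second-order overfitting can be reproduced in a toy setting. In the sketch below (an illustration under assumptions, not the notes' experiment) $Y$ is independent of $X$, so every candidate model is useless; the candidate coefficient vectors `betas`, the sample sizes and the baseline of always predicting zero are all hypothetical choices.

```python
import math
import numpy as np

print(math.comb(30, 2), math.comb(30, 15))   # 435 155117520

rng = np.random.default_rng(0)
n_models, n_test, n_fresh, d = 5000, 50, 20_000, 15

# Pure-noise setting (assumed): Y is independent of X, so no model can beat
# the trivial prediction 0 in expectation.
betas = 0.1 * rng.normal(size=(n_models, d))        # hypothetical candidate models
x_test, y_test = rng.normal(size=(n_test, d)), rng.normal(size=n_test)
x_fresh, y_fresh = rng.normal(size=(n_fresh, d)), rng.normal(size=n_fresh)

# Held-out error of every candidate, then pick the apparent winner.
heldout_mse = np.mean((y_test[:, None] - x_test @ betas.T) ** 2, axis=0)
best = int(np.argmin(heldout_mse))

best_heldout = heldout_mse[best]
best_fresh = np.mean((y_fresh - x_fresh @ betas[best]) ** 2)
zero_heldout = np.mean(y_test ** 2)                 # error of always predicting 0
zero_fresh = np.mean(y_fresh ** 2)

print(best_heldout, zero_heldout)   # the winner looks better than predicting 0 ...
print(best_fresh, zero_fresh)       # ... but is worse on fresh data
```

The minimum over thousands of noisy held-out estimates is an optimistic estimate of the winner's true error, which is the gap between the two sides of the inequality above.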

How will we address this?

find better ways to estimate PE, and add an additional penalty to account for the overfitting problem presented above

it turns out that we need a penalty which depends not only on model size $p$, but also data size $n$

Other ways to address this:

just avoid searching over high-dimensional model space in the first place (e.g. ridge regression and LASSO both offer just a single parameter to vary)

1.3 RSS-d.o.f. decomposition of the PE

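We just saw that expected prediction error could be decomposed as: $E[PE] = R + Var(Y)$. Here is another decomposition.

Let $(X, Y)$ be the training data, and let $(X, Y^*)$ be the test data.

Note that $X$ is the same in both cases but $Y$ is not!

this proof doesn't work if the $X$ is different in training and test

To make matters simple, just assume $X, Y \in \mathbb{R}$. It easily generalizes to the vector case.

Our prediction for $Y^*$ is $\hat{\mu}$, which is a function of $(X, Y)$ because we trained on $(X, Y)$. To emphasize this, we'll write $\hat{\mu} = \hat{\mu}_{X,Y}$.

The prediction error (PE) is $(\hat{\mu}_{X,Y} - Y^*)^2$

$$\underbrace{E\left[(\hat{\mu}_{X,Y} - Y^*)^2\right]}_{E[PE]} = \underbrace{E\left[(\hat{\mu}_{X,Y} - Y)^2\right]}_{E[RSS]} + \underbrace{2\,Cov(\hat{\mu}_{X,Y}, Y)}_{\text{d.o.f.}}$$

Proof:

$$\underbrace{E\left[(\hat{\mu}_{X,Y} - Y)^2\right]}_{E[RSS]} = E\left[(\hat{\mu}_{X,Y} - \mu + \mu - Y)^2\right] = \underbrace{E\left[(\hat{\mu}_{X,Y} - \mu)^2\right] + E\left[(\mu - Y)^2\right]}_{E[PE]} - \underbrace{2E\left[(\hat{\mu}_{X,Y} - \mu)(Y - \mu)\right]}_{\text{d.o.f.}}$$

The last term equals $2\,Cov(\hat{\mu}_{X,Y}, Y)$ because $E[Y - \mu] = 0$.

The first two terms sum to $E[PE]$ because:

$$E[PE] = E\left[(\hat{\mu}_{X,Y} - Y^*)^2\right] = E\left[(\hat{\mu}_{X,Y} - \mu + \mu - Y^*)^2\right] = E\left[(\hat{\mu}_{X,Y} - \mu)^2 + 2(\hat{\mu}_{X,Y} - \mu)(\mu - Y^*) + (\mu - Y^*)^2\right] = E\left[(\hat{\mu}_{X,Y} - \mu)^2\right] + E\left[(\mu - Y^*)^2\right]$$

and $E[(\mu - Y^*)^2] = E[(\mu - Y)^2]$ because $Y$ and $Y^*$ have the same distribution given $X$.

A key thing to note is that $E[(\hat{\mu}_{X,Y} - \mu)(\mu - Y^*)] = E[\hat{\mu}_{X,Y} - \mu]\,E[\mu - Y^*] = 0$

expectation factorizes because $Y^*$ and $(X, Y)$ are independent.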
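As a sanity check, the identity $E[PE] = E[RSS] + 2\,Cov(\hat{\mu}, Y)$ can be simulated in the scalar case. This sketch assumes a fixed $X$, Gaussian noise, and a made-up fitted value $\hat{\mu} = cY$ with shrinkage factor `c`; none of these choices come from the notes.

```python
import numpy as np

rng = np.random.default_rng(0)
trials = 400_000
mu, sigma, c = 1.0, 1.0, 0.7     # assumed true mean, noise sd, shrinkage factor

y = mu + sigma * rng.normal(size=trials)        # training response (X held fixed)
y_star = mu + sigma * rng.normal(size=trials)   # independent test response
mu_hat = c * y                                  # hypothetical fit, a function of Y only

expected_pe = np.mean((mu_hat - y_star) ** 2)   # estimate of E[PE]
expected_rss = np.mean((mu_hat - y) ** 2)       # estimate of E[RSS]
dof_term = 2 * np.cov(mu_hat, y)[0, 1]          # estimate of 2 Cov(mu_hat, Y) = 2 c sigma^2

print(expected_pe, expected_rss + dof_term)     # the two numbers should nearly agree
```

The training error `expected_rss` is much smaller than `expected_pe`; the covariance term is exactly the amount of optimism that has to be added back.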

1.4 RSS-d.o.f. decomposition in action

Suppose we're fitting a linear model $\hat{\mu} = HY$. Then we can compute $Tr(Cov(\hat{\mu}, Y))$

$$Tr(Cov(HY, Y)) = Tr(H\,Cov(Y, Y)) = Tr(H\Sigma)$$

where $\Sigma = Cov(Y, Y)$

if $H = X(X^TX)^{-1}X^T$ and $\Sigma = \sigma^2 I$, we can make this even more explicit

$$Tr(H\Sigma) = \sigma^2\,rank(X)$$

$\|Y - \hat{\mu}\|^2 + 2\sigma^2\,rank(X)$ is called the $C_p$ statistic

We will see that model selection using $C_p$ has the same problems as cross validation.
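A small numerical sketch of the two facts above: the trace of the hat matrix equals the rank of $X$, and $C_p$ is the residual sum of squares plus $2\sigma^2\,rank(X)$. The helper names `hat_matrix` and `cp_statistic`, the random design and the coefficient vector are hypothetical, and $\sigma^2$ is assumed known.

```python
import numpy as np

def hat_matrix(X):
    """H = X (X^T X)^{-1} X^T, assuming X has full column rank."""
    return X @ np.linalg.solve(X.T @ X, X.T)

def cp_statistic(X, y, sigma2):
    """C_p = ||y - H y||^2 + 2 sigma^2 rank(X), with sigma^2 assumed known."""
    residual = y - hat_matrix(X) @ y
    return float(residual @ residual + 2.0 * sigma2 * np.linalg.matrix_rank(X))

rng = np.random.default_rng(0)
X = rng.normal(size=(40, 5))                 # hypothetical design: 40 rows, 5 predictors
y = X @ np.array([1.0, -2.0, 0.0, 0.0, 0.5]) + rng.normal(size=40)

print(np.trace(hat_matrix(X)), np.linalg.matrix_rank(X))   # trace of H matches the rank
print(cp_statistic(X, y, sigma2=1.0))
```

To compare submodels, one would call `cp_statistic` on the corresponding column subsets of `X` and pick the smallest value.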

1.5 Bias-variance decomposition of the risk

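Returning to $E[PE] = R + Var(Y)$

We can decompose the risk term $R$ further:

$$R = E\left[(\hat{\mu} - \mu)^2\right] = E\left[(\hat{\mu} - E\hat{\mu} + E\hat{\mu} - \mu)^2\right] = E\left[(\hat{\mu} - E\hat{\mu})^2 + 2(\hat{\mu} - E\hat{\mu})(E\hat{\mu} - \mu) + (E\hat{\mu} - \mu)^2\right] = E\left[(\hat{\mu} - E\hat{\mu})^2\right] + (E\hat{\mu} - \mu)^2 = Var(\hat{\mu}) + Bias(\hat{\mu})^2$$

same trick: expand and kill the cross term: $E[(\hat{\mu} - E\hat{\mu})(E\hat{\mu} - \mu)] = 0 \cdot (E\hat{\mu} - \mu)$

Intuition: as you increase model size, bias tends to go down but variance goes up (overfitting)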
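The decomposition $R = Var(\hat{\mu}) + Bias(\hat{\mu})^2$ holds exactly for sample averages as well, which makes it easy to check. The sketch below uses a made-up shrinkage estimator $\hat{\mu} = cY$; the values of `mu`, `sigma` and `c` are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
mu, sigma, c = 2.0, 1.0, 0.8                 # assumed truth and shrinkage factor
mu_hat = c * (mu + sigma * rng.normal(size=100_000))   # draws of a hypothetical estimator

risk = np.mean((mu_hat - mu) ** 2)           # E[(mu_hat - mu)^2]
variance = np.var(mu_hat)                    # E[(mu_hat - E mu_hat)^2]
bias_sq = (np.mean(mu_hat) - mu) ** 2        # (E mu_hat - mu)^2

print(risk, variance + bias_sq)              # equal up to floating-point rounding
print(variance, bias_sq)                     # close to c^2 sigma^2 and (c - 1)^2 mu^2
```

Moving `c` toward 1 removes the bias but raises the variance, which is the tradeoff described above in miniature.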


1.6 Bias-variance decomposition in action

Denoising problem:

we observe $y \sim N(\mu, \sigma^2 I)$

we think $\mu$ has sparsity, so we try to recover it by solving: $\min_{\hat{y}} \|y - \hat{y}\|^2 + 2\sigma^2 \|\hat{y}\|_0$

Note that the penalty $2\sigma^2\|\hat{y}\|_0$ is just the $C_p$ penalty, $2\sigma^2\,rank(X)$, when $X = I$

bias-variance tradeoff

consider each $\hat{y}_i$ separately

either you set $\hat{y}_i = y_i$ and pay $2\sigma^2$ (variance)

or you set $\hat{y}_i = 0$ and pay $y_i^2$ (bias)

the solution is hard-thresholding

$$\hat{y}_i = \begin{cases} 0 & |y_i| \le \sqrt{2}\,\sigma \\ y_i & |y_i| > \sqrt{2}\,\sigma \end{cases}$$

We can solve this problem even though it has an L0 penalty. You would think this procedure should be good, right? Nope. It tends to keep too many entries, i.e. to set too many $\hat{y}_i = y_i$.

We will see that model selection using a constant penalty to the $L_0$ norm suffers from the same problem as $C_p$ and cross-validation
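The hard-thresholding rule is a one-liner in NumPy. The sketch below is illustrative only: the function name `hard_threshold` and the sample inputs are made up, and $\sigma$ is assumed known. The second half shows the weakness mentioned above: when $\mu = 0$, roughly 16% of pure-noise entries still exceed $\sqrt{2}\,\sigma$ and are kept.

```python
import numpy as np

def hard_threshold(y, sigma):
    """Keep y_i when |y_i| > sqrt(2) * sigma, otherwise set it to zero."""
    y = np.asarray(y, dtype=float)
    return np.where(np.abs(y) > np.sqrt(2.0) * sigma, y, 0.0)

print(hard_threshold([0.3, -1.2, 1.5, -4.0], sigma=1.0))

# With mu = 0 every entry is pure noise, yet a sizeable fraction survives.
rng = np.random.default_rng(0)
noise = rng.normal(size=100_000)
kept_fraction = np.mean(hard_threshold(noise, sigma=1.0) != 0.0)
print(kept_fraction)
```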

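1.7 Two reasons why the methods above aren't ideal

(Continuing the denoising problem)

1. Other estimators can achieve better prediction error

suppose the real $\mu = 0$

then our risk is: $E\|\hat{y}\|^2 = \sum_{i=1}^{p} E\left[y_i^2\, I_{|y_i| > \sqrt{2}\sigma}\right] \approx 0.57\,p\,\sigma^2$

consider the James Stein estimator

whereas a different procedure, such as James Stein, has risk $2\sigma^2$

$$\hat{\mu}_{JS} = \left(1 - \frac{(p-2)\,\sigma^2}{\|y\|^2}\right) y$$

(for $\sigma^2 = 1$ the shrinkage factor is $1 - (p-2)/\|y\|^2$)

The following bound on the risk of $\hat{\mu}_{JS}$ is offered without proof:

$$E\|\hat{\mu}_{JS} - \mu\|^2 \le \sigma^2\left(p - \frac{p-2}{1 + \frac{\|\mu\|^2}{(p-2)\,\sigma^2}}\right)$$

plugging in $\|\mu\|^2 = 0$, we get a bound of $2\sigma^2$. Doesn't even depend on $p$!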
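The two risks at $\mu = 0$ can be compared by simulation. This is a rough sketch with assumed values of `p`, `sigma` and the number of trials; it uses the plain James Stein estimator (no positive-part correction).

```python
import numpy as np

rng = np.random.default_rng(0)
p, trials, sigma = 100, 5000, 1.0
y = sigma * rng.normal(size=(trials, p))     # mu = 0, so y is pure noise

# Hard thresholding at sqrt(2) * sigma; with mu = 0 the loss is ||estimate||^2.
ht = np.where(np.abs(y) > np.sqrt(2.0) * sigma, y, 0.0)
ht_risk = np.mean(np.sum(ht ** 2, axis=1))

# James Stein shrinkage toward the origin.
norm_sq = np.sum(y ** 2, axis=1, keepdims=True)
js = (1.0 - (p - 2) * sigma ** 2 / norm_sq) * y
js_risk = np.mean(np.sum(js ** 2, axis=1))

# First number should be near 0.57 (risk per coordinate), second near 2 (total risk).
print(ht_risk / (p * sigma ** 2), js_risk / sigma ** 2)
```

The thresholding risk grows linearly in `p`, while the James Stein risk stays near $2\sigma^2$ however large `p` is.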

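2. They aren't consistent (this is an $n \to \infty$ argument)

suppose we get more iid observations $y_i \sim N(\mu, \sigma^2 I)$, $i = 1, \dots, n$

suppose that $\mu$ has sparsity $k^*$

Now we want to minimize $C_p = \sum_{i=1}^{n} \|y_i - \hat{y}\|^2 + 2\sigma^2\|\hat{y}\|_0$

Consistency would require that $P(C_p(k^*) < C_p(k)) \to 1$ for all $k \ne k^*$

We're going to vary the parameter $k$ and compute $C_p(k)$ for each $k$. The size-$k$ model estimates the first $k$ coordinates by the sample means $\bar{y}_j = \frac{1}{n}\sum_{i=1}^{n} y_{ij}$ and sets the rest to zero.

$$\begin{aligned}
C_p(k) &= \sum_{i=1}^{n} \|y_i - \hat{y}\|^2 + 2\sigma^2\|\hat{y}\|_0 \\
&= \sum_{i=1}^{n}\left[\sum_{j=1}^{k}(y_{ij} - \bar{y}_j)^2 + \sum_{j=k+1}^{p} y_{ij}^2\right] + 2\sigma^2 k \\
&= \left[\sum_{j=1}^{k}\sum_{i=1}^{n}(y_{ij} - \bar{y}_j)^2 + \sum_{j=k+1}^{p}\sum_{i=1}^{n} y_{ij}^2\right] + 2\sigma^2 k \\
&= \left[\sum_{j=1}^{k}\left(\sum_{i=1}^{n} y_{ij}^2 - n\bar{y}_j^2\right) + \sum_{j=k+1}^{p}\sum_{i=1}^{n} y_{ij}^2\right] + 2\sigma^2 k \\
&= \left[\sum_{j=1}^{p}\sum_{i=1}^{n} y_{ij}^2 - n\sum_{j=1}^{k}\bar{y}_j^2\right] + 2\sigma^2 k \\
&= -n\sum_{j=1}^{k}\bar{y}_j^2 + 2\sigma^2 k + \underbrace{\sum_{i=1}^{n}\|y_i\|^2}_{\text{constant for all } k}
\end{aligned}$$

consider $k > k^*$ (a model that is too large), with the nonzero entries of $\mu$ in the first $k^*$ coordinates.

$$C_p(k^*) - C_p(k) = 2\sigma^2(k^* - k) + \sum_{j=k^*+1}^{k} n\bar{y}_j^2$$

each $\bar{y}_j \sim N\left(\mu_j, \frac{\sigma^2}{n}\right)$, and $\mu_j = 0$ for $j > k^*$, so $n\bar{y}_j^2/\sigma^2 \sim \chi^2_1$ and $\sum_{j=k^*+1}^{k} n\bar{y}_j^2 \sim \sigma^2\chi^2_{k-k^*}$

this expression doesn't depend on $n$ anymore, so $P(C_p(k^*) > C_p(k)) = P\left(\chi^2_{k-k^*} > 2(k - k^*)\right)$ stays positive no matter how large $n$ gets

this proof seems slightly fishy, because for each model size $k$, I'm arbitrarily picking the first $k$ entries to threshold

but you can replace all the sums up to $k$ with sums over any size $k$ subset

now compute $C_p$ for all subsets, and you'll get the same result

in contrast, the Bayesian information criterion works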
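The inconsistency can be seen in a small simulation. The sketch below makes several assumptions that are not in the notes: $\sigma = 1$, $\mu$ has $k^* = 3$ entries equal to 1, the candidate models are nested (keep the first $k$ coordinates), and the coordinate means are drawn directly from their sampling distribution. The function name `wrong_size_rate` is hypothetical. A penalty of 2 per parameter corresponds to $C_p$; a penalty of $\log n$ per parameter corresponds to BIC in this known-variance Gaussian setting.

```python
import numpy as np

def wrong_size_rate(n, penalty_per_param, p=10, k_star=3, trials=4000, seed=0):
    """Fraction of trials in which the selected size differs from k_star.

    Assumes sigma = 1, mu = (1, ..., 1, 0, ..., 0) with k_star ones, and nested
    models that keep the first k coordinates. The coordinate means are drawn
    directly as ybar_j ~ N(mu_j, 1/n).
    """
    rng = np.random.default_rng(seed)
    mu = np.zeros(p)
    mu[:k_star] = 1.0
    ybar = mu + rng.normal(size=(trials, p)) / np.sqrt(n)
    # Criterion up to a constant: -n * sum_{j<=k} ybar_j^2 + penalty * k, for k = 0..p.
    gain = np.concatenate([np.zeros((trials, 1)), np.cumsum(n * ybar ** 2, axis=1)], axis=1)
    criterion = -gain + penalty_per_param * np.arange(p + 1)
    return float(np.mean(np.argmin(criterion, axis=1) != k_star))

for n in (100, 10_000):
    # columns: n, error rate with penalty 2 (C_p), error rate with penalty log n (BIC)
    print(n, wrong_size_rate(n, 2.0), wrong_size_rate(n, np.log(n)))
```

With the constant penalty the error rate does not shrink as `n` grows, while with the $\log n$ penalty it heads toward zero.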

1.8 Bayesian Info Criterion

We have a collection of models to select from $\{M_i\}$, indexed by $i$

Model $M_i$ has parameter vector $\theta_i$ associated with it. Let $|\theta_i|$ denote the dimension of $\theta_i$.

Pick model with highest marginal probability: $P(y \mid M_i) = \int f(y \mid \theta_i)\, g_i(\theta_i)\, d\theta_i$

$$\log P(y \mid M_i) \approx \log L_i(y) - \frac{|\theta_i|}{2}\log n$$

where $L_i(y)$ is the likelihood of model $M_i$ evaluated at its MLE

derivation steps:

take $\log(\exp(\cdot))$ of integrand

Taylor expand around MLE

recognize Gaussian integral

take logs

deal with Hessian term using SLLN
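The derivation steps above can be sketched as follows. This is the standard Laplace-approximation argument, written under the assumptions that the prior $g_i$ is smooth and positive near the MLE and that the observations are iid; the symbols $\ell$, $J$, $I_1$ and $d$ are introduced here for illustration and do not appear in the notes.

```latex
\begin{aligned}
P(y \mid M_i) &= \int \exp\{\ell(\theta_i)\}\, g_i(\theta_i)\, d\theta_i,
  \qquad \ell(\theta_i) = \log f(y \mid \theta_i) \\
\ell(\theta_i) &\approx \ell(\hat\theta_i)
  - \tfrac{1}{2} (\theta_i - \hat\theta_i)^T J\, (\theta_i - \hat\theta_i),
  \qquad J = -\nabla^2 \ell(\hat\theta_i) \\
P(y \mid M_i) &\approx f(y \mid \hat\theta_i)\, g_i(\hat\theta_i)\, (2\pi)^{d/2}\, |J|^{-1/2},
  \qquad d = |\theta_i| \\
\log P(y \mid M_i) &\approx \log L_i(y) + \log g_i(\hat\theta_i)
  + \tfrac{d}{2} \log 2\pi - \tfrac{1}{2} \log |J| \\
J &\approx n\, I_1(\hat\theta_i) \;\Rightarrow\; \log |J| \approx d \log n + \log |I_1(\hat\theta_i)| \\
\log P(y \mid M_i) &\approx \log L_i(y) - \tfrac{d}{2} \log n + O(1)
\end{aligned}
```

The linear term of the Taylor expansion is absent because the gradient of $\ell$ vanishes at the MLE, and $J \approx n I_1$ is where the strong law of large numbers enters: $J$ is a sum of $n$ per-observation Hessians. Only the $\log n$ term grows with $n$, so the remaining terms are dropped.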


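1.9 Stein's unbiased risk estimate (SURE)

(just mentioning, not giving details here)

suppose $X$ is a Gaussian vector of length $n$ with mean $\mu$ and variance $\sigma^2 I$

we want to estimate $\mu$ by $\hat{\mu} = X + g(X)$ where $g$ must be almost differentiable

then

$$R = E\|\hat{\mu} - \mu\|^2 = n\sigma^2 + E\left[\|g(X)\|^2 + 2\sigma^2\, \mathrm{div}\, g(X)\right]$$

where $\mathrm{div}\, g(X) = \sum_i \frac{\partial}{\partial X_i} g_i(X) = Tr\left(\frac{\partial g}{\partial X}\right)$ (trace of Jacobian)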
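The SURE formula can be checked numerically on a simple choice of $g$. The sketch below assumes a linear shrinkage $g(X) = -aX$ (so $\mathrm{div}\, g = -an$), Gaussian $X$, and a made-up mean vector; these are illustrative choices, not the notes' example. The point is that the SURE quantity is computable from $X$ alone, yet its average matches the average of the true loss, which depends on the unknown $\mu$.

```python
import numpy as np

rng = np.random.default_rng(0)
n, sigma, a, trials = 20, 1.0, 0.3, 50_000
mu = np.linspace(-2.0, 2.0, n)               # hypothetical true mean vector

x = mu + sigma * rng.normal(size=(trials, n))
g = -a * x                                   # g(X) = -a X, so mu_hat = (1 - a) X
div_g = -a * n                               # trace of the Jacobian of g

sure = n * sigma ** 2 + np.sum(g ** 2, axis=1) + 2 * sigma ** 2 * div_g
loss = np.sum((x + g - mu) ** 2, axis=1)     # actual squared error, needs the unknown mu

print(np.mean(sure), np.mean(loss))          # the two averages should nearly agree
```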

1.10 SURE in action

leads to James Stein estimator
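As a sketch of how this works, take the James Stein shrinkage as the choice of $g$ in the SURE formula of section 1.9. The computation below assumes $n \ge 3$ and uses the notation of that section, where $n$ is the dimension of $X$.

```latex
\begin{aligned}
g(X) &= -\frac{(n-2)\,\sigma^2}{\|X\|^2}\, X \\
\|g(X)\|^2 &= \frac{(n-2)^2 \sigma^4}{\|X\|^2} \\
\operatorname{div} g(X) &= -(n-2)\,\sigma^2 \sum_{i=1}^{n}
  \left( \frac{1}{\|X\|^2} - \frac{2 X_i^2}{\|X\|^4} \right)
  = -\frac{(n-2)^2 \sigma^2}{\|X\|^2} \\
R &= n\sigma^2 + E\left[ \|g(X)\|^2 + 2\sigma^2 \operatorname{div} g(X) \right]
  = n\sigma^2 - (n-2)^2 \sigma^4\, E\left[ \frac{1}{\|X\|^2} \right]
\end{aligned}
```

The subtracted term is strictly positive, so the risk is below $n\sigma^2$, the risk of the unshrunk estimate $\hat{\mu} = X$, for every $\mu$.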

2 Multiple hypothesis testing

2.1 The setup

we have $H_i$, $i = 1, \dots, n$ hypotheses to test

we want to control the quality of our conclusions, using one of these metrics:

FWER: $P(\text{we make at least one false rejection})$

FDR: $E\left[\frac{\#\text{ of false rejections}}{\#\text{ of total rejections}}\right]$
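To make the two metrics concrete, here is a small sketch. The helper names are hypothetical. The first function assumes all nulls are true and the tests are independent, each run at level `alpha` with no correction; the second computes the ratio inside the FDR expectation for one set of decisions, using the common convention (an assumption here) that the ratio is 0 when nothing is rejected.

```python
import numpy as np

def fwer_uncorrected(n_tests, alpha):
    """P(at least one false rejection) when all n_tests nulls are true, the tests
    are independent, and each is run at level alpha with no correction."""
    return 1.0 - (1.0 - alpha) ** n_tests

def false_discovery_proportion(rejected, is_null):
    """(# false rejections) / (# total rejections), taken to be 0 when nothing is rejected."""
    rejected = np.asarray(rejected, dtype=bool)
    is_null = np.asarray(is_null, dtype=bool)
    total = rejected.sum()
    return float((rejected & is_null).sum() / total) if total > 0 else 0.0

print(fwer_uncorrected(20, 0.05))
print(false_discovery_proportion([True, True, False, True], [True, False, False, False]))
```

With 20 uncorrected tests at level 0.05 the chance of at least one false rejection is already about 64%. The FDR is the average of `false_discovery_proportion` over repeated experiments.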

2.2 Why do we need it?

Suppose you don't even care about making scientific conclusions. You just want to do good prediction.

You can think of model selection as a way to induce sparsity.

(Candes calls it testimation)

Back to the thresholding example

2.3 Controlling FDR using Benjamini Hochberg (BH)

if we have time

2.4 Proof of BH

if we have time

3 References

http://nscs00.ucmerced.edu/~nkumar4/BhatKumarBIC.pdf

The STATS 300 sequence, with thanks to Profs. Candes, Siegmund and Romano!
