IPASJ International Journal of Computer Science(IIJCS)

Web Site: http://www.ipasj.org/IIJCS/IIJCS.htm

A Publisher for Research Motivation ........ Email: editoriijcs@ipasj.org

Volume 2, Issue 1, January 2014 ISSN 2321-5992

Volume 2 Issue 1 January 2014 Page 51

ABSTRACT

Image fusion is the process of combining significant information from two or more images into a single image. In this work, mean- and variance-based image fusion is explored in the transform domain. The Discrete Cosine Transform (DCT), Discrete Wavelet Transform (DWT) and Non Subsampled Contourlet Transform (NSCT) are used for image fusion, and the Feature Similarity (FSIM) index is used to assess the quality of the fused image. Experimental results indicate that the proposed methods produce better results than other methods available in the literature.

Keywords: Mean, Variance, DCT, DWT, NSCT

1. INTRODUCTION

Image fusion has become a discipline that demands more general, formal solutions for different applications. Some applications require both high spatial and high spectral content in a single image, but imaging instruments are not capable of providing such content. One potential solution to this problem is image fusion. Image fusion methods can be implemented in two domains: the spatial domain and the transform domain. Spatial-domain methods, such as averaging, high-pass filtering, contrast-based and variance-based methods, manipulate the pixel intensities directly. Their disadvantage is that they produce spatial distortion in the fused image, a problem that is well addressed in the transform (frequency) domain. Transform-domain methods manipulate the coefficients of the transformed image, using transforms such as the Discrete Fourier Transform (DFT), Discrete Sine Transform (DST), Discrete Cosine Transform (DCT), Discrete Wavelet Transform (DWT) and Non Subsampled Contourlet Transform (NSCT).

The DCT has attracted widespread interest as a method of information coding [14]. The ISO/CCITT Joint Photographic Experts Group (JPEG) selected the DCT for its baseline coding technique. The DCT provides better energy compaction for natural images than other block-based transforms. The DWT has also attracted widespread interest as a method of information coding [1,6], and the Joint Photographic Experts Group selected the DWT as the baseline coding technique of JPEG2000. The DWT is used because it avoids the blocking artifacts introduced by DHT- and DCT-based image processing applications. The idea of the wavelet transform is to convolve the image to be analyzed with several oscillatory filter kernels representing different frequency bands. Hence, the DWT splits the image into different frequency bands. With one level of decomposition, the image is split into four bands, denoted LL, LH, HL and HH, where L indicates low-pass filtering and H indicates high-pass filtering. The LL band preserves the low-pass information and therefore contains more redundancy than the other bands. The Contourlet Transform (CT) uses a double filter bank structure to obtain sparse expansions of typical images having smooth contours [4,5]. In this double filter bank structure, the Laplacian Pyramid (LP) is used to capture point discontinuities, and the Directional Filter Bank (DFB) is used to link these point discontinuities into linear structures. The NSCT expansion is composed of basis images oriented at various directions in multiple scales, with flexible aspect ratios.

In this work, the DCT, DWT and NSCT are used to implement and compare image fusion algorithms. The DCT, DWT and NSCT are reviewed in Section 2. The proposed fusion algorithms are presented in Section 3, experimental results are discussed in Section 4, and concluding remarks are presented in Section 5.

Image Fusion using Various Transforms

G. Ramesh Babu (1) and K. Veera Swamy (2)

(1) P.G. Student, ECE Department, QIS College of Engineering & Technology, Ongole

(2) Professor, ECE Department, QIS College of Engineering & Technology, Ongole


2. TRANSFORMS

a. 2D-DCT

An image block with large changes in intensity values yields a random-looking DCT coefficient matrix, whereas an image block with no change in intensity values yields a coefficient matrix with a large value in the first element and zeros in all other elements. The first element of the DCT coefficient matrix [14] represents the DC value of the image block. The DCT of an image block of size n × n is given below:

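$$G(u,v) = \alpha(u)\,\alpha(v)\sum_{x=0}^{n-1}\sum_{y=0}^{n-1} g(x,y)\cos\left[\frac{(2x+1)u\pi}{2n}\right]\cos\left[\frac{(2y+1)v\pi}{2n}\right], \quad u,v = 0,1,\dots,n-1 \tag{1}$$

where

$$\alpha(u) = \begin{cases}\sqrt{1/n}, & u = 0\\ \sqrt{2/n}, & u > 0\end{cases} \qquad \alpha(v) = \begin{cases}\sqrt{1/n}, & v = 0\\ \sqrt{2/n}, & v > 0\end{cases}$$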

The inverse DCT (IDCT) is given by equation (2):

$$g(x,y) = \sum_{u=0}^{n-1}\sum_{v=0}^{n-1}\alpha(u)\,\alpha(v)\,G(u,v)\cos\left[\frac{(2x+1)u\pi}{2n}\right]\cos\left[\frac{(2y+1)v\pi}{2n}\right], \quad x,y = 0,1,\dots,n-1 \tag{2}$$

The DCT gets its name from the fact that its basis matrix $A_D$ is obtained as a function of cosines. $A_D$ of any order n is generated using equation (3):

$$A_D(i,j) = \begin{cases}\dfrac{1}{\sqrt{n}}\cos\left[\dfrac{(2j+1)i\pi}{2n}\right], & i = 0,\; j = 0,1,\dots,n-1\\[2ex] \sqrt{\dfrac{2}{n}}\cos\left[\dfrac{(2j+1)i\pi}{2n}\right], & i = 1,2,\dots,n-1,\; j = 0,1,\dots,n-1\end{cases} \tag{3}$$
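As a rough illustration, equations (1) to (3) can be sketched in plain Python (standard library only). The helper names `dct_matrix`, `dct2` and `idct2` are our own, not from the paper. The sketch relies on the fact that, with $A_D$ from equation (3), equation (1) can be written as $G = A_D\,g\,A_D^T$, and equation (2) as $g = A_D^T\,G\,A_D$ because $A_D$ is orthonormal.

```python
import math


def dct_matrix(n):
    """Basis matrix A_D of equation (3); row i is the i-th cosine basis vector."""
    rows = []
    for i in range(n):
        scale = math.sqrt(1.0 / n) if i == 0 else math.sqrt(2.0 / n)
        rows.append([scale * math.cos((2 * j + 1) * i * math.pi / (2 * n)) for j in range(n)])
    return rows


def matmul(p, q):
    """Plain list-of-lists matrix product p @ q."""
    return [[sum(p[r][k] * q[k][c] for k in range(len(q))) for c in range(len(q[0]))]
            for r in range(len(p))]


def transpose(p):
    return [list(col) for col in zip(*p)]


def dct2(block):
    """Equation (1) in matrix form: G = A_D g A_D^T."""
    a = dct_matrix(len(block))
    return matmul(matmul(a, block), transpose(a))


def idct2(coeffs):
    """Equation (2) in matrix form: g = A_D^T G A_D, since A_D is orthonormal."""
    a = dct_matrix(len(coeffs))
    return matmul(matmul(transpose(a), coeffs), a)


# A flat 4 x 4 block: only the DC term G(0,0) = n * 10 is non-zero.
flat = [[10.0] * 4 for _ in range(4)]
print(round(dct2(flat)[0][0], 6))
```

The flat-block example reproduces the behaviour described above: one large first element and (numerically) zero AC coefficients.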

b. 2D-DWT

In the DWT [1,6,7], an image is processed by passing it through an analysis filter bank followed by a decimation operation [ ]. This analysis filter bank, which consists of a low-pass and a high-pass filter at each decomposition stage, is commonly used in image compression. When an image passes through these filters, it is split into two bands. The low-pass filter, which corresponds to an averaging operation, extracts the coarse information of the image. The high-pass filter, which corresponds to a differencing operation, extracts the detail information of the image. The output of each filtering operation is then decimated by two. The basis functions are generated from the mother wavelet $\psi$ as

$$\psi_{a,b}(x) = \frac{1}{\sqrt{a}}\,\psi\left(\frac{x-b}{a}\right) \tag{4}$$

where a is the scale coefficient and b is the shift coefficient. All other basis functions are scaled and shifted versions of the mother wavelet.
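A minimal sketch of equation (4), assuming the Haar wavelet as the mother wavelet $\psi$ (the wavelet used in the experiments of Section 4). The numerical check suggests that the factor $1/\sqrt{a}$ keeps the energy of every scaled and shifted version equal to one. The function names are illustrative only.

```python
import math


def haar_psi(t):
    """Haar mother wavelet: +1 on [0, 0.5), -1 on [0.5, 1), 0 elsewhere."""
    if 0.0 <= t < 0.5:
        return 1.0
    if 0.5 <= t < 1.0:
        return -1.0
    return 0.0


def psi_ab(x, a, b):
    """Equation (4): psi_{a,b}(x) = psi((x - b) / a) / sqrt(a), with scale a > 0 and shift b."""
    return haar_psi((x - b) / a) / math.sqrt(a)


def energy(a, b, lo=-5.0, hi=5.0, steps=10000):
    """Midpoint-rule estimate of the integral of psi_{a,b}(x)**2 over [lo, hi]."""
    dx = (hi - lo) / steps
    return sum(psi_ab(lo + (k + 0.5) * dx, a, b) ** 2 for k in range(steps)) * dx


for a, b in [(1.0, 0.0), (2.0, 1.0), (0.5, 0.25)]:
    print(a, b, round(energy(a, b), 6))
```

Stretching the wavelet (a = 2) halves its height by $\sqrt{2}$, and compressing it (a = 0.5) raises it by $\sqrt{2}$, so the area under $\psi_{a,b}^2$ stays the same.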

In the two-dimensional DWT, a two-dimensional scaling function $\varphi(x,y)$ and three two-dimensional wavelets $\psi^H(x,y)$, $\psi^V(x,y)$ and $\psi^D(x,y)$ are required. Each is the product of a one-dimensional scaling function $\varphi$ and the corresponding wavelet $\psi$. Excluding products that produce one-dimensional results, like $\varphi(x)\psi(x)$, the four remaining products produce the separable scaling function

$$\varphi(x,y) = \varphi(x)\,\varphi(y) \tag{5}$$

and the separable, directionally sensitive wavelets

$$\psi^H(x,y) = \psi(x)\,\varphi(y) \tag{6}$$

$$\psi^V(x,y) = \varphi(x)\,\psi(y) \tag{7}$$

$$\psi^D(x,y) = \psi(x)\,\psi(y) \tag{8}$$

These wavelets measure functional variations (intensity or gray-level variations for images) along different directions: $\psi^H$ measures variations along columns (horizontal edges), $\psi^V$ corresponds to variations along rows (vertical edges), and $\psi^D$ corresponds to variations along diagonals. The scaled and translated basis functions are as follows:

$$\varphi_{j,m,n}(x,y) = 2^{j/2}\,\varphi\left(2^j x - m,\; 2^j y - n\right) \tag{9}$$

$$\psi^i_{j,m,n}(x,y) = 2^{j/2}\,\psi^i\left(2^j x - m,\; 2^j y - n\right), \quad i \in \{H, V, D\} \tag{10}$$
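To make equations (5) to (10) concrete, the sketch below builds the separable 2-D functions from the 1-D Haar scaling function and wavelet (an assumption for illustration; the equations hold for any separable pair). Here x is taken as the row coordinate, so $\psi^H$ changes sign only as x moves down a column, which is why it responds to horizontal edges. All function names are hypothetical.

```python
import math


def phi(t):
    """1-D Haar scaling function: 1 on [0, 1), 0 elsewhere."""
    return 1.0 if 0.0 <= t < 1.0 else 0.0


def psi(t):
    """1-D Haar wavelet: +1 on [0, 0.5), -1 on [0.5, 1), 0 elsewhere."""
    if 0.0 <= t < 0.5:
        return 1.0
    if 0.5 <= t < 1.0:
        return -1.0
    return 0.0


def phi_2d(x, y):
    return phi(x) * phi(y)  # equation (5)


def psi_h(x, y):
    return psi(x) * phi(y)  # equation (6): changes sign along x only


def psi_v(x, y):
    return phi(x) * psi(y)  # equation (7): changes sign along y only


def psi_d(x, y):
    return psi(x) * psi(y)  # equation (8): checkerboard (diagonal) pattern


WAVELETS = {"H": psi_h, "V": psi_v, "D": psi_d}


def phi_jmn(j, m, n, x, y):
    """Equation (9): 2^(j/2) * phi(2^j x - m, 2^j y - n)."""
    return 2 ** (j / 2) * phi_2d(2 ** j * x - m, 2 ** j * y - n)


def psi_jmn(i, j, m, n, x, y):
    """Equation (10) for a direction i in {"H", "V", "D"}."""
    return 2 ** (j / 2) * WAVELETS[i](2 ** j * x - m, 2 ** j * y - n)


print(psi_h(0.25, 0.25), psi_h(0.75, 0.25), psi_h(0.25, 0.75))
```

Increasing j halves the support of each function and multiplies its height by $2^{j/2}$, while m and n slide it across the image plane.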

where the index i identifies the directional wavelets and j is the scale index. The DWT of an image $g(x,y)$ of size $M \times M$ is then

$$W_\varphi(j_0,m,n) = \frac{1}{M}\sum_{x=0}^{M-1}\sum_{y=0}^{M-1} g(x,y)\,\varphi_{j_0,m,n}(x,y) \tag{11}$$

$$W_\psi^i(j,m,n) = \frac{1}{M}\sum_{x=0}^{M-1}\sum_{y=0}^{M-1} g(x,y)\,\psi^i_{j,m,n}(x,y), \quad i \in \{H, V, D\} \tag{12}$$

where $j_0$ is an arbitrary starting scale and $j \ge j_0$. The inverse DWT, which reconstructs $g(x,y)$ from the coefficients of equations (11) and (12), is given in equation (13):

$$g(x,y) = \frac{1}{M}\sum_{m}\sum_{n} W_\varphi(j_0,m,n)\,\varphi_{j_0,m,n}(x,y) + \frac{1}{M}\sum_{i=H,V,D}\;\sum_{j=j_0}^{\infty}\sum_{m}\sum_{n} W_\psi^i(j,m,n)\,\psi^i_{j,m,n}(x,y) \tag{13}$$

The decomposition at one scale is shown in Figure 1.

Figure 1 Decomposition in DWT domain [diagram: one analysis stage splits $W_\varphi(j+1,m,n)$ into $W_\varphi(j,m,n)$, $W_\psi^H(j,m,n)$, $W_\psi^V(j,m,n)$ and $W_\psi^D(j,m,n)$]

Low-pass (L) filtered coefficients are stored in the left part of the matrix and high-pass (H) filtered coefficients in the right part. Because of the decimation, the total size of the transformed image is the same as that of the original image. After one level of decomposition, the image is split into four bands, denoted LL, HL, LH and HH, as shown in Figure 2.


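Figure 2 Pyramidal decomposition [diagram: the original image is filtered by L and H and downsampled by 2 along one direction, then each output is filtered by L and H and downsampled by 2 along the other direction, giving the LL, LH, HL and HH bands]

In the second level, the LL band is further decomposed into four bands, and the same procedure is continued for further decomposition levels. This process of filtering the image is called pyramidal decomposition. The DWT thus performs multiresolution image analysis [2,3]. Wavelet decomposition at the second level is shown in Figure 3.

Figure 3 Pyramidal structure of wavelet decomposition [layout: second-level subbands LL2, HL2, LH2 and HH2 together with first-level subbands HL1, LH1 and HH1]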
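The filter-bank view of Figures 1 to 3 can be sketched with the orthonormal Haar filters (the wavelet used in Section 4). This is a minimal list-based illustration, not an optimized implementation; it assumes even image dimensions, and the band-naming convention (first letter = filter applied along the rows, second = along the columns) is our own choice, since texts differ on which of LH and HL denotes horizontal detail.

```python
import math

S = 1.0 / math.sqrt(2.0)  # orthonormal Haar filter gain


def haar_1d(v):
    """Haar analysis of an even-length sequence: returns (low-pass half, high-pass half)."""
    low = [(v[k] + v[k + 1]) * S for k in range(0, len(v), 2)]
    high = [(v[k] - v[k + 1]) * S for k in range(0, len(v), 2)]
    return low, high


def ihaar_1d(low, high):
    """Haar synthesis: interleave the reconstructed even and odd samples."""
    out = []
    for lo, hi in zip(low, high):
        out += [(lo + hi) * S, (lo - hi) * S]
    return out


def transpose(m):
    return [list(col) for col in zip(*m)]


def dwt2_haar(img):
    """One decomposition level: filter and decimate along rows, then along columns."""
    rows = [haar_1d(r) for r in img]
    bands = {}
    for name, half in (("L", [r[0] for r in rows]), ("H", [r[1] for r in rows])):
        cols = [haar_1d(c) for c in transpose(half)]
        bands[name + "L"] = transpose([c[0] for c in cols])
        bands[name + "H"] = transpose([c[1] for c in cols])
    return bands


def idwt2_haar(bands):
    """Inverse of dwt2_haar: undo the column step, then the row step."""
    halves = {}
    for name in ("L", "H"):
        low_cols = transpose(bands[name + "L"])
        high_cols = transpose(bands[name + "H"])
        halves[name] = transpose([ihaar_1d(lo, hi) for lo, hi in zip(low_cols, high_cols)])
    return [ihaar_1d(lo, hi) for lo, hi in zip(halves["L"], halves["H"])]


# Pyramidal decomposition: the second level re-applies the transform to the LL band.
img = [[float((r * 7 + c * 3) % 11) for c in range(8)] for r in range(8)]
level1 = dwt2_haar(img)
level2 = dwt2_haar(level1["LL"])
```

Each band of `level1` is a quarter of the image, so the four bands together have the same size as the input, and `level2` splits only the LL quarter again, as in Figure 3.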

c. NSCT

The NSCT [10,11,12] is a shift-invariant version of the CT. The CT [4,5,8,9] employs the LP for multi-scale decomposition and the DFB for directional decomposition. To avoid the aliasing of the CT, the NSCT eliminates the downsampling and upsampling operations. It is built from nonsubsampled pyramid filter banks (NSPFBs) and nonsubsampled directional filter banks (NSDFBs). The NSPFB employed by the NSCT is a two-channel nonsubsampled filter bank (NFB). The filters for the next stage are obtained by upsampling the filters of the previous stage with the sampling matrix

$$D = 2I = \begin{pmatrix} 2 & 0 \\ 0 & 2 \end{pmatrix}$$

This gives the multi-scale property without the need for additional filter design. A J-stage NSPFB (for example, J = 3) produces J bandpass images and one lowpass image. The ideal frequency support of the lowpass filter at the j-th stage is the region $[-\pi/2^{j}, \pi/2^{j}]^2$. Accordingly, the ideal support of the equivalent highpass filter is the complement of the lowpass, namely the region $[-\pi/2^{j-1}, \pi/2^{j-1}]^2 \setminus [-\pi/2^{j}, \pi/2^{j}]^2$, and the equivalent filters of a J-level cascading NSPFB are given by

$$H_n^{eq}(z) = \begin{cases} H_1\left(z^{2^{n-1}I}\right)\displaystyle\prod_{j=0}^{n-2} H_0\left(z^{2^{j}I}\right), & 1 \le n \le J\\[2ex] \displaystyle\prod_{j=0}^{n-2} H_0\left(z^{2^{j}I}\right), & n = J+1 \end{cases} \tag{14}$$

where $H_0(z)$ and $H_1(z)$ denote the lowpass filter and the corresponding highpass filter at the first stage, respectively.

After a J-level NSCT decomposition, one lowpass subband image and $\sum_{j=1}^{J} 2^{l_j}$ bandpass directional subband images are obtained ($l_j$ denotes the directional decomposition level at the j-th scale), all of which have the same size as the input image.
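The nonsubsampled pyramid idea can be sketched as follows. This is a simplified, à trous-style stand-in, not the NSCT filters of [10]: the base lowpass filter (1/4, 1/2, 1/4) is an assumption, the highpass is taken as $H_1 = 1 - H_0$ so that synthesis is a plain sum, and the directional (NSDFB) stage is omitted. It does show the two properties described above: stage j uses the base filter upsampled by $2^j$ (the sampling matrix D = 2I applied repeatedly, as in equation (14)), and all J + 1 outputs keep the input size. Function names are hypothetical.

```python
def smooth(img, step):
    """Separable lowpass filtering with taps (1/4, 1/2, 1/4) spaced `step` pixels apart,
    i.e. the base filter upsampled by `step` in both directions (D = step * I).
    Borders are handled periodically; nothing is decimated."""
    rows, cols = len(img), len(img[0])
    tmp = [[0.25 * img[r][(c - step) % cols] + 0.5 * img[r][c] + 0.25 * img[r][(c + step) % cols]
            for c in range(cols)] for r in range(rows)]
    return [[0.25 * tmp[(r - step) % rows][c] + 0.5 * tmp[r][c] + 0.25 * tmp[(r + step) % rows][c]
             for c in range(cols)] for r in range(rows)]


def nonsubsampled_pyramid(img, levels):
    """Return (bandpass images, lowpass image); every output has the size of img."""
    bandpass, current = [], img
    for j in range(levels):
        low = smooth(current, 2 ** j)  # stage-j filter = base filter upsampled by 2^j
        bandpass.append([[a - b for a, b in zip(ra, rb)] for ra, rb in zip(current, low)])
        current = low
    return bandpass, current


def reconstruct(bandpass, low):
    """Synthesis for this particular choice H1 = 1 - H0: add all subbands back together."""
    out = [row[:] for row in low]
    for band in bandpass:
        out = [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(out, band)]
    return out


image = [[float((3 * r * r + 5 * c) % 17) for c in range(16)] for r in range(16)]
bands, low = nonsubsampled_pyramid(image, 3)  # J = 3 stages: 3 bandpass + 1 lowpass
```

Because nothing is decimated, shifting the input simply shifts every subband, which is the shift-invariance that the NSCT gains over the CT.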

3. PROPOSED FUSION ALGORITHMS

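The block diagram of the fusion process is shown in Figure 4.

Figure 4 Fusion process [block diagram: Source Image 1 and Source Image 2 → Transform → Fusion decision map → Inverse Transform → Fused Image]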

3.1 Fusion algorithm using 2D-DCT and Mean

1. Divide the source images into sub-blocks.

2. Apply the 2D-DCT to each sub-block using equation (1).

3. Compute the mean of each sub-block.

4. Compare the mean values of the corresponding sub-blocks of the source images.

5. Choose the sub-block with the higher mean.

6. Compute the 2D-IDCT of all chosen sub-blocks using equation (2) to obtain the fused image.

3.2 Fusion algorithm using 2D-DCT and Variance

1. Divide the source images into sub-blocks.

2. Apply the 2D-DCT to each sub-block using equation (1).

3. Compute the variance of each sub-block.

4. Compare the variance values of the corresponding sub-blocks of the source images.

5. Choose the sub-block with the higher variance.

6. Compute the 2D-IDCT of all chosen sub-blocks using equation (2) to obtain the fused image.
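Sections 3.1 and 3.2 can be sketched as follows. The paper does not say whether the "mean" and "variance" are taken over the raw DCT coefficients or over the pixels they represent; this sketch assumes the latter and computes both directly from the coefficients (the DC term gives the mean, Parseval's relation gives the variance). The block size, ties going to the first image, and square inputs whose side is a multiple of the block size are further assumptions; all function names are hypothetical.

```python
import math


def dct_matrix(n):
    """Basis matrix A_D of equation (3)."""
    return [[(math.sqrt(1.0 / n) if i == 0 else math.sqrt(2.0 / n))
             * math.cos((2 * j + 1) * i * math.pi / (2 * n)) for j in range(n)] for i in range(n)]


def triple(a, g, b):
    """Return the matrix product a @ g @ b for lists of lists."""
    ag = [[sum(a[r][k] * g[k][c] for k in range(len(g))) for c in range(len(g[0]))]
          for r in range(len(a))]
    return [[sum(ag[r][k] * b[k][c] for k in range(len(b))) for c in range(len(b[0]))]
            for r in range(len(ag))]


def dct2(g):
    a = dct_matrix(len(g))
    return triple(a, g, [list(col) for col in zip(*a)])  # G = A g A^T, equation (1)


def idct2(coeffs):
    a = dct_matrix(len(coeffs))
    return triple([list(col) for col in zip(*a)], coeffs, a)  # g = A^T G A, equation (2)


def block_mean(coeffs):
    """Pixel mean of an n x n block from its DCT: G(0,0) = n * mean."""
    return coeffs[0][0] / len(coeffs)


def block_variance(coeffs):
    """Pixel variance from the DCT via Parseval: (sum of G^2 - G(0,0)^2) / n^2."""
    n = len(coeffs)
    energy = sum(v * v for row in coeffs for v in row)
    return (energy - coeffs[0][0] ** 2) / (n * n)


def fuse_dct(img1, img2, bs, criterion):
    """Per bs x bs block, keep the source whose DCT block has the larger criterion
    value (ties go to img1), then invert the kept block."""
    size = len(img1)
    fused = [[0.0] * size for _ in range(size)]
    for r0 in range(0, size, bs):
        for c0 in range(0, size, bs):
            g1 = dct2([row[c0:c0 + bs] for row in img1[r0:r0 + bs]])
            g2 = dct2([row[c0:c0 + bs] for row in img2[r0:r0 + bs]])
            chosen = g1 if criterion(g1) >= criterion(g2) else g2
            for r, row in enumerate(idct2(chosen)):
                fused[r0 + r][c0:c0 + bs] = row
    return fused
```

Pass `block_mean` for Section 3.1 or `block_variance` for Section 3.2. Because each kept block is inverted unchanged, the fused image in this sketch is a mosaic of source blocks.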

3.3 Fusion algorithm using 2D-DWT and Mean

1. Divide the source images into sub-blocks.

2. Apply the 2D-DWT to each sub-block using equations (11) and (12).

3. Compute the mean of each sub-block.

4. Compare the mean values of the corresponding sub-blocks of the source images.

5. Choose the sub-block with the higher mean.

6. Compute the 2D-IDWT of all chosen sub-blocks using equation (13) to obtain the fused image.

3.4 Fusion algorithm using 2D-DWT and Variance

1. Divide the source images into sub-blocks.

2. Apply the 2D-DWT to each sub-block using equations (11) and (12).

3. Compute the variance of each sub-block.

4. Compare the variance values of the corresponding sub-blocks of the source images.

5. Choose the sub-block with the higher variance.

6. Compute the 2D-IDWT of all chosen sub-blocks using equation (13) to obtain the fused image.
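The DWT variants of Sections 3.3 and 3.4 follow the same pattern, here sketched with a one-level orthonormal Haar transform per block (the Haar wavelet is the one used in Section 4). As in a DCT-based version, the mean and variance are assumed to be those of the block's pixels, recovered here from the Haar coefficients; the names, the quadrant layout and the tie-breaking rule are illustrative assumptions.

```python
import math

S = 1.0 / math.sqrt(2.0)


def haar_rows(m):
    """One Haar step on every row: low-pass half on the left, high-pass half on the right."""
    out = []
    for row in m:
        low = [(row[k] + row[k + 1]) * S for k in range(0, len(row), 2)]
        high = [(row[k] - row[k + 1]) * S for k in range(0, len(row), 2)]
        out.append(low + high)
    return out


def ihaar_rows(m):
    """Inverse of haar_rows."""
    half = len(m[0]) // 2
    out = []
    for row in m:
        rec = []
        for lo, hi in zip(row[:half], row[half:]):
            rec += [(lo + hi) * S, (lo - hi) * S]
        out.append(rec)
    return out


def transpose(m):
    return [list(col) for col in zip(*m)]


def dwt2(block):
    """One-level 2-D Haar DWT, rows then columns; LL lands in the top-left quadrant."""
    return transpose(haar_rows(transpose(haar_rows(block))))


def idwt2(coeffs):
    """Inverse of dwt2: undo the column step, then the row step."""
    return ihaar_rows(transpose(ihaar_rows(transpose(coeffs))))


def block_stats(coeffs):
    """Pixel (mean, variance) of a block from its Haar coefficients: each LL value is
    (sum of a 2 x 2 pixel group) / 2, and the orthonormal transform preserves energy."""
    size = len(coeffs)
    half = size // 2
    mean = 2.0 * sum(coeffs[r][c] for r in range(half) for c in range(half)) / (size * size)
    energy = sum(v * v for row in coeffs for v in row)
    return mean, energy / (size * size) - mean * mean


def fuse_dwt(img1, img2, bs, use_variance):
    """Per block, keep the source with the larger mean or variance (ties go to img1),
    then apply the inverse DWT to the kept coefficients."""
    pick = 1 if use_variance else 0
    size = len(img1)
    fused = [[0.0] * size for _ in range(size)]
    for r0 in range(0, size, bs):
        for c0 in range(0, size, bs):
            w1 = dwt2([row[c0:c0 + bs] for row in img1[r0:r0 + bs]])
            w2 = dwt2([row[c0:c0 + bs] for row in img2[r0:r0 + bs]])
            chosen = w1 if block_stats(w1)[pick] >= block_stats(w2)[pick] else w2
            for r, row in enumerate(idwt2(chosen)):
                fused[r0 + r][c0:c0 + bs] = row
    return fused
```

`fuse_dwt(img1, img2, bs, False)` corresponds to Section 3.3 and `fuse_dwt(img1, img2, bs, True)` to Section 3.4.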

3.5 Fusion algorithm using NSCT and Mean

1. Divide the source images into sub-blocks.

2. Apply the NSCT to each sub-block as described in Section 2c.

3. Compute the mean of each sub-block.

4. Compare the mean values of the corresponding sub-blocks of the source images.

5. Choose the sub-block with the higher mean.

6. Compute the inverse NSCT (INSCT) of all chosen sub-blocks to obtain the fused image.

3.6 Fusion algorithm using NSCT and Variance

1. Divide the source images into sub-blocks.

2. Apply the NSCT to each sub-block as described in Section 2c.

3. Compute the variance of each sub-block.

4. Compare the variance values of the corresponding sub-blocks of the source images.

5. Choose the sub-block with the higher variance.

6. Compute the inverse NSCT (INSCT) of all chosen sub-blocks to obtain the fused image.

4. EXPERIMENTAL RESULTS

Experiments are performed on the clock image. Two metrics are used to test the performance of the proposed algorithms: the Peak Signal to Noise Ratio (PSNR) and the Feature Similarity (FSIM) index [13]. A frequently used global objective quality measure is the mean square error (MSE), defined as

$$MSE = \frac{1}{m\,n}\sum_{x=0}^{n-1}\sum_{y=0}^{m-1}\left|g(x,y) - g'(x,y)\right|^2 \tag{15}$$

where n and m are the numbers of rows and columns in the image, respectively, and $g(x,y)$ and $g'(x,y)$ are the pixel values in the original and reconstructed images. The Peak Signal to Noise Ratio (PSNR, in dB) [14] is calculated as

$$PSNR = 10\log_{10}\frac{255^2}{MSE} \tag{16}$$

where the usable gray levels range from 0 to 255. The Feature Similarity (FSIM) index is computed as

$$FSIM = \frac{\sum_{x\in\Omega} S_L(x)\,PC_m(x)}{\sum_{x\in\Omega} PC_m(x)} \tag{17}$$

where $\Omega$ denotes the whole spatial domain of the image, $PC_m(x)$ is the phase congruency map (the maximum of the phase congruency values of the two compared images), used as a weight, and $S_L(x)$ is the local similarity, which combines the phase congruency similarity and the gradient magnitude similarity. Fused images are shown in Figure 5. The Haar wavelet is used for the 2D-DWT-based decomposition. In the NSCT-based decomposition, two filters (a directional filter and a pyramidal filter) are used.
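Equations (15) and (16), and the final pooling step of equation (17), can be sketched as below. Computing the $S_L$ and $PC_m$ maps themselves (phase congruency and gradient similarity, see [13]) is outside the scope of this sketch, so `fsim_pool` takes them as precomputed inputs; the function names are our own.

```python
import math


def mse(ref, test):
    """Equation (15): mean squared error over an n x m image (n rows, m columns)."""
    n, m = len(ref), len(ref[0])
    return sum((ref[x][y] - test[x][y]) ** 2 for x in range(n) for y in range(m)) / (n * m)


def psnr(ref, test, peak=255.0):
    """Equation (16): PSNR in dB for gray levels in [0, peak]; infinite for identical images."""
    e = mse(ref, test)
    return math.inf if e == 0 else 10 * math.log10(peak ** 2 / e)


def fsim_pool(s_l, pc_m):
    """Pooling step of equation (17): average of S_L weighted by PC_m over the image."""
    num = sum(s * w for rs, rw in zip(s_l, pc_m) for s, w in zip(rs, rw))
    den = sum(w for rw in pc_m for w in rw)
    return num / den
```

Weighting by $PC_m$ lets structurally significant pixels (edges, corners) dominate the FSIM score, whereas MSE and PSNR weight every pixel equally.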

Figure 5 The clock source images (size 128 × 128) and fusion results of different algorithms: (a) fused image using the 2-D DCT method (b) fused image using the 2-D DWT method (c) fused image using the NSCT method

Experimental results are tabulated in Table 1. With variance-based selection, the DWT and NSCT methods give the best results on both metrics (FSIM 0.9703 and PSNR 34.0510 dB). For the DCT, mean-based selection gives the higher PSNR, while variance-based selection gives the higher FSIM.

Table 1: Performance of the proposed fusion algorithms

Method    FSIM (mean)   FSIM (variance)   PSNR in dB (mean)   PSNR in dB (variance)
2D-DCT    0.9595        0.9662            30.8623             30.3379
2D-DWT    0.9503        0.9703            30.5118             34.0510
NSCT      0.9405        0.9703            29.8426             34.0510

Experiments are also performed on medical images. The results are shown in Figure 6.

Figure 6 (a) CT (computed tomography) image (b) MRI image (c) fused image using the NSCT method

5. CONCLUSIONS

In this paper, mean- and variance-based fusion algorithms in the DCT, DWT and NSCT domains are proposed and discussed. The variance+DWT and variance+NSCT combinations performed better than the other combinations. Further, blocking artifacts are eliminated in the DWT and NSCT methods. The results are good for multi-focus and medical images. These techniques may be useful to doctors for easier diagnosis. The same techniques can be extended to other transforms available in the literature.

Acknowledgements

The authors would like to thank the AICTE authorities for providing facilities to carry out this work under the Research Promotion Scheme (RPS). The authors utilized the equipment and software purchased under RPS (Ref. No.: 20/AICTE/RIFD/RPS(Policy-III)55/2012-13).

References

[1] G. Pajares, J.M. de la Cruz, A wavelet-based image fusion tutorial, Pattern Recognition 37 (9) (2004) 1855-1872.

[2] H.A. Eltoukhy, S. Kavusi, A computationally efficient algorithm for multi-focus image reconstruction, Proceedings of SPIE Electronic Imaging (June 2003) 332-341.

[3] Z. Zhang, R.S. Blum, A categorization of multiscale-decomposition-based image fusion schemes with a performance study for a digital camera application, Proceedings of the IEEE 87 (8) (1999) 1315-1326.

[4] P.J. Burt, E.H. Adelson, The Laplacian pyramid as a compact image code, IEEE Transactions on Communications 31 (4) (1983) 532-540.

[5] A. Toet, Image fusion by a ratio of low-pass pyramid, Pattern Recognition Letters 9 (4) (1989) 245-253.

[6] H. Li, B.S. Manjunath, S.K. Mitra, Multisensor image fusion using the wavelet transform, Graphical Models and Image Processing 57 (3) (1995) 235-245.

[7] I. De, B. Chanda, A simple and efficient algorithm for multifocus image fusion using morphological wavelets, Signal Processing 86 (5) (2006) 924-936.

[8] M.N. Do, M. Vetterli, The contourlet transform: an efficient directional multiresolution image representation, IEEE Transactions on Image Processing 14 (12) (2005) 2091-2106.

[9] R.H. Bamberger, M.J.T. Smith, A filter bank for the directional decomposition of images: theory and design, IEEE Transactions on Signal Processing 40 (4) (1992) 882-893.

[10] A.L. da Cunha, J.P. Zhou, M.N. Do, The nonsubsampled contourlet transform: theory, design, and applications, IEEE Transactions on Image Processing 15 (10) (2006) 3089-3101.

[11] A.L. da Cunha, J.P. Zhou, M.N. Do, Nonsubsampled contourlet transform: filter design and application in denoising, in: IEEE International Conference on Image Processing, Genoa, Italy, 11-14 September 2005, pp. 749-752.

[12] J.P. Zhou, A.L. da Cunha, M.N. Do, Nonsubsampled contourlet transform: construction and application in enhancement, in: IEEE International Conference on Image Processing, Genoa, Italy, 11-14 September 2005, pp. 469-476.

[13] L. Zhang, L. Zhang, X. Mou, D. Zhang, FSIM: A feature similarity index for image quality assessment, IEEE Transactions on Image Processing 20 (8) (2011) 2378-2386.

[14] G.K. Wallace, The JPEG still picture compression standard, IEEE Transactions on Consumer Electronics 38 (1) (1992) xviii-xxxiv.

AUTHORS

G. Ramesh Babu received the B.Tech degree in Electronics and Communication Engineering from Prakasam Engineering College in 2011. He was admitted to the M.Tech programme on the basis of his GATE score and is now pursuing the M.Tech in Digital Electronics and Communication Systems at QIS College of Engineering and Technology, Ongole.

K. Veera Swamy received his M.Tech and Ph.D. from JNTU, Hyderabad. He has 16 years of teaching experience. He has published 49 research papers in international and national journals and conferences (international journals: 12, national journals: 1, international conferences: 21, national conferences: 15). He is a reviewer for IET Image Processing (UK) and the International Journal of Computer Theory and Engineering (IACSIT). He has executed MODROBS projects worth Rs. 14.5 lakhs in the area of antennas. Presently, he is executing a research project worth Rs. 12.5 lakhs on the development of medical image segmentation algorithms for easy diagnosis, sponsored by AICTE.


- Staycation As A Marketing Tool For Survival Post Covid-19 in Five Star Hotels in Pune CityDocument10 pagesStaycation As A Marketing Tool For Survival Post Covid-19 in Five Star Hotels in Pune CityInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Soil Stabilization of Road by Using Spent WashDocument7 pagesSoil Stabilization of Road by Using Spent WashInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- The Mexican Innovation System: A System's Dynamics PerspectiveDocument12 pagesThe Mexican Innovation System: A System's Dynamics PerspectiveInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- The Impact of Effective Communication To Enhance Management SkillsDocument6 pagesThe Impact of Effective Communication To Enhance Management SkillsInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Study of Customer Experience and Uses of Uber Cab Services in MumbaiDocument12 pagesStudy of Customer Experience and Uses of Uber Cab Services in MumbaiInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- A Digital Record For Privacy and Security in Internet of ThingsDocument10 pagesA Digital Record For Privacy and Security in Internet of ThingsInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- An Importance and Advancement of QSAR Parameters in Modern Drug Design: A ReviewDocument9 pagesAn Importance and Advancement of QSAR Parameters in Modern Drug Design: A ReviewInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Design and Detection of Fruits and Vegetable Spoiled Detetction SystemDocument8 pagesDesign and Detection of Fruits and Vegetable Spoiled Detetction SystemInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- A Comparative Analysis of Two Biggest Upi Paymentapps: Bhim and Google Pay (Tez)Document10 pagesA Comparative Analysis of Two Biggest Upi Paymentapps: Bhim and Google Pay (Tez)International Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Performance of Short Transmission Line Using Mathematical MethodDocument8 pagesPerformance of Short Transmission Line Using Mathematical MethodInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Synthetic Datasets For Myocardial Infarction Based On Actual DatasetsDocument9 pagesSynthetic Datasets For Myocardial Infarction Based On Actual DatasetsInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Advanced Load Flow Study and Stability Analysis of A Real Time SystemDocument8 pagesAdvanced Load Flow Study and Stability Analysis of A Real Time SystemInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Challenges Faced by Speciality Restaurants in Pune City To Retain Employees During and Post COVID-19Document10 pagesChallenges Faced by Speciality Restaurants in Pune City To Retain Employees During and Post COVID-19International Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Secured Contactless Atm Transaction During Pandemics With Feasible Time Constraint and Pattern For OtpDocument12 pagesSecured Contactless Atm Transaction During Pandemics With Feasible Time Constraint and Pattern For OtpInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Impact of Covid-19 On Employment Opportunities For Fresh Graduates in Hospitality &tourism IndustryDocument8 pagesImpact of Covid-19 On Employment Opportunities For Fresh Graduates in Hospitality &tourism IndustryInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- A Deep Learning Based Assistant For The Visually ImpairedDocument11 pagesA Deep Learning Based Assistant For The Visually ImpairedInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Anchoring of Inflation Expectations and Monetary Policy Transparency in IndiaDocument9 pagesAnchoring of Inflation Expectations and Monetary Policy Transparency in IndiaInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Predicting The Effect of Fineparticulate Matter (PM2.5) On Anecosystemincludingclimate, Plants and Human Health Using MachinelearningmethodsDocument10 pagesPredicting The Effect of Fineparticulate Matter (PM2.5) On Anecosystemincludingclimate, Plants and Human Health Using MachinelearningmethodsInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- GLFW OpenGL TriangleDocument4 pagesGLFW OpenGL TriangleiDenisNo ratings yet

- Introduction To Medical Imaging SystemsDocument13 pagesIntroduction To Medical Imaging SystemsYousef Ahmad2No ratings yet

- Dpreview GLOSSARYDocument76 pagesDpreview GLOSSARYlupaescu-2No ratings yet

- P TextDocument3,085 pagesP TextSean HarlowNo ratings yet

- Wa0005Document16 pagesWa0005Dharaneeswar Reddy VasanthuNo ratings yet

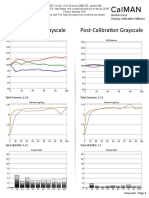

- Vizio E55-C2 CNET Review Calibration ResultsDocument3 pagesVizio E55-C2 CNET Review Calibration ResultsDavid KatzmaierNo ratings yet

- OpenGL Examples: Load Objects, Textures & ShadersDocument73 pagesOpenGL Examples: Load Objects, Textures & ShadersAbdul Razaq AnjumNo ratings yet

- Experiment 1 Lab Report (Ece)Document3 pagesExperiment 1 Lab Report (Ece)prasannanjaliNo ratings yet

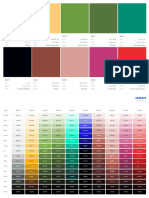

- Color palette with 10 colors, RGB, CMYK and name valuesDocument4 pagesColor palette with 10 colors, RGB, CMYK and name valuesValentina TorresNo ratings yet

- Online Error Reporting For Managing Quality Control Within RadiologyDocument6 pagesOnline Error Reporting For Managing Quality Control Within RadiologyAyman AliNo ratings yet

- Comparison of Digital and Conventional Radiography SystemsDocument2 pagesComparison of Digital and Conventional Radiography Systemsmdoni12No ratings yet

- DIP Notes Unit 5Document30 pagesDIP Notes Unit 5boddumeghana2220No ratings yet

- 'Exploring Color Theory PDFDocument12 pages'Exploring Color Theory PDFJacobo ZaharNo ratings yet

- H61M DS2 Rev.4.0 BitmapDocument2 pagesH61M DS2 Rev.4.0 BitmapHoàng Quốc Trung100% (2)

- Image Analysis With Ebimage: Gregoire Pau, Embl Heidelberg Gregoire - Pau@Embl - deDocument20 pagesImage Analysis With Ebimage: Gregoire Pau, Embl Heidelberg Gregoire - Pau@Embl - deRony Castro AlvarezNo ratings yet

- Session 1Document23 pagesSession 1Noorullah ShariffNo ratings yet

- Cs602 Assignment SolutionDocument5 pagesCs602 Assignment Solutioncs619finalproject.comNo ratings yet

- Full Pantone Solid Coated Color ChartDocument21 pagesFull Pantone Solid Coated Color Chartapi-243811262No ratings yet

- Anon 01Document1,470 pagesAnon 01Sara ChaudhuriNo ratings yet

- RGBD-D Image Analysis and Processing PDFDocument522 pagesRGBD-D Image Analysis and Processing PDFmateo garrido-lecaNo ratings yet

- Refrence Image Captured Image: Figure 5.1 Sequence DiagramDocument6 pagesRefrence Image Captured Image: Figure 5.1 Sequence Diagramvivekanand_bonalNo ratings yet

- MARIKO - S21 Morgan & Taylor - Order Form V1Document36 pagesMARIKO - S21 Morgan & Taylor - Order Form V1Hoa PhạmNo ratings yet

- A Binary Holiday ActivityDocument6 pagesA Binary Holiday ActivityJJ FajardoNo ratings yet

- Vizio M65-D0 (Sample 2) CNET Review Calibration ReportDocument3 pagesVizio M65-D0 (Sample 2) CNET Review Calibration ReportDavid KatzmaierNo ratings yet

- Source CodeDocument5 pagesSource Codeinfo.glcom5161No ratings yet

- CVISION PDFCompressor Evaluation for PDF Compression & OCRDocument119 pagesCVISION PDFCompressor Evaluation for PDF Compression & OCREdgar AlejandroNo ratings yet

- DS7201 Advanced Digital Image Processing University Question Paper Nov Dec 2017Document2 pagesDS7201 Advanced Digital Image Processing University Question Paper Nov Dec 2017kamalanathanNo ratings yet

- The Technology Behind The Elemental Demo (Unreal Engine 4)Document71 pagesThe Technology Behind The Elemental Demo (Unreal Engine 4)MatrixNeoHacker100% (1)

- Image Processing Using MATLABDocument46 pagesImage Processing Using MATLABamadeus99100% (1)

- Pricelist HippocampusDocument20 pagesPricelist HippocampusFannNo ratings yet