Eight Principles Guide Software Testing

Uploaded by Bahadur Asher

THE EIGHT BASIC PRINCIPLES OF TESTING

The following are eight basic principles of testing:

1. Define the expected output or result.
2. Do not test your own programs.
3. Inspect the results of each test completely.
4. Include test cases for invalid or unexpected conditions.
5. Test the program to see if it does what it is not supposed to do as well as what it is supposed to do.
6. Avoid disposable test cases unless the program itself is disposable.
7. Do not plan tests assuming that no errors will be found.
8. The probability of locating more errors in any one module is directly proportional to the number of errors already found in that module.

Let us look at each of these points.

1) DEFINE THE EXPECTED OUTPUT OR RESULT

More often than not, the tester approaches a test case without a set of predefined expected results. The danger lies in the tendency of the eye to see what it wants to see. Without knowing the expected result, erroneous output can easily be overlooked. This problem can be avoided by carefully pre-defining the expected results for each test case. It sounds obvious, but you would be surprised how many people miss this point while doing the self-assessment test.
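As a minimal sketch of this principle, the expected result can be written down next to each input before the test is run, so the comparison is mechanical rather than left to the eye. The function `apply_discount` and the values in `TEST_CASES` are hypothetical and serve only as an illustration.

```python
# Hypothetical function under test (an assumption, not from the original text).
def apply_discount(price: float, percent: float) -> float:
    """Return price reduced by percent, rounded to 2 decimal places."""
    return round(price * (1 - percent / 100), 2)


# Each case records its expected result BEFORE the test is executed.
TEST_CASES = [
    # (price, percent, expected)
    (100.00, 0, 100.00),
    (100.00, 25, 75.00),
    (19.99, 10, 17.99),
]


def run_cases():
    """Return (inputs, expected, actual) for every case whose output differs."""
    failures = []
    for price, percent, expected in TEST_CASES:
        actual = apply_discount(price, percent)
        if actual != expected:
            failures.append(((price, percent), expected, actual))
    return failures
```

An empty list from `run_cases()` means every output matched the result that was defined in advance.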

2) DO NOT TEST YOUR OWN PROGRAMS

Programming is a constructive activity. To suddenly reverse constructive thinking and begin the destructive process of testing is a difficult task. The publishing business has applied this idea for years: writers do not edit their own material, for the simple reason that the work is their baby and editing out pieces of it can be a very depressing job. The attitudinal problem is not the only consideration behind this principle. System errors can be caused by an incomplete or faulty understanding of the original design specifications, and the programmer is likely to carry these misunderstandings into the test phase.

3) INSPECT THE RESULTS OF EACH TEST COMPLETELY

As obvious as it sounds, this simple principle is often overlooked. In many cases, an after-the-fact review of earlier test results shows that errors were present but overlooked because no one took the time to study the results.
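One way to make a complete inspection routine, sketched here under assumptions, is to compare the whole result record against the expected record instead of checking a single field. The helper `diff_records` and the field names in the usage note are hypothetical.

```python
_MISSING = "<missing>"  # marker for a field that is absent on one side


def diff_records(expected: dict, actual: dict) -> dict:
    """Return {field: (expected_value, actual_value)} for every differing field."""
    differences = {}
    for field in sorted(set(expected) | set(actual)):
        want = expected.get(field, _MISSING)
        got = actual.get(field, _MISSING)
        if want != got:
            differences[field] = (want, got)
    return differences
```

For example, a test that checks only `total` would pass on an output whose `currency` is wrong or which carries an extra `debug` field, while `diff_records` reports both.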

4) INCLUDE TEST CASES FOR INVALID OR UNEXPECTED CONDITIONS

Programs already in production often produce errors when used in some new or novel fashion. This stems from the natural tendency to concentrate on valid and expected input conditions during a testing cycle. When we also test with invalid or unexpected input conditions, the error detection rate is likely to increase significantly.
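The sketch below illustrates the idea with a hypothetical `parse_age` function: alongside a valid input, the test list deliberately contains empty, non-numeric, and out-of-range values. The function, its 0 to 150 range, and the inputs are assumptions chosen for the example.

```python
def parse_age(text: str) -> int:
    """Parse an age from text; raise ValueError for anything outside 0..150."""
    value = int(text.strip())  # int() raises ValueError on non-integer text
    if not 0 <= value <= 150:
        raise ValueError(f"age out of range: {value}")
    return value


# Inputs the program should refuse, written down as test cases.
INVALID_INPUTS = ["", "abc", "-1", "151", "12.5", "1e3"]


def rejected(text: str) -> bool:
    """Return True if parse_age refuses the input with ValueError."""
    try:
        parse_age(text)
    except ValueError:
        return True
    return False
```

A valid call such as `parse_age(" 42 ")` should still succeed, while every entry in `INVALID_INPUTS` should be rejected.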

5) TEST THE PROGRAM TO SEE IF IT DOES WHAT IT IS NOT SUPPOSED TO DO AS WELL AS WHAT IT IS SUPPOSED TO DO

It is not enough to check whether the test produced the expected output. New systems, and especially new modifications, often produce unintended side effects such as unwanted disk files or destroyed records. A thorough examination of data structures, reports, and other output can often show that a program is doing what it is not supposed to do and therefore still contains errors.
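A rough sketch of checking for one kind of side effect, unwanted disk files, is shown below. It runs the code in a fresh temporary directory and reports any file that was created but not expected. The functions `export_report` and `unexpected_files` are hypothetical names introduced for this illustration.

```python
import os
import tempfile


def export_report(directory: str, lines: list) -> str:
    """Hypothetical function under test: writes report.txt and returns its path."""
    path = os.path.join(directory, "report.txt")
    with open(path, "w", encoding="utf-8") as handle:
        handle.write("\n".join(lines))
    return path


def unexpected_files(func, allowed: set) -> set:
    """Run func(directory) in a fresh temp directory.

    Return the names of entries it created that are not in `allowed`.
    """
    with tempfile.TemporaryDirectory() as workdir:
        func(workdir)
        return set(os.listdir(workdir)) - allowed
```

Calling `unexpected_files(lambda d: export_report(d, ["a", "b"]), {"report.txt"})` should return an empty set; a version of the function that also left a scratch file behind would be caught even though its report was correct.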

6) AVOID DISPOSABLE TEST CASES UNLESS THE PROGRAM ITSELF IS DISPOSABLE

Test cases should be documented so they can be reproduced. With a non-structured approach to testing, test cases are often created on the fly. The tester sits at a terminal, generates test input, and submits it to the program. The test data simply disappears when the test is complete. Reproducible test cases become important later when a program is revised, either because bugs were discovered or because the user requests new options. In such cases, the revised program can be put through the same extensive tests that were used for the original version. Without saved test cases, the temptation is strong to test only the logic handled by the modifications. This is unsatisfactory because changes which fix one problem often create a host of other, apparently unrelated, problems elsewhere in the system. As considerable time and effort are spent in creating meaningful tests, tests which are not documented or cannot be duplicated should be avoided.
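One simple way to keep test cases from being disposable, sketched here under assumptions, is to store inputs and expected outputs as data and replay them against every revision of the program. The function `slugify`, the JSON layout, and the name `run_saved_cases` are hypothetical; in practice the cases would usually live in a file under version control rather than in a string.

```python
import json


def slugify(title: str) -> str:
    """Hypothetical function under test: lower-case words joined by hyphens."""
    return "-".join(title.lower().split())


# Saved test cases: documented once, replayable after every change.
SAVED_CASES_JSON = """
[
  {"input": "Hello World", "expected": "hello-world"},
  {"input": "  Spaces   everywhere ", "expected": "spaces-everywhere"},
  {"input": "", "expected": ""}
]
"""


def run_saved_cases(func, cases_json: str) -> list:
    """Replay every saved case against func; return the cases that fail."""
    failures = []
    for case in json.loads(cases_json):
        actual = func(case["input"])
        if actual != case["expected"]:
            failures.append({**case, "actual": actual})
    return failures
```

After a modification, `run_saved_cases(slugify, SAVED_CASES_JSON)` reruns the full set, not just the cases that touch the changed logic.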

7) DO NOT PLAN TESTS ASSUMING THAT NO ERRORS WILL BE FOUND

Testing should be viewed as a process that locates errors and not one that proves the program works correctly. The reasons for this were discussed earlier.

8) THE PROBABILITY OF LOCATING MORE ERRORS IN ANY ONE MODULE IS DIRECTLY PROPORTIONAL TO THE NUMBER OF ERRORS ALREADY FOUND IN THAT MODULE

At first glance, this may seem surprising. However, it has been shown that if certain modules or sections of code contain a high number of errors, subsequent testing will discover more errors in that particular section than in other sections. Consider a program that consists of two modules, A and B. If testing reveals five errors in module A and only one error in module B, module A will likely display more errors than module B in any subsequent tests. Why is this so? There is no definitive explanation, but it is probably because the error-prone module is inherently complex or was badly programmed. By identifying the most bug-prone modules, the tester can concentrate efforts there and achieve a higher rate of error detection than if all portions of the system were given equal attention. Extensive testing of the system after modifications have been made is referred to as regression testing.
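The two-module example can be turned into a small planning aid. The sketch below splits a testing budget in proportion to the errors already found in each module. Proportional allocation is only one simple heuristic assumed for illustration, and `allocate_effort` and the 12-hour budget are hypothetical.

```python
def allocate_effort(defects_found: dict, total_hours: float) -> dict:
    """Split total_hours across modules in proportion to defects already found.

    Falls back to an equal split when no defects have been found yet.
    """
    if not defects_found:
        return {}
    total_defects = sum(defects_found.values())
    if total_defects == 0:
        share = total_hours / len(defects_found)
        return {module: share for module in defects_found}
    return {
        module: total_hours * count / total_defects
        for module, count in defects_found.items()
    }
```

With five errors found in module A and one in module B, `allocate_effort({"A": 5, "B": 1}, 12)` assigns 10 hours to A and 2 hours to B.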

An outsourced software testing process that does not follow the basic principles can land the tester in trouble. Haphazard testing results in chaos and incorrect judgement. Here are some software testing rules which, when followed, can lead to overall success:

1) Definition of expected results

Testers often do not define their expected results or work out the most likely outcome in advance. Without this, errors in the output can escape the tester's eye. Pre-defining the expected results for each test case solves this problem. Though it might sound trivial, many testers are known to skip this step and eventually land in no-man's land.

2) Don't test your own programmes

Programming is a creative process, while testing is a negative, destructive one. Setting creativity aside to focus on the destructive process of testing is a difficult switch. The analogy with writers applies here too: writers do not edit their own material but have it edited by an editor.

Additionally, the programmer may have a faulty understanding of the software design. In that case, the programmer would most likely test the application with the same faulty outlook, leading to incorrect results.

3) Check each and every test thoroughly

In many cases, a hindsight review of earlier test results shows that errors were present but overlooked because the results were not studied thoroughly.

4) Test cases should be used for unexpected conditions

Programmes already in production often produce a great many errors when used in a new way, because most testers test applications using valid and expected input conditions only. Using invalid or unexpected input conditions greatly increases the chances of detecting errors.

5) Test the programme for what it is expected to do and what it is not

Besides testing whether the programme gives the desired results, software testing services should also test for unintended side effects, such as unwanted disk files or corrupted records. Examining data structures and reports can reveal that a programme does what it is not supposed to do even though it also does what it is expected to do.

6) Software testing should be done with the intent of finding errors

Software testing services should treat testing as a process that locates errors, not one that aims to show the programme is perfect. The probability of locating more errors is directly proportional to the number of errors already found.

You might also like

- S2/S11 Software update instructionDocument55 pagesS2/S11 Software update instructionEliezer0% (1)

- ISTQB Chapter 1 NotesDocument8 pagesISTQB Chapter 1 NotesViolet100% (3)

- IV-I SOFTWARE TESTING Complete NotesDocument107 pagesIV-I SOFTWARE TESTING Complete NotesAaron GurramNo ratings yet

- Heidenhain ManualDocument725 pagesHeidenhain ManualRaulEstal100% (2)

- W3Schools Quiz Results - PythonDocument14 pagesW3Schools Quiz Results - PythonRicardo Deferrari0% (1)

- Linux Kernel Debugging Going Beyond Printk MessagesDocument65 pagesLinux Kernel Debugging Going Beyond Printk MessagesالمتمردفكرياNo ratings yet

- SW TestingDocument8 pagesSW TestingSantosh KumarNo ratings yet

- Software TestingDocument13 pagesSoftware TestingNishaNo ratings yet

- UNIT-4: Software TestingDocument157 pagesUNIT-4: Software TestingAkash PalNo ratings yet

- Software TestingDocument312 pagesSoftware TestingAman SaxenaNo ratings yet

- SW TestingDocument112 pagesSW Testingyours_raj143No ratings yet

- Software Testing: A Guide to Testing Mobile Apps, Websites, and GamesFrom EverandSoftware Testing: A Guide to Testing Mobile Apps, Websites, and GamesRating: 4.5 out of 5 stars4.5/5 (3)

- Software Testing Notes PDFDocument193 pagesSoftware Testing Notes PDFzameerhasan123No ratings yet

- The Definition of TestingDocument4 pagesThe Definition of Testingapi-3738458100% (1)

- 10 Commandments of Software TestingDocument5 pages10 Commandments of Software Testingvickram_chandu9526No ratings yet

- Introduction to Software TestingDocument4 pagesIntroduction to Software TestingDorin DamașcanNo ratings yet

- Testing Shows Presence of Defects: But Cannot Prove That There Are No DefectsDocument4 pagesTesting Shows Presence of Defects: But Cannot Prove That There Are No Defectsvenkat manojNo ratings yet

- ISTQB Certified Tester Foundation Level FundamentalsDocument39 pagesISTQB Certified Tester Foundation Level FundamentalsBrandon SteelNo ratings yet

- Systematic Software TestingDocument17 pagesSystematic Software TestingShay GinsbourgNo ratings yet

- 301 Software EngineeringDocument39 pages301 Software EngineeringItachi UchihaNo ratings yet

- Functional Testing Interview QuestionsDocument18 pagesFunctional Testing Interview QuestionsPrashant GuptaNo ratings yet

- Software Testing in 4 StepsDocument29 pagesSoftware Testing in 4 StepsPulkit JainNo ratings yet

- Software Testing: Time: 2 HRS.) (Marks: 75Document19 pagesSoftware Testing: Time: 2 HRS.) (Marks: 75PraWin KharateNo ratings yet

- 7 Principles of TestingDocument1 page7 Principles of TestingThirunavukkarasuKumarasamyNo ratings yet

- Software Testing Fundamentals and PrinciplesDocument6 pagesSoftware Testing Fundamentals and PrinciplesamirNo ratings yet

- Report For Cse 343 LpuDocument25 pagesReport For Cse 343 LpuShoaib AkhterNo ratings yet

- Lecture 3.2.5 Software Testing PrinciplesDocument12 pagesLecture 3.2.5 Software Testing PrinciplesSahil GoyalNo ratings yet

- Software TestingDocument19 pagesSoftware Testingalister collinsNo ratings yet

- Testing vs. Inspection Research PaperDocument21 pagesTesting vs. Inspection Research PaperNaila KhanNo ratings yet

- Unit 1Document44 pagesUnit 1niharika niharikaNo ratings yet

- SQT Testing Seven PrinciplesDocument3 pagesSQT Testing Seven PrinciplesRomaz RiazNo ratings yet

- CCIS 102: Bachelor of Science in Information SystemDocument13 pagesCCIS 102: Bachelor of Science in Information SystemRogelio Briñas Dacara Jr.No ratings yet

- Chapter 2 - 7 Principles of Software TestingDocument5 pagesChapter 2 - 7 Principles of Software TestingAamir AfzalNo ratings yet

- Testing: Testing Is A Process Used To Help Identify The Correctness, Completeness and Quality ofDocument25 pagesTesting: Testing Is A Process Used To Help Identify The Correctness, Completeness and Quality ofdfdfdNo ratings yet

- Software Testing Is A Process Used To Identify The CorrectnessDocument3 pagesSoftware Testing Is A Process Used To Identify The CorrectnesscmarrivadaNo ratings yet

- General Testing PrinciplesDocument21 pagesGeneral Testing PrinciplesDhirajlal ChawadaNo ratings yet

- Software TestingDocument8 pagesSoftware TestingNnosie Khumalo Lwandile SaezoNo ratings yet

- ST - Module 1: What Is Software TestingDocument30 pagesST - Module 1: What Is Software Testing1NH20CS182 Rakshith M PNo ratings yet

- ST - Lecture3 - Principles of TestingDocument20 pagesST - Lecture3 - Principles of TestingZain ShahidNo ratings yet

- Software testing principles for finding bugs earlyDocument2 pagesSoftware testing principles for finding bugs earlyLarry HumphreyNo ratings yet

- 1-Introduction To TestingDocument8 pages1-Introduction To Testingkoushik reddyNo ratings yet

- PrinciplesDocument3 pagesPrinciplesGaurangNo ratings yet

- Test Generation Using Model Checking: Hung Tran 931470260 Prof Marsha ChechikDocument13 pagesTest Generation Using Model Checking: Hung Tran 931470260 Prof Marsha ChechikquigonjeffNo ratings yet

- Fundamentals of Testing: Delivery, Service or Result 2. Exhaustive Testing Is Impossible!Document4 pagesFundamentals of Testing: Delivery, Service or Result 2. Exhaustive Testing Is Impossible!Ajay kumarreddyNo ratings yet

- Software Testing Implementation ReportDocument14 pagesSoftware Testing Implementation ReportaddssdfaNo ratings yet

- STM NotesDocument131 pagesSTM NotesGeeta DesaiNo ratings yet

- SoftwareTesting Lect 1.1Document188 pagesSoftwareTesting Lect 1.1Kunal AhireNo ratings yet

- ISTQTB BH0 010 Study MaterialDocument68 pagesISTQTB BH0 010 Study MaterialiuliaibNo ratings yet

- What Is Testing?: Unit-IDocument34 pagesWhat Is Testing?: Unit-Ijagannadhv varmaNo ratings yet

- Testing Overview Purpose of TestingDocument7 pagesTesting Overview Purpose of TestingAmit RathiNo ratings yet

- White Paper on Defect Clustering and Pesticide ParadoxDocument10 pagesWhite Paper on Defect Clustering and Pesticide ParadoxShruti0805No ratings yet

- Software Testing: BooksDocument18 pagesSoftware Testing: BooksusitggsipuNo ratings yet

- Testing FactsDocument5 pagesTesting FactsVasuGNo ratings yet

- Unit 1Document19 pagesUnit 1DineshNo ratings yet

- 7 Principles of Software TestingDocument2 pages7 Principles of Software Testingsangram tangdeNo ratings yet

- Software Testing PrinciplesDocument2 pagesSoftware Testing PrinciplesRISHIT VARMANo ratings yet

- ISTQB_M1Document25 pagesISTQB_M1jkaugust12No ratings yet

- 12.3 Testing & MaintenanceDocument9 pages12.3 Testing & MaintenancechexNo ratings yet

- Purpose of Testing and Key Testing ConceptsDocument21 pagesPurpose of Testing and Key Testing ConceptsG Naga MamathaNo ratings yet

- Software Testing Methodologies ExplainedDocument32 pagesSoftware Testing Methodologies ExplainedSiva Teja NadellaNo ratings yet

- Software Testing MethodsDocument29 pagesSoftware Testing Methodsazlan09-1No ratings yet

- Testing Taxonomy and TypesDocument28 pagesTesting Taxonomy and TypesTanish PantNo ratings yet

- Testing PresentationDocument39 pagesTesting PresentationMiguel Angel AguilarNo ratings yet

- Lany BookDocument1 pageLany BookBahadur AsherNo ratings yet

- Sparkling Kaleidoscope Card - Technique Friday With ElsDocument3 pagesSparkling Kaleidoscope Card - Technique Friday With ElsBahadur AsherNo ratings yet

- Sparkling Kaleidoscope Card - Technique Friday With ElsDocument3 pagesSparkling Kaleidoscope Card - Technique Friday With ElsBahadur AsherNo ratings yet

- Stand Up Valentine's Day Card - Technique FridayDocument3 pagesStand Up Valentine's Day Card - Technique FridayBahadur AsherNo ratings yet

- HTC One ME User Guide WWE PDFDocument203 pagesHTC One ME User Guide WWE PDFBahadur AsherNo ratings yet

- Luxe 2016 Arabic English Diary CatalogueDocument32 pagesLuxe 2016 Arabic English Diary CatalogueBahadur AsherNo ratings yet

- Paper Size DetailsDocument3 pagesPaper Size DetailsBahadur AsherNo ratings yet

- Popmake EagleDocument1 pagePopmake EagleMohd FaizNo ratings yet

- Luxe Trend CatalogueDocument36 pagesLuxe Trend CatalogueBahadur AsherNo ratings yet

- New Floor Displays For Clairefontaine NotebooksDocument1 pageNew Floor Displays For Clairefontaine NotebooksBahadur AsherNo ratings yet

- Fiorentina 2015Document52 pagesFiorentina 2015Bahadur AsherNo ratings yet

- Luxe Trend CatalogueDocument36 pagesLuxe Trend CatalogueBahadur AsherNo ratings yet

- Travelogue JournalsDocument1 pageTravelogue JournalsBahadur AsherNo ratings yet

- Oxford Product Guide 2015Document11 pagesOxford Product Guide 2015Bahadur AsherNo ratings yet

- Exaclair2016 31Document1 pageExaclair2016 31Bahadur AsherNo ratings yet

- Shuter HomeDocument44 pagesShuter HomeBahadur AsherNo ratings yet

- Collection "1951": Staplebound NotebooksDocument1 pageCollection "1951": Staplebound NotebooksBahadur AsherNo ratings yet

- New Photoshop CC Feature'sDocument18 pagesNew Photoshop CC Feature'sBahadur AsherNo ratings yet

- Exaclair2016 28Document1 pageExaclair2016 28Bahadur AsherNo ratings yet

- Storyboard Format Ver 1Document7 pagesStoryboard Format Ver 1Bahadur AsherNo ratings yet

- Storyboard Format Ver 1Document7 pagesStoryboard Format Ver 1Bahadur AsherNo ratings yet

- Classic Notebooks: Staplebound - Sold Assorted ColorsDocument1 pageClassic Notebooks: Staplebound - Sold Assorted ColorsBahadur AsherNo ratings yet

- Photoshop TutorialDocument16 pagesPhotoshop TutorialBahadur AsherNo ratings yet

- Understanding Depth of FieldDocument4 pagesUnderstanding Depth of FieldBahadur AsherNo ratings yet

- Software TestingDocument3 pagesSoftware TestingBahadur AsherNo ratings yet

- New Photoshop CC FeaturesDocument2 pagesNew Photoshop CC FeaturesBahadur AsherNo ratings yet

- 2010 - Advertising in Contemporary SocietyDocument59 pages2010 - Advertising in Contemporary SocietyBahadur AsherNo ratings yet

- An Introduction To Consumer BehaviorDocument18 pagesAn Introduction To Consumer BehaviorBahadur AsherNo ratings yet

- Pattern in IllustratorDocument9 pagesPattern in IllustratorBahadur AsherNo ratings yet

- ISSD User-Guide PDFDocument4 pagesISSD User-Guide PDFRavindu JayalathNo ratings yet

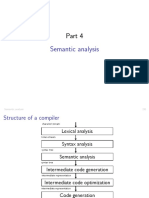

- Semantic Analysis 231Document53 pagesSemantic Analysis 231Nenu NeneNo ratings yet

- Tutorials Advance Computer Architecture Level 300 Coltech2 - 121547Document4 pagesTutorials Advance Computer Architecture Level 300 Coltech2 - 121547JoeybirlemNo ratings yet

- W.E.F Academic Year 2012-13 G' SchemeDocument5 pagesW.E.F Academic Year 2012-13 G' Schemeavani292005No ratings yet

- Bypass AVDocument1 pageBypass AVlczancanellaNo ratings yet

- Industrie 4.0 Enabling Technologies(2015)Document6 pagesIndustrie 4.0 Enabling Technologies(2015)lazarNo ratings yet

- Electrical Engineering Department ACADEMIC SESSION: - Dec40073-Database SystemDocument26 pagesElectrical Engineering Department ACADEMIC SESSION: - Dec40073-Database Systemiskandardaniel0063No ratings yet

- Bilal Khan ResumeDocument1 pageBilal Khan ResumenajmussaqibmahboobiNo ratings yet

- Attivo Networks-Deception MythsDocument9 pagesAttivo Networks-Deception MythsOscar Gopro SEISNo ratings yet

- Z-Notation Abstract Model SpecificationDocument59 pagesZ-Notation Abstract Model Specificationmohsin aliNo ratings yet

- Simulation of TP3 FAOT piéce 2 design studyDocument4 pagesSimulation of TP3 FAOT piéce 2 design studyGOUAL SaraNo ratings yet

- If, Functions, Variables.Document23 pagesIf, Functions, Variables.Luke LippincottNo ratings yet

- Checklist M.ticketDocument6 pagesChecklist M.ticketRenatas ParojusNo ratings yet

- Theme Installation StepsDocument10 pagesTheme Installation Steps.No ratings yet

- CSE325 - A1 - G2 - Lecture2 NotesDocument39 pagesCSE325 - A1 - G2 - Lecture2 Notesislam2059No ratings yet

- Netgate 7100 1U BASE Pfsense+ Security Gateway ApplianceDocument9 pagesNetgate 7100 1U BASE Pfsense+ Security Gateway ApplianceJuntosCrecemosNo ratings yet

- Vmware Workspace One: Solution OverviewDocument3 pagesVmware Workspace One: Solution Overviewapurb tewaryNo ratings yet

- ArchiMate Part 2 - 2Document8 pagesArchiMate Part 2 - 2saiaymanbensadiNo ratings yet

- Website Assignment RDocument4 pagesWebsite Assignment Rapi-568464744No ratings yet

- LogDocument46 pagesLogstefani putriNo ratings yet

- 1st Year Sample ProjectDocument56 pages1st Year Sample ProjectshraddhaNo ratings yet

- Changing A Worker S Legal Employer in HCM CloudDocument58 pagesChanging A Worker S Legal Employer in HCM CloudsachanpreetiNo ratings yet

- Rmprepusb: V2.1.647 (Please See Website For)Document28 pagesRmprepusb: V2.1.647 (Please See Website For)Frank WestmeyerNo ratings yet

- That's Right, Well Done! You Sure Know How To Keep Your Online Identity SafeDocument5 pagesThat's Right, Well Done! You Sure Know How To Keep Your Online Identity SafeNatalia ShammaNo ratings yet

- Java MCQ'SDocument161 pagesJava MCQ'Schivukula Vamshi KrishnaNo ratings yet

- UCP FOIT OS Mutex SemaphoresDocument9 pagesUCP FOIT OS Mutex SemaphoresInfinite LoopNo ratings yet