
ENGRD 241 Lecture Notes

Section 3: Systems of Equations

SYSTEMS OF EQUATIONS (C&C 4th PT 3 [Chs. 9-12])

[A] {x} = {c}

Systems of Equations (C&C 4th, PT 3.1, p. 217)

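Determine x1, x2, x3, ..., xn such that

$$
\begin{aligned}
f_1(x_1, x_2, x_3, \ldots, x_n) &= 0\\
f_2(x_1, x_2, x_3, \ldots, x_n) &= 0\\
&\;\;\vdots\\
f_n(x_1, x_2, x_3, \ldots, x_n) &= 0
\end{aligned}
$$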

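Linear Algebraic Equations

$$
\begin{aligned}
a_{11}x_1 + a_{12}x_2 + a_{13}x_3 + \cdots + a_{1n}x_n &= b_1\\
a_{21}x_1 + a_{22}x_2 + a_{23}x_3 + \cdots + a_{2n}x_n &= b_2\\
&\;\;\vdots\\
a_{n1}x_1 + a_{n2}x_2 + a_{n3}x_3 + \cdots + a_{nn}x_n &= b_n
\end{aligned}
$$

where all aij's and bi's are constants.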

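In matrix form (C&C 4th, PT3.2.1, p. 220 and PT3.2.3, p. 226):

$$
\underbrace{\begin{bmatrix}
a_{11} & a_{12} & a_{13} & \cdots & a_{1n}\\
a_{21} & a_{22} & a_{23} & \cdots & a_{2n}\\
a_{31} & a_{32} & a_{33} & \cdots & a_{3n}\\
\vdots & \vdots & \vdots & \ddots & \vdots\\
a_{n1} & a_{n2} & a_{n3} & \cdots & a_{nn}
\end{bmatrix}}_{n \times n}
\underbrace{\begin{Bmatrix} x_1\\ x_2\\ x_3\\ \vdots\\ x_n \end{Bmatrix}}_{n \times 1}
=
\underbrace{\begin{Bmatrix} b_1\\ b_2\\ b_3\\ \vdots\\ b_n \end{Bmatrix}}_{n \times 1}
$$

or simply

[A]{x} = {b}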

Applications of Solutions of Linear Systems of Equations

- Steady-state reactor in Chemical Engineering (12.1)
- Static structural analysis (12.2)
- Potentials and currents in electrical networks (12.3)
- Spring-mass models (12.4)
- Solution of partial differential equations (29-32)
  - Heat and fluid flow
  - Pollutants in environment
  - Weather
  - Stress analysis [material science]
- Inverting matrices
- Multivariate Newton-Raphson for nonlinear systems of equations (9.6)
- Developing approximations:
  - Least squares (17)
  - Spline functions (18.6)
- Statistics


Categories of Solution Methods:

1. Direct

- Gaussian Elimination

- Gauss-Jordan

- LU Decomposition (Doolittle and Cholesky)

2. Iterative

- Jacobi Method

- Gauss-Seidel Method

- Conjugate Gradient Method

Outline of our studies and corresponding sections in C&C:

- Gaussian Elimination
  - Algorithm (9.2)
  - Problems (9.3)
  - Pivoting (9.4.2)
  - Scaling (9.4.3)
  - Operation Counts (flops) (9.2)
- LU Decomposition (10.1)
- Matrix inversion (Gauss-Jordan) (10.2)
- Banded Systems (11.1)
- Study of Linear Systems
  - Ill-conditioned systems of equations (9.3)
  - Error Analysis, Matrix norms (10.3)
- Iterative solutions (Gauss-Seidel) (11.2)
- Examples (12)

Naive Gaussian Elimination (C&C 4th, 9.2, p. 238)

Solve [A]{x} = {b} for {x}, with [A] an n x n matrix and {x}, {b} ∈ Rⁿ.

Basic Approach:

1. Forward Elimination: subtract multiples of each row from the rows below it to zero out the entries below the diagonal, so that [A] is converted to an upper triangular matrix [U].

2. Back Substitution: beginning with [U], back-substitute to solve for the xi's.

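Details:

1. Forward Elimination (Row Manipulation)

a. Form the augmented matrix [A | b]:

$$
\begin{bmatrix}
a_{11} & a_{12} & \cdots & a_{1n}\\
a_{21} & a_{22} & \cdots & a_{2n}\\
\vdots & \vdots & \ddots & \vdots\\
a_{n1} & a_{n2} & \cdots & a_{nn}
\end{bmatrix}
\begin{Bmatrix} x_1\\ x_2\\ \vdots\\ x_n \end{Bmatrix}
=
\begin{Bmatrix} b_1\\ b_2\\ \vdots\\ b_n \end{Bmatrix}
\quad\Longrightarrow\quad
\left[\begin{array}{cccc|c}
a_{11} & a_{12} & \cdots & a_{1n} & b_1\\
a_{21} & a_{22} & \cdots & a_{2n} & b_2\\
\vdots & \vdots & \ddots & \vdots & \vdots\\
a_{n1} & a_{n2} & \cdots & a_{nn} & b_n
\end{array}\right]
$$

b. By elementary row manipulations, reduce [A | b] to [U | b'], where [U] is an upper triangular matrix:

    DO i = 1, n-1
      DO k = i+1, n
        Row(k) = Row(k) - (aki/aii) * Row(i)
      ENDDO
    ENDDO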
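The DO-loops of step b can be sketched in Python as follows. This is a minimal illustration, assuming [A] and {b} are given as Python lists; the name forward_eliminate is hypothetical. Like the naive algorithm it does no pivoting, so a zero pivot aii raises ZeroDivisionError.

```python
def forward_eliminate(A, b):
    """Naive forward elimination: reduce [A | b] to [U | b'] (no pivoting)."""
    n = len(A)
    U = [[float(v) for v in row] for row in A]   # work on copies of the inputs
    bp = [float(v) for v in b]
    for i in range(n - 1):                       # pivot row i
        for k in range(i + 1, n):                # every row below the pivot
            factor = U[k][i] / U[i][i]           # a_ki / a_ii
            for j in range(i, n):                # Row(k) = Row(k) - factor * Row(i)
                U[k][j] -= factor * U[i][j]
            bp[k] -= factor * bp[i]
    return U, bp
```

Because only row operations of this type are used, the product of the diagonal of [U] equals det[A] (58630 for the example system used later in this section).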


Naive Gaussian Elimination (cont'd):

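2. Back Substitution

Solve the upper triangular system [U]{x} = {b'}:

$$
\begin{bmatrix}
u_{11} & u_{12} & u_{13} & \cdots & u_{1n}\\
0 & u_{22} & u_{23} & \cdots & u_{2n}\\
0 & 0 & u_{33} & \cdots & u_{3n}\\
\vdots & \vdots & & \ddots & \vdots\\
0 & 0 & 0 & \cdots & u_{nn}
\end{bmatrix}
\begin{Bmatrix} x_1\\ x_2\\ x_3\\ \vdots\\ x_n \end{Bmatrix}
=
\begin{Bmatrix} b'_1\\ b'_2\\ b'_3\\ \vdots\\ b'_n \end{Bmatrix}
$$

    xn = b'n / unn
    DO i = n-1, 1, -1
      xi = ( b'i - SUM[j = i+1 to n] uij * xj ) / uii
    ENDDO

that is,

$$
x_n = \frac{b'_n}{u_{nn}}, \qquad
x_i = \frac{b'_i - \sum_{j=i+1}^{n} u_{ij}\,x_j}{u_{ii}}, \quad i = n-1, n-2, \ldots, 1
$$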
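A matching Python sketch of the back-substitution loop, assuming [U] is a list of rows whose entries below the diagonal are zero (the name back_substitute is hypothetical):

```python
def back_substitute(U, bp):
    """Solve the upper triangular system [U]{x} = {b'} by back substitution."""
    n = len(U)
    x = [0.0] * n
    x[n - 1] = bp[n - 1] / U[n - 1][n - 1]
    for i in range(n - 2, -1, -1):               # i = n-1, ..., 1 in the notes' 1-based numbering
        s = sum(U[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (bp[i] - s) / U[i][i]
    return x
```

Each xi needs only the already-computed x(i+1), ..., xn, which is why the loop runs backwards.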

Naive Gaussian Elimination: Example

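Consider the system of equations

$$
\begin{bmatrix} 50 & 1 & 2\\ 1 & 40 & 4\\ 2 & 6 & 30 \end{bmatrix}
\begin{Bmatrix} x_1\\ x_2\\ x_3 \end{Bmatrix}
=
\begin{Bmatrix} 1\\ 2\\ 3 \end{Bmatrix}
$$

To 2 significant figures, the exact solution is:

$$
\{x\}_{\text{true}} = \begin{Bmatrix} 0.016\\ 0.041\\ 0.091 \end{Bmatrix}
$$

We will use 2-significant-digit arithmetic with rounding.

Start with the augmented matrix:

$$
\left[\begin{array}{ccc|c} 50 & 1 & 2 & 1\\ 1 & 40 & 4 & 2\\ 2 & 6 & 30 & 3 \end{array}\right]
$$

Multiply the first row by -1/50 and add it to the second row. Multiply the first row by -2/50 and add it to the third row:

$$
\left[\begin{array}{ccc|c} 50 & 1 & 2 & 1\\ 0 & 40 & 4 & 2\\ 0 & 6 & 30 & 3 \end{array}\right]
$$

Multiply the second row by -6/40 and add it to the third row:

$$
\left[\begin{array}{ccc|c} 50 & 1 & 2 & 1\\ 0 & 40 & 4 & 2\\ 0 & 0 & 29 & 2.7 \end{array}\right]
$$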


Naive Gaussian Elimination: Example (cont'd):

Now backsolve:

$$
\left[\begin{array}{ccc|c} 50 & 1 & 2 & 1\\ 0 & 40 & 4 & 2\\ 0 & 0 & 29 & 2.7 \end{array}\right]
$$

x3 = 2.7/29 = 0.093 (vs. 0.091, εt = 2.2%)

x2 = (2 - 4x3)/40 = 0.040 (vs. 0.041, εt = 2.5%)

x1 = (1 - 2x3 - x2)/50 = 0.016 (vs. 0.016, εt = 0%)

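Consider an alternative solution interchanging rows:

$$
\left[\begin{array}{ccc|c} 2 & 6 & 30 & 3\\ 50 & 1 & 2 & 1\\ 1 & 40 & 4 & 2 \end{array}\right]
$$

After forward elimination, we obtain:

$$
\left[\begin{array}{ccc|c} 2 & 6 & 30 & 3\\ 0 & -150 & -750 & -74\\ 0 & 0 & -200 & -19 \end{array}\right]
$$

Now backsolve:

x3 = 0.095 (vs. 0.091, εt = 4.4%)

x2 = 0.020 (vs. 0.041, εt = 50%)

x1 = 0.000 (vs. 0.016, εt = 100%)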

Apparently, the order of the equations matters!

What happened?

When we used 50x1 + 1x2 + 2x3 = 1 to solve for x1, there was little change in the other equations.

When we used 2x1 + 6x2 + 30x3 = 3 to solve for x1, it made BIG changes in the other equations. Some coefficients of the other equations were lost!

The equation 2x1 + 6x2 + 30x3 = 3 has little to do with x1; it mainly determines x3.

As a result, we obtained LARGE numbers in the table, significant round-off error occurred, and information was lost.

If the scaling factors |aji / aii| are ≤ 1, then the impact of round-off errors is diminished.
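The two hand calculations can be replayed with a short Python sketch that rounds the result of every arithmetic operation to two significant digits. The helper names fl and naive_gauss_rounded are illustrative only. The rounding convention (round the float's shortest decimal form, ties away from zero) is an assumption. Under this convention the reordered system reproduces x = (0.000, 0.020, 0.095) exactly. For the original ordering, the sketch returns x1 = 0.015 rather than the 0.016 obtained by hand, so the last digit can depend on exactly where rounding is applied.

```python
from decimal import Decimal, ROUND_HALF_UP


def fl(x, digits=2):
    """Round x to `digits` significant decimal digits (ties rounded away from zero)."""
    if x == 0:
        return 0.0
    d = Decimal(repr(x))                      # shortest decimal string that round-trips to x
    quantum = Decimal(1).scaleb(d.adjusted() - digits + 1)
    return float(d.quantize(quantum, rounding=ROUND_HALF_UP))


def naive_gauss_rounded(A, b, digits=2):
    """Naive Gaussian elimination in which every operation is rounded by fl()."""
    n = len(A)
    A = [[float(v) for v in row] for row in A]
    b = [float(v) for v in b]
    for k in range(n - 1):                    # forward elimination, no pivoting
        for i in range(k + 1, n):
            factor = fl(A[i][k] / A[k][k], digits)
            A[i][k] = 0.0
            for j in range(k + 1, n):
                A[i][j] = fl(A[i][j] - fl(factor * A[k][j], digits), digits)
            b[i] = fl(b[i] - fl(factor * b[k], digits), digits)
    x = [0.0] * n
    for i in range(n - 1, -1, -1):            # back substitution
        s = b[i]
        for j in range(i + 1, n):
            s = fl(s - fl(A[i][j] * x[j], digits), digits)
        x[i] = fl(s / A[i][i], digits)
    return x


print(naive_gauss_rounded([[50, 1, 2], [1, 40, 4], [2, 6, 30]], [1, 2, 3]))  # [0.015, 0.04, 0.093]
print(naive_gauss_rounded([[2, 6, 30], [50, 1, 2], [1, 40, 4]], [3, 1, 2]))  # [0.0, 0.02, 0.095]
```

Passing a larger `digits` value shows the gap between the two orderings shrinking as more significant figures are carried.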


Naive Gaussian Elimination: Example (cont'd)

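Effect of diagonal dominance:

As a first approximation, the roots are:

$$ x_i \approx \frac{b_i}{a_{ii}} $$

Consider the previous examples, for which

$$ \{x\}_{\text{true}} = \begin{Bmatrix} 0.016\\ 0.041\\ 0.091 \end{Bmatrix} $$

Original ordering:

$$
\left[\begin{array}{ccc|c} 50 & 1 & 2 & 1\\ 1 & 40 & 4 & 2\\ 2 & 6 & 30 & 3 \end{array}\right]
\qquad
\begin{aligned} x_1 &\approx 1/50 = 0.02\\ x_2 &\approx 2/40 = 0.05\\ x_3 &\approx 3/30 = 0.10 \end{aligned}
$$

Interchanged rows:

$$
\left[\begin{array}{ccc|c} 2 & 6 & 30 & 3\\ 50 & 1 & 2 & 1\\ 1 & 40 & 4 & 2 \end{array}\right]
\qquad
\begin{aligned} x_1 &\approx 3/2 = 1.50\\ x_2 &\approx 1/1 = 1.00\\ x_3 &\approx 2/4 = 0.50 \end{aligned}
$$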

Goals

1. Best accuracy (i.e. minimize error)

2. Parsimony (i.e. minimize effort)

Possible Problems:

A. A zero diagonal term

B. Many floating point operations (flops) cause numerical precision problems and propagation of errors.

C. The system may be ill-conditioned, i.e., det[A] ≈ 0

D. No solution or an infinite number of solutions, i.e., det[A] = 0

Possible Remedies:

A. Carry more significant figures (double precision)

B. Pivot when the diagonal is close to zero.

C. Scale to reduce round-off error.

PIVOTING (C&C 4th, 9.4.2, p. 250)

A. Row pivoting (Partial Pivoting): used in any good routine. At each step i, find

max_k |aki| for k = i, i+1, ..., n

and move the corresponding row to the pivot position. This

(i) avoids a zero aii,

(ii) keeps the numbers in the table small and minimizes round-off,

(iii) uses an equation with a large |aki| to find xi, which maintains diagonal dominance.


Row pivoting does not affect the order of the variables.

Included in any good Gaussian Elimination routine.
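A minimal sketch of Gaussian elimination with row (partial) pivoting, assuming list-of-rows input; the function name is hypothetical. Swapping whole rows of [A | b] leaves the order of the unknowns unchanged, as noted above.

```python
def gauss_partial_pivot(A, b):
    """Gaussian elimination with row (partial) pivoting, then back substitution."""
    n = len(A)
    M = [[float(v) for v in row] + [float(bi)] for row, bi in zip(A, b)]  # [A | b]
    for i in range(n):
        # pivot: the row p >= i with the largest |a_pi|
        p = max(range(i, n), key=lambda k: abs(M[k][i]))
        if M[p][i] == 0.0:
            raise ValueError("matrix is singular")
        M[i], M[p] = M[p], M[i]                   # swap rows; unknowns keep their order
        for k in range(i + 1, n):
            factor = M[k][i] / M[i][i]
            for j in range(i, n + 1):
                M[k][j] -= factor * M[i][j]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x
```

Given the badly ordered system from the example, the sketch first moves the 50x1 + x2 + 2x3 = 1 row to the top, so the order in which the equations were supplied no longer matters.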

PIVOTING (cont'd)

B. Column pivoting: reorder the remaining variables xj, j = i, ..., n, so as to bring the largest |aij| into the pivot position.

Column pivoting changes the order of the unknowns, xi, and thus leads to complexity in the algorithm. It is not usually done.

C. Complete or Full pivoting

Performing both row and column pivoting.

How to fool pivoting: an example in which one equation has very large coefficients.

Multiply the third equation by 100. After pivoting we obtain:

$$
\left[\begin{array}{ccc|c} 200 & 600 & 3000 & 300\\ 50 & 1 & 2 & 1\\ 1 & 40 & 4 & 2 \end{array}\right]
$$

Forward elimination yields:

$$
\left[\begin{array}{ccc|c} 200 & 600 & 3000 & 300\\ 0 & -150 & -750 & -74\\ 0 & 0 & -200 & -19 \end{array}\right]
$$

Backsolution yields:

x3 = 0.095 (vs. 0.091, εt = 4.4%)

x2 = 0.020 (vs. 0.041, εt = 50%)

x1 = 0.000 (vs. 0.016, εt = 100%)

The order of the rows is still poor!!

SCALING (C&C 4th, 9.4.3, p. 252)

A. Express all equations in comparable units so that all elements of [A] are about the same size.

B. If that fails, and max_j |aij| varies widely across rows, replace each row by:

$$ a_{ij} \leftarrow \frac{a_{ij}}{\max_j |a_{ij}|} $$

This makes the largest-magnitude coefficient of each equation equal to +1 or -1.

NOTE: Routines generally do not scale automatically; scaling can cause round-off error too!

SOLUTIONS:

- Don't actually scale; instead, use the scaling factors implicitly (without modifying [A]) to determine what pivoting is necessary.
- Scale only by powers of 2: no round-off or division is required.
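The first solution can be sketched as scaled partial pivoting. The scale factors si = max_j |aij| only decide which row becomes the pivot, and [A] is never rescaled. This is an illustrative Python version, assuming no all-zero rows; the function name and the returned row order are hypothetical additions for inspection.

```python
def gauss_scaled_pivot(A, b):
    """Gaussian elimination with scaled (implicit) partial pivoting.

    Returns (x, order), where order lists the original row indices
    in the order they were used as pivot rows.
    """
    n = len(A)
    M = [[float(v) for v in row] + [float(bi)] for row, bi in zip(A, b)]
    s = [max(abs(v) for v in row[:n]) for row in M]   # scale factors, never applied to [A]
    order = list(range(n))
    for i in range(n):
        p = max(range(i, n), key=lambda k: abs(M[k][i]) / s[k])
        if M[p][i] == 0.0:
            raise ValueError("matrix is singular")
        M[i], M[p] = M[p], M[i]                       # rows and their scale factors move together
        s[i], s[p] = s[p], s[i]
        order[i], order[p] = order[p], order[i]
        for k in range(i + 1, n):
            factor = M[k][i] / M[i][i]
            for j in range(i, n + 1):
                M[k][j] -= factor * M[i][j]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x, order
```

On the "multiply the third equation by 100" example, the scaled ratios for the first column are 200/3000, 50/50 and 1/40. The 50x1 + x2 + 2x3 = 1 row is therefore chosen first, which plain partial pivoting failed to do.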

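How to fool scaling:

A poor choice of units can undermine the value of scaling. Begin with our original example:

$$
\left[\begin{array}{ccc|c} 50 & 1 & 2 & 1\\ 1 & 40 & 4 & 2\\ 2 & 6 & 30 & 3 \end{array}\right]
$$

If the units of x1 were expressed in g instead of mg, the matrix might read:

$$
\left[\begin{array}{ccc|c} 50000 & 1 & 2 & 1\\ 1000 & 40 & 4 & 2\\ 2000 & 6 & 30 & 3 \end{array}\right]
$$

Scaling yields:

$$
\left[\begin{array}{ccc|c} 1 & 0.00002 & 0.00004 & 0.00002\\ 1 & 0.04 & 0.004 & 0.002\\ 1 & 0.003 & 0.015 & 0.0015 \end{array}\right]
$$

Which equation is used to determine x1? After scaling, all three rows have a leading coefficient of 1, so scaling gives no guidance.

Why bother to scale?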

OPERATION COUNTING (C&C 4th, 9.2.1, p.242)

Number of multiplies and divides often determines the CPU time.

One floating point multiply/divide and associated adds/subtracts is called

a FLOP: FLoating point OPeration.

Some Useful Identities for Counting FLOPS:

$$
\begin{aligned}
&1)\quad \sum_{i=1}^{m} c\,f(i) = c\sum_{i=1}^{m} f(i)\\
&2)\quad \sum_{i=1}^{m} \bigl[f(i) + g(i)\bigr] = \sum_{i=1}^{m} f(i) + \sum_{i=1}^{m} g(i)\\
&3)\quad \sum_{i=1}^{m} 1 = 1 + 1 + \cdots + 1 = m\\
&4)\quad \sum_{i=k}^{m} 1 = m - k + 1\\
&5)\quad \sum_{i=1}^{m} i = 1 + 2 + 3 + \cdots + m = \frac{m(m+1)}{2} = \frac{m^2}{2} + O(m)\\
&6)\quad \sum_{i=1}^{m} i^2 = 1^2 + 2^2 + \cdots + m^2 = \frac{m(m+1)(2m+1)}{6} = \frac{m^3}{3} + O(m^2)
\end{aligned}
$$

O(mⁿ) means "terms of order mⁿ and lower."

Simple Examples of Operation Counting:

1. DO i = 1 to n
       Y(i) = X(i)/i - 1
   ENDDO

In each iteration X(i)/i - 1 represents 1 FLOP because it requires one division and one subtraction.

The DO loop extends over i from 1 to n iterations:

$$ \sum_{i=1}^{n} 1 = n \ \text{FLOPS} $$

2. DO i = 1 to n
       Y(i) = X(i)*X(i) + 1
       DO j = i to n
           Z(j) = [ Y(j) / X(i) ] * Y(j) + X(i)
       ENDDO
   ENDDO

With nested loops, always start from the innermost loop. [Y(j)/X(i)] * Y(j) + X(i) represents 2 FLOPS:

$$ \sum_{j=i}^{n} 2 = 2\sum_{j=i}^{n} 1 = 2(n - i + 1) \ \text{FLOPS} $$

For the outer i-loop, X(i)*X(i) + 1 represents 1 FLOP:

$$
\begin{aligned}
\sum_{i=1}^{n}\bigl[1 + 2(n - i + 1)\bigr] &= (3 + 2n)\sum_{i=1}^{n} 1 - 2\sum_{i=1}^{n} i = (3 + 2n)n - 2\,\frac{n(n+1)}{2}\\
&= 3n + 2n^2 - n^2 - n = n^2 + 2n = n^2 + O(n)
\end{aligned}
$$
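The count can be confirmed by instrumenting the loops. This Python sketch (hypothetical function name) simply adds up the FLOPs per statement, using the notes' convention that one multiply/divide plus its associated add/subtract is one FLOP:

```python
def example2_flops(n):
    """Count FLOPs in example 2 (one multiply/divide + its add/subtract = 1 FLOP)."""
    flops = 0
    for i in range(1, n + 1):
        flops += 1                  # Y(i) = X(i)*X(i) + 1
        for j in range(i, n + 1):
            flops += 2              # Z(j) = [Y(j)/X(i)]*Y(j) + X(i)
    return flops
```

For every n the result agrees with the closed form n² + 2n derived above.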


Operation Counting for Gaussian Elimination

Forward Elimination:

    DO k = 1 to n-1
      DO i = k+1 to n
        r = A(i,k)/A(k,k)
        DO j = k+1 to n
          A(i,j) = A(i,j) - r*A(k,j)
        ENDDO
        C(i) = C(i) - r*C(k)
      ENDDO
    ENDDO

Back Substitution:

    X(n) = C(n)/A(n,n)
    DO i = n-1 to 1 by -1
      SUM = 0
      DO j = i+1 to n
        SUM = SUM + A(i,j)*X(j)
      ENDDO
      X(i) = [C(i) - SUM]/A(i,i)
    ENDDO

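Forward Elimination

Inner loop:

$$ \sum_{j=k+1}^{n} 1 = n - (k+1) + 1 = n - k $$

Second loop:

$$
\sum_{i=k+1}^{n} \bigl[2 + (n - k)\bigr] = \bigl[(2 + n) - k\bigr](n - k) = (n^2 + 2n) - 2(n+1)k + k^2
$$

Outer loop:

$$
\begin{aligned}
\sum_{k=1}^{n-1} \bigl[(n^2 + 2n) - 2(n+1)k + k^2\bigr]
&= (n^2 + 2n)\sum_{k=1}^{n-1} 1 - 2(n+1)\sum_{k=1}^{n-1} k + \sum_{k=1}^{n-1} k^2\\
&= (n^2 + 2n)(n-1) - 2(n+1)\frac{(n-1)n}{2} + \frac{(n-1)n(2n-1)}{6}\\
&= \frac{n^3}{3} + O(n^2)
\end{aligned}
$$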


Back Substitution

Inner loop:

$$ \sum_{j=i+1}^{n} 1 = n - (i+1) + 1 = n - i $$

Outer loop:

$$
\sum_{i=1}^{n-1} \bigl[1 + (n - i)\bigr] = (1 + n)\sum_{i=1}^{n-1} 1 - \sum_{i=1}^{n-1} i = (1 + n)(n - 1) - \frac{(n-1)n}{2} = \frac{n^2}{2} + O(n)
$$

Total flops = Forward Elimination + Back Substitution

$$ = \frac{n^3}{3} + O(n^2) + \frac{n^2}{2} + O(n) = \frac{n^3}{3} + O(n^2) $$

To convert (A, b) to (U, b') requires n³/3 flops, plus terms of order n² and smaller.

To back solve requires 1 + 2 + 3 + 4 + ... + n = n(n+1)/2 flops.

Grand Total: the entire effort requires n³/3 + O(n²) flops altogether.
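The same bookkeeping can be checked numerically for the Gaussian-elimination loops. This Python sketch (hypothetical function name) counts one FLOP per multiply/divide statement, exactly as in the derivation. For speed, the inner j-loop is added in one step as its n - k iterations.

```python
def gauss_flops(n):
    """Count FLOPs in the forward-elimination and back-substitution loops above."""
    forward = 0
    for k in range(1, n):
        for i in range(k + 1, n + 1):
            forward += 1            # r = A(i,k)/A(k,k)
            forward += n - k        # j-loop: A(i,j) = A(i,j) - r*A(k,j), j = k+1..n
            forward += 1            # C(i) = C(i) - r*C(k)
    back = 1                        # X(n) = C(n)/A(n,n)
    for i in range(n - 1, 0, -1):
        back += n - i               # j-loop: SUM = SUM + A(i,j)*X(j), j = i+1..n
        back += 1                   # X(i) = [C(i) - SUM]/A(i,i)
    return forward, back


for n in (10, 100, 500):
    f, b = gauss_flops(n)
    print(n, f, b, f / (n ** 3 / 3))  # ratio approaches 1 as n grows
```

The forward count matches the exact expression before the O(n²) simplification, and the back-substitution count is n(n+1)/2.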

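Gauss-Jordan Elimination (C&C 4th 9.7, p. 259)

Diagonalization by both forward and backward elimination in each column:

$$
\begin{bmatrix}
a_{11} & a_{12} & a_{13} & \cdots & a_{1n}\\
a_{21} & a_{22} & a_{23} & \cdots & a_{2n}\\
a_{31} & a_{32} & a_{33} & \cdots & a_{3n}\\
\vdots & \vdots & \vdots & \ddots & \vdots\\
a_{n1} & a_{n2} & a_{n3} & \cdots & a_{nn}
\end{bmatrix}
\begin{Bmatrix} x_1\\ x_2\\ x_3\\ \vdots\\ x_n \end{Bmatrix}
=
\begin{Bmatrix} b_1\\ b_2\\ b_3\\ \vdots\\ b_n \end{Bmatrix}
$$

Perform both backward and forward elimination until:

$$
\begin{bmatrix}
1 & 0 & 0 & \cdots & 0\\
0 & 1 & 0 & \cdots & 0\\
0 & 0 & 1 & \cdots & 0\\
\vdots & \vdots & \vdots & \ddots & \vdots\\
0 & 0 & 0 & \cdots & 1
\end{bmatrix}
\begin{Bmatrix} x_1\\ x_2\\ x_3\\ \vdots\\ x_n \end{Bmatrix}
=
\begin{Bmatrix} b^{*}_1\\ b^{*}_2\\ b^{*}_3\\ \vdots\\ b^{*}_n \end{Bmatrix}
$$

so the transformed right-hand side {b*} is the solution {x}.

Operation count: Gauss-Jordan requires n³/2 + O(n²) flops, whereas Gauss elimination only requires n³/3 + O(n²) flops. Gauss-Jordan is therefore 50% slower than Gauss elimination.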
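A minimal Python sketch of Gauss-Jordan elimination without pivoting, assuming list-of-rows input (the function name is hypothetical). Each pivot row is normalized and then used to eliminate the column both above and below the diagonal. The final column of the augmented matrix then holds {x} directly, with no back substitution.

```python
def gauss_jordan_solve(A, b):
    """Gauss-Jordan elimination without pivoting: reduce [A | b] to [I | x]."""
    n = len(A)
    M = [[float(v) for v in row] + [float(bi)] for row, bi in zip(A, b)]
    for i in range(n):
        pivot = M[i][i]                            # assumed nonzero (no pivoting)
        M[i] = [v / pivot for v in M[i]]           # normalize the pivot row
        for k in range(n):                         # eliminate above AND below the diagonal
            if k != i:
                factor = M[k][i]
                M[k] = [vk - factor * vi for vk, vi in zip(M[k], M[i])]
    return [M[i][n] for i in range(n)]
```

Eliminating every row in every column is what costs the extra n³/6 flops compared with Gauss elimination.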


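Gauss-Jordan Elimination, Example (two-digit arithmetic):

$$
\left[\begin{array}{ccc|c} 50 & 1 & 2 & 1\\ 1 & 40 & 4 & 2\\ 2 & 6 & 30 & 3 \end{array}\right]
\Rightarrow
\left[\begin{array}{ccc|c} 1 & 0.02 & 0.04 & 0.02\\ 0 & 40 & 4 & 2\\ 0 & 6 & 30 & 3 \end{array}\right]
\Rightarrow
\left[\begin{array}{ccc|c} 1 & 0 & 0.038 & 0.019\\ 0 & 1 & 0.1 & 0.05\\ 0 & 0 & 29 & 2.7 \end{array}\right]
\Rightarrow
\left[\begin{array}{ccc|c} 1 & 0 & 0 & 0.015\\ 0 & 1 & 0 & 0.041\\ 0 & 0 & 1 & 0.093 \end{array}\right]
$$

x1 = 0.015 (vs. 0.016, εt = 6.3%)

x2 = 0.041 (vs. 0.041, εt = 0%)

x3 = 0.093 (vs. 0.091, εt = 2.2%)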

Gauss-Jordan Matrix Inversion

The solution of [A]{x} = {b} is

{x} = [A]⁻¹{b}

where [A]⁻¹ is the inverse matrix of [A].

Consider:

[A][A]⁻¹ = [I]

1) Create the augmented matrix [A | I].

2) Apply Gauss-Jordan elimination: ==> [I | A⁻¹]
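Steps 1) and 2) amount to running Gauss-Jordan on n right-hand sides at once, namely the columns of [I]. This is a minimal Python sketch without pivoting, assuming list-of-rows input; the function name is hypothetical.

```python
def gauss_jordan_inverse(A):
    """Invert [A] by Gauss-Jordan elimination on [A | I] (no pivoting)."""
    n = len(A)
    M = [[float(v) for v in row] + [1.0 if j == i else 0.0 for j in range(n)]
         for i, row in enumerate(A)]              # augmented matrix [A | I]
    for i in range(n):
        pivot = M[i][i]                           # assumed nonzero
        M[i] = [v / pivot for v in M[i]]
        for k in range(n):
            if k != i:
                factor = M[k][i]
                M[k] = [vk - factor * vi for vk, vi in zip(M[k], M[i])]
    return [row[n:] for row in M]                 # right-hand block is [A]^-1
```

In double precision, [A][A]⁻¹ reproduces [I] to about 1e-15. That makes a useful comparison with the 2-digit hand calculation that follows.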

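Gauss-Jordan Matrix Inversion (with 2-digit arithmetic):

$$
[A \mid I] =
\left[\begin{array}{ccc|ccc} 50 & 1 & 2 & 1 & 0 & 0\\ 1 & 40 & 4 & 0 & 1 & 0\\ 2 & 6 & 30 & 0 & 0 & 1 \end{array}\right]
$$

$$
=
\left[\begin{array}{ccc|ccc} 1 & 0.02 & 0.04 & 0.02 & 0 & 0\\ 0 & 40 & 4 & -0.02 & 1 & 0\\ 0 & 6 & 30 & -0.04 & 0 & 1 \end{array}\right]
$$

$$
=
\left[\begin{array}{ccc|ccc} 1 & 0 & 0.038 & 0.02 & -0.0005 & 0\\ 0 & 1 & 0.1 & -0.0005 & 0.025 & 0\\ 0 & 0 & 28 & -0.037 & -0.15 & 1 \end{array}\right]
$$

$$
=
\left[\begin{array}{ccc|ccc} 1 & 0 & 0 & 0.020 & -0.00029 & -0.0014\\ 0 & 1 & 0 & -0.00037 & 0.026 & -0.0036\\ 0 & 0 & 1 & -0.0013 & -0.0054 & 0.036 \end{array}\right]
$$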

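The right-hand block is the MATRIX INVERSE [A]⁻¹.

CHECK: [A][A]⁻¹ = [I]?

$$
\begin{bmatrix} 50 & 1 & 2\\ 1 & 40 & 4\\ 2 & 6 & 30 \end{bmatrix}
\begin{bmatrix} 0.020 & -0.00029 & -0.0014\\ -0.00037 & 0.026 & -0.0036\\ -0.0013 & -0.0054 & 0.036 \end{bmatrix}
=
\begin{bmatrix} 0.997 & 0.001 & -0.002\\ 0.000 & 1.018 & -0.001\\ -0.001 & -0.007 & 1.056 \end{bmatrix}
$$

[A]⁻¹{c} = {x}:

$$
\begin{bmatrix} 0.020 & -0.00029 & -0.0014\\ -0.00037 & 0.026 & -0.0036\\ -0.0013 & -0.0054 & 0.036 \end{bmatrix}
\begin{Bmatrix} 1\\ 2\\ 3 \end{Bmatrix}
=
\begin{Bmatrix} 0.015\\ 0.041\\ 0.096 \end{Bmatrix}
\qquad
\text{Gaussian elimination: } \begin{Bmatrix} 0.016\\ 0.040\\ 0.093 \end{Bmatrix}
\qquad
\{x\}_{\text{true}} = \begin{Bmatrix} 0.016\\ 0.041\\ 0.091 \end{Bmatrix}
$$

LU Decomposition (C&C 4th, 10.1, p. 264)

A practical, direct method for equation solving, especially when several right-hand sides (r.h.s.'s) are needed and/or when not all r.h.s.'s are known at the start of the problem.

Examples of applications:

- time stepping: A u(t+1) = B u(t) + …
- several load cases: A xi = bi
- inversion: A⁻¹
- iterative improvement

Don't compute A⁻¹:

- Requires n³ flops to get A⁻¹
- Numerically unstable calculation

LU computations:

- Store the calculations needed to repeat "Gaussian" elimination on a new r.h.s. vector {c}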


LU Decomposition (See C&C Figure 10.1)

[A]{x} = {b}

a) decomposition: [A] ==> [L][U]

b) forward substitution: [L]{d} = {b}, i.e., [L | b] ==> {d}

c) backward substitution: [U]{x} = {d} ==> {x}

Doolittle LU Decomposition (See C&C 4th, 10.1.2-3, p. 266)

[U] is just the upper triangular matrix from Gaussian elimination:

[A | b] → [U | b']

[L] has ones on the diagonal (i.e., it is a "unit lower triangular matrix" and therefore can be denoted [L1]). Its elements below the diagonal are just the factors used to scale rows when doing Gaussian elimination, e.g., li1 = ai1 / a11 for i = 2, 3, ..., n:

$$
\begin{bmatrix}
a_{11} & a_{12} & a_{13} & \cdots & a_{1n}\\
a_{21} & a_{22} & a_{23} & \cdots & a_{2n}\\
a_{31} & a_{32} & a_{33} & \cdots & a_{3n}\\
\vdots & \vdots & \vdots & \ddots & \vdots\\
a_{n1} & a_{n2} & a_{n3} & \cdots & a_{nn}
\end{bmatrix}
=
\begin{bmatrix}
1 & 0 & 0 & \cdots & 0\\
l_{21} & 1 & 0 & \cdots & 0\\
l_{31} & l_{32} & 1 & \cdots & 0\\
\vdots & \vdots & \vdots & \ddots & \vdots\\
l_{n1} & l_{n2} & l_{n3} & \cdots & 1
\end{bmatrix}
\begin{bmatrix}
u_{11} & u_{12} & u_{13} & \cdots & u_{1n}\\
0 & u_{22} & u_{23} & \cdots & u_{2n}\\
0 & 0 & u_{33} & \cdots & u_{3n}\\
\vdots & \vdots & \vdots & \ddots & \vdots\\
0 & 0 & 0 & \cdots & u_{nn}
\end{bmatrix}
$$

Then [L1]{d} = {b}

===> [U]{x} = {d}, in which {d} is synonymous with {b'}.

Basic Approach (C&C Figure 10.1):

Consider [A]{x} = {b}.

a) Use a Gauss-type "decomposition" of [A] into [L][U] (n³/3 flops). [A]{x} = {b} becomes [L][U]{x} = {b}.

b) First solve [L]{d} = {b} for {d} by forward substitution (n²/2 flops).

c) Then solve [U]{x} = {d} for {x} by back substitution (n²/2 flops).
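A minimal Python sketch of Doolittle decomposition followed by the two triangular solves, assuming list-of-rows input and no pivoting; the names doolittle and lu_solve are hypothetical. The factorization is done once, and each new right-hand side then costs only the two n²/2 substitutions.

```python
def doolittle(A):
    """Doolittle LU without pivoting: [A] = [L1][U], with 1's on the diagonal of L1."""
    n = len(A)
    L = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    U = [[float(v) for v in row] for row in A]
    for k in range(n - 1):
        for i in range(k + 1, n):
            L[i][k] = U[i][k] / U[k][k]          # keep the elimination factor l_ik
            U[i][k] = 0.0
            for j in range(k + 1, n):
                U[i][j] -= L[i][k] * U[k][j]
    return L, U


def lu_solve(L, U, b):
    """Forward substitution [L]{d} = {b}, then back substitution [U]{x} = {d}."""
    n = len(L)
    d = [0.0] * n
    for i in range(n):
        d[i] = b[i] - sum(L[i][j] * d[j] for j in range(i))       # l_ii = 1: no division
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (d[i] - sum(U[i][j] * x[j] for j in range(i + 1, n))) / U[i][i]
    return x
```

Usage: after `L, U = doolittle(A)`, the same factors serve every load case, e.g. `lu_solve(L, U, [1, 2, 3])` and `lu_solve(L, U, [1, 0, 0])`. The latter gives the first column of [A]⁻¹.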


LU Decomposition Variations

- Doolittle: [L1][U], general [A] (C&C 10.1.2)
- Crout: [L][U1], general [A] (C&C 10.1.4)
- Cholesky: [L][L]ᵀ, positive definite symmetric [A] (C&C 11.1.2)

Cholesky works only for positive definite symmetric matrices.

Doolittle versus Crout:

- Doolittle just stores the Gaussian elimination factors, whereas Crout uses a different series of calculations (see C&C Section 10.1.4).
- Both decompose [A] into [L] and [U] in n³/3 FLOPS.
- The diagonal of 1's is in a different location (see above).
- Crout uses each element of [A] only once, so the same array can be used for [A] and [L\U1], saving computer memory! (The 1's of [U1] are not stored.)
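For the Cholesky variant, a minimal Python sketch of [A] = [L][L]ᵀ (the function name is hypothetical). It raises an error when a diagonal term would need the square root of a non-positive number, which is how a matrix that is not positive definite shows up in practice.

```python
import math


def cholesky(A):
    """Cholesky factorization [A] = [L][L]^T of a symmetric positive definite [A]."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                d = A[i][i] - s
                if d <= 0.0:
                    raise ValueError("matrix is not positive definite")
                L[i][i] = math.sqrt(d)
            else:
                L[i][j] = (A[i][j] - s) / L[j][j]
    return L
```

Only the lower triangle of [A] is read, and only one triangular factor is computed. This is the source of the n³/6 operation count for Cholesky, half that of LU.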

Matrix Inversion (C&C 4th, 10.2, p. 273)

First Rule: Don't do it (numerically unstable calculation).

If you really must:

1) Gaussian elimination: [A | I] → [U | B'] → A⁻¹

2) Gauss-Jordan: [A | I] → [I | A⁻¹]

Inversion will take n³ + O(n²) flops if one is careful about where the zeros are (taking advantage of the sparseness of the matrix).

Naive applications (without optimization) take 4n³/3 + O(n²) flops. For example, LU decomposition takes n³/3 + O(n²) flops. Forward and back substitution with each of the n unit vectors ei then takes 2n(n²/2) = n³ flops. Altogether:

$$ \frac{n^3}{3} + n^3 = \frac{4n^3}{3} + O(n^2) \ \text{flops} $$


FLOPS for Linear Algebraic Equations, [A]{x} = {b}:

    Gauss elimination (1 r.h.s.)            n³/3 + O(n²)
    Gauss-Jordan (1 r.h.s.)                 n³/2 + O(n²)    [50% more than Gauss elimination]
    LU decomposition                        n³/3 + O(n²)
    Each new LU right-hand side             n²
    Cholesky decomposition (symmetric A)    n³/6 + O(n²)    [half the FLOPs of Gauss elimination]
    Inversion (naive Gauss-Jordan)          4n³/3 + O(n²)
    Inversion (optimal Gauss-Jordan)        n³ + O(n²)
    Solution by Cramer's Rule               n!


Errors in Solutions to Systems of Linear Equations (C&C 4th, 10.3, p. 277)

Objective: Solve [A]{x} = {b}

Problem: Round-off errors may accumulate and even be exaggerated by the solution procedure. Errors

are often exaggerated if the system is ill-conditioned.

Possible remedies to minimize this effect:

1. pivoting

2. work in double precision

3. transform problem into an equivalent system of linear equations by scaling or equilibrating

Ill-conditioning

A system of equations is singular if det[A] = 0.

If a system of equations is nearly singular it is ill-conditioned.

Systems which are ill-conditioned are extremely sensitive to small changes in coefficients of [A] and

{b}. These systems are inherently sensitive to round-off errors.

Question: Can we develop a means for detecting these situations?

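Consider the graphical interpretation for a 2-equation system:

$$
\begin{bmatrix} a_{11} & a_{12}\\ a_{21} & a_{22} \end{bmatrix}
\begin{Bmatrix} x_1\\ x_2 \end{Bmatrix}
=
\begin{Bmatrix} b_1\\ b_2 \end{Bmatrix}
\qquad
\begin{aligned} &(1)\\ &(2) \end{aligned}
$$

We can plot the two linear equations on a graph of x1 vs. x2.

[Figure: the lines a11x1 + a12x2 = b1 and a21x1 + a22x2 = b2 plotted in the x1-x2 plane (intercepts b1/a11, b1/a12, b2/a22), with additional panels labeled "Uncertainty in x2".]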


Ways to detect ill-conditioning:

1. Calculate {x}; make a small change in [A] or {b} and determine effect on the new solution {x}.

2. After forward elimination, examine the diagonal of the upper triangular matrix. If aii << ajj, i.e., there is a relatively small value on the diagonal, this may indicate ill-conditioning.

3. Compare {x} computed in single precision with {x} computed in double precision.

4. Estimate the "condition number" of [A].

Substituting the calculated {x} into [A]{x} and checking this against {b} will unfortunately not always work! (See C&C Box 10.1.)

Norms and the Condition Number

We need a quantitative measure of ill-conditioning.

This measure will then directly reflect the possible magnitude of round-off effects.

To do this we need to understand norms.

Norm: a scalar measure of the magnitude of a matrix or vector (how "big" it is; not to be confused with the dimension of a matrix).

Vector Norms (C&C 4th, 10.3.1, pp. 278-280)

Scalar measure of the magnitude of a vector.

Here are some vector norms for n x 1 vectors {x} with typical elements xi. Each is in the general form of a p-norm, defined by the general relationship:

$$ \|x\|_p = \Bigl(\sum_{i=1}^{n} |x_i|^p\Bigr)^{1/p} $$

$$
\begin{aligned}
\text{1. Sum of the magnitudes:}\quad & \|x\|_1 = \sum_{i=1}^{n} |x_i|\\
\text{2. Magnitude of largest element:}\quad & \|x\|_\infty = \max_i |x_i|\\
\text{3. Length or Euclidean norm:}\quad & \|x\|_2 = \Bigl(\sum_{i=1}^{n} x_i^2\Bigr)^{1/2}
\end{aligned}
$$

Required properties of a vector norm:

1. ||x|| ≥ 0, and ||x|| = 0 if and only if {x} = 0
2. ||kx|| = |k| ||x||, where k is any scalar
3. ||x + y|| ≤ ||x|| + ||y|| (Triangle Inequality)

For the Euclidean vector norm we also have

4. |x · y| ≤ ||x|| ||y||

because the dot product (inner product) satisfies x · y = ||x|| ||y|| cos θxy.
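The three vector norms, and the general p-norm they come from, are one-liners in Python. The function names below are illustrative only.

```python
import math


def norm_1(x):
    """Sum of the magnitudes."""
    return sum(abs(v) for v in x)


def norm_2(x):
    """Euclidean length."""
    return math.sqrt(sum(v * v for v in x))


def norm_inf(x):
    """Magnitude of the largest element."""
    return max(abs(v) for v in x)


def norm_p(x, p):
    """General p-norm, valid as a norm for p >= 1."""
    return sum(abs(v) ** p for v in x) ** (1.0 / p)


x = [3.0, -4.0]
print(norm_1(x), norm_2(x), norm_inf(x))   # 7.0 5.0 4.0
```

For any vector, ||x||∞ ≤ ||x||2 ≤ ||x||1, so the choice of norm changes the numbers but not the qualitative picture.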


Matrix Norms (C&C 4th, Box 10.2, p. 279)

Scalar measure of the magnitude of a matrix.

Matrix norms corresponding to the vector norms above are defined by the general relationship:

$$ \|A\|_p = \max_{\|x\|_p = 1} \|A x\|_p $$

$$
\begin{aligned}
\text{1. Largest column sum:}\quad & \|A\|_1 = \max_j \sum_{i=1}^{n} |a_{ij}|\\
\text{2. Largest row sum:}\quad & \|A\|_\infty = \max_i \sum_{j=1}^{n} |a_{ij}|\\
\text{3. Spectral norm:}\quad & \|A\|_2 = (\mu_{\max})^{1/2}
\end{aligned}
$$

where μmax is the largest eigenvalue of [A]ᵀ[A].

If [A] is symmetric, (μmax)^(1/2) = |λ|max, the largest eigenvalue of [A] in magnitude.

Matrix Norms

For matrix norms to be useful we require that

0. ||Ax|| ≤ ||A|| ||x||

General properties of any matrix norm:

1. ||A|| ≥ 0, and ||A|| = 0 iff [A] = 0
2. ||kA|| = |k| ||A||, where k is any scalar
3. ||A + B|| ≤ ||A|| + ||B|| ("Triangle Inequality")
4. ||AB|| ≤ ||A|| ||B||

Why are norms important?

Norms permit us to express the accuracy of the solution {x} in terms of || x ||

Norms allow us to bound the magnitude of the product [A]{x} and the associated errors.
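The column-sum and row-sum norms need nothing more than absolute values and sums. This Python sketch (hypothetical names) leaves out the spectral norm, which requires an eigenvalue routine.

```python
def mat_norm_1(A):
    """Largest column sum of |a_ij|."""
    return max(sum(abs(row[j]) for row in A) for j in range(len(A[0])))


def mat_norm_inf(A):
    """Largest row sum of |a_ij|."""
    return max(sum(abs(v) for v in row) for row in A)


def mat_vec(A, x):
    """Matrix-vector product [A]{x}."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]


A = [[1.0, -2.0], [3.0, 4.0]]
print(mat_norm_1(A), mat_norm_inf(A))   # 6.0 7.0
```

With these, property 0 can be spot-checked: for any {x}, max|(Ax)i| never exceeds mat_norm_inf(A) times max|xi|.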


Digression: A Brief Introduction to the Eigenvalue Problem

For a square matrix [A], consider the vector {x} ≠ {0} and the scalar λ for which

[A]{x} = λ{x}

{x} is called an eigenvector and λ an eigenvalue of [A]. The problem of finding eigenvalues and eigenvectors of a matrix [A] is important in scientific and engineering applications, e.g., vibration problems and stability problems. See Chapra & Canale Chapter 27 for background and examples.

The above equation can be rewritten

([A] - λ[I]){x} = {0},

indicating that the matrix ([A] - λ[I]) is singular. The characteristic equation

det([A] - λ[I]) = 0

yields n real or complex roots, which are the eigenvalues λi, and corresponding real or complex eigenvectors {xi}. If

[A] is real and symmetric, the eigenvalues and eigenvectors are all real. In practice, one does not usually

use the determinant to solve the algebraic eigenvalue problem, and instead employs other algorithms

implemented in mathematical subroutine libraries.

Forward and Backward Error Analysis

Forward and backward error analysis can estimate the effect of truncation and roundoff errors on the

precision of a result. The two approaches are alternative views:

1. Forward (a priori) error analysis tries to trace the accumulation of error through each process of the

algorithm, comparing the calculated and exact values at every stage.

2. Backward (a posteriori) error analysis views the final solution as the exact solution to a perturbed

problem. One can consider how different the perturbed problem is from the original problem.

Here we use the condition number of a matrix [A] to specify the amount by which relative errors in [A]

and/or {b} due to input, truncation, and rounding can be amplified by the linear system in the

computation of {x}.


Error Analysis for [A]{x} = {b} for errors in {b}

Suppose the coefficients {b} are not precisely represented.

What might be the effect on the calculated {x + δx}?

Lemma: [A]{x} = {b} yields ||A|| ||x|| ≥ ||b||, or

$$ \frac{1}{\|x\|} \le \frac{\|A\|}{\|b\|} $$

Now an error in {b} yields a corresponding error in {x}:

[A]{x + δx} = {b + δb}

[A]{x} + [A]{δx} = {b} + {δb}

Subtracting [A]{x} = {b} yields:

[A]{δx} = {δb}  ==>  {δx} = [A]⁻¹{δb}

Taking norms and using the lemma:

$$ \frac{\|\delta x\|}{\|x\|} \le \|A\|\,\|A^{-1}\|\,\frac{\|\delta b\|}{\|b\|} $$

Define the condition number as

$$ \kappa = \operatorname{cond}[A] = \|A\|\,\|A^{-1}\| $$

If κ ≈ 1, or κ is small, the system is well-conditioned.

If κ >> 1, the system is ill-conditioned.

Note that

$$ 1 = \|I\| = \|A^{-1}A\| \le \|A^{-1}\|\,\|A\| = \kappa = \operatorname{cond}(A) $$

so κ is never less than 1.
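For a small dense matrix, κ can be computed directly from the definition. This Python sketch uses the row-sum (infinity) norm and a Gauss-Jordan inverse with partial pivoting; the names are hypothetical. It is illustrative only, and library routines estimate κ without forming [A]⁻¹.

```python
def inverse(A):
    """Gauss-Jordan inverse with partial pivoting (illustrative, dense)."""
    n = len(A)
    M = [[float(v) for v in row] + [1.0 if j == i else 0.0 for j in range(n)]
         for i, row in enumerate(A)]
    for i in range(n):
        p = max(range(i, n), key=lambda k: abs(M[k][i]))   # row pivoting
        M[i], M[p] = M[p], M[i]
        pivot = M[i][i]
        M[i] = [v / pivot for v in M[i]]
        for k in range(n):
            if k != i:
                factor = M[k][i]
                M[k] = [vk - factor * vi for vk, vi in zip(M[k], M[i])]
    return [row[n:] for row in M]


def mat_norm_inf(A):
    """Largest row sum of |a_ij|."""
    return max(sum(abs(v) for v in row) for row in A)


def cond_inf(A):
    """kappa = ||A|| * ||A^-1|| in the infinity norm."""
    return mat_norm_inf(A) * mat_norm_inf(inverse(A))


A = [[50, 1, 2], [1, 40, 4], [2, 6, 30]]
print(cond_inf(A))   # about 2.14: the example system is well-conditioned
```

The small κ shows that the poor results from the reordered example came from the elimination order, not from the system itself.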

Error Analysis of [A]{x} = {b} for errors in [A] (C&C 4th, 10.3.2, p. 280)

Coefficients in [A] are not precisely represented.

What might be the effect on the calculated {x + δx}?

[A + δA]{x + δx} = {b}

[A]{x} + [A]{δx} + [δA]{x + δx} = {b}

Subtracting [A]{x} = {b} yields:

[A]{δx} = -[δA]{x + δx}, or

{δx} = -[A]⁻¹[δA]{x + δx}

Taking norms and multiplying by ||A|| / ||A|| yields:

$$ \frac{\|\delta x\|}{\|x + \delta x\|} \le \|A\|\,\|A^{-1}\|\,\frac{\|\delta A\|}{\|A\|} = \kappa\,\frac{\|\delta A\|}{\|A\|} $$


Estimate of Loss of Significance:

Consider the possible impact of errors [δA] on the precision of {x}.

If ||δA||/||A|| ~ 10⁻ᵖ, then

$$ \frac{\|\delta x\|}{\|x + \delta x\|} \le \kappa\,\frac{\|\delta A\|}{\|A\|} $$

implies that if ||δx||/||x + δx|| ~ 10⁻ˢ, then 10⁻ˢ ≤ κ · 10⁻ᵖ, or, taking the log of both sides, one obtains:

$$ s \ge p - \log_{10}(\kappa) $$

log10(κ) is the loss in decimal precision; i.e., we start with p significant figures and end up with s significant figures. (This idea is expressed in words at the bottom of p. 280 of C&C.)

One does not necessarily need to find [A]⁻¹ to estimate κ = cond[A]; an estimate can be based on iteration with the inverse matrix using the LU decomposition. For example, if [A] is symmetric positive definite, then (in the 2-norm) κ = λmax/λmin. One can bound λmax by any matrix norm and calculate λmin using the LU decomposition of [A] and a method called inverse vector iteration.

Programs such as MATLAB have built-in functions to calculate κ = cond[A] or the reciprocal condition number 1/κ (cond and rcond).
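The rule s ≥ p - log10(κ) is easy to evaluate. In this small Python sketch (hypothetical function name), the example assumes that double precision carries roughly 16 significant digits.

```python
import math


def digits_remaining(p, kappa):
    """Estimate s = p - log10(kappa): significant digits left in {x}."""
    return p - math.log10(kappa)


print(digits_remaining(16, 1e10))   # 6.0: about 6 of roughly 16 digits survive
```

The same formula explains the hand calculations: with only p = 2 digits, even a modest κ leaves little room before the answer loses all significance.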

Iterative Solution Methods (C&C 4th, 11.2, p. 289)

Impetus for Iterative Schemes:

1. may be more rapid if the coefficient matrix is "sparse"

2. may be more economical with respect to memory

3. may also be applied to solve nonlinear systems

Disadvantages:

1. May not converge or may converge slowly

2. Not appropriate for all systems

Error bounds apply to solutions obtained by both direct and iterative methods because they address the specification of [A] and {b}.


Iterative Solution Methods

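Basic Mechanics:

Starting with

$$
\begin{aligned}
a_{11}x_1 + a_{12}x_2 + a_{13}x_3 + \cdots + a_{1n}x_n &= b_1\\
a_{21}x_1 + a_{22}x_2 + a_{23}x_3 + \cdots + a_{2n}x_n &= b_2\\
&\;\;\vdots\\
a_{n1}x_1 + a_{n2}x_2 + a_{n3}x_3 + \cdots + a_{nn}x_n &= b_n
\end{aligned}
$$

solve each equation for one variable:

$$
\begin{aligned}
x_1 &= \bigl[b_1 - (a_{12}x_2 + a_{13}x_3 + \cdots + a_{1n}x_n)\bigr]/a_{11}\\
x_2 &= \bigl[b_2 - (a_{21}x_1 + a_{23}x_3 + \cdots + a_{2n}x_n)\bigr]/a_{22}\\
x_3 &= \bigl[b_3 - (a_{31}x_1 + a_{32}x_2 + \cdots + a_{3n}x_n)\bigr]/a_{33}\\
&\;\;\vdots\\
x_n &= \bigl[b_n - (a_{n1}x_1 + a_{n2}x_2 + \cdots + a_{n,n-1}x_{n-1})\bigr]/a_{nn}
\end{aligned}
$$

Start with an initial estimate {x}⁰, substitute it into the right-hand side of all of the above equations, and generate a new approximation {x}¹.

This is a multivariate one-point iteration:

$$ \{x\}^{j+1} = \{g(\{x\}^{j})\} $$

Repeat until the maximum number of iterations is reached or:

$$ \left|x_i^{j+1} - x_i^{j}\right| \le \varepsilon \left|x_i^{j+1}\right| \quad \text{for all } i $$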

Matrix Derivation

To solve [A]{x} = {b}, separate [A] into [A] = [Lo] + [D] + [Uo], where

[D] = diagonal(aii)

[Lo] = lower triangular with zeros on the diagonal

[Uo] = upper triangular with zeros on the diagonal

Rewrite the system:

([Lo] + [D] + [Uo]){x} = {b}

Iterate:

$$ [D]\{x\}^{j+1} = \{b\} - ([L_o] + [U_o])\{x\}^{j} $$

which is effectively:

$$ \{x\}^{j+1} = [D]^{-1}\bigl(\{b\} - ([L_o] + [U_o])\{x\}^{j}\bigr) $$

Iterations converge if:

$$ \bigl\|[D]^{-1}([L_o] + [U_o])\bigr\| < 1 $$

(A sufficient condition is that the system is diagonally dominant.)


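This iterative method is called the Jacobi Method.

Final form:

$$ \{x\}^{j+1} = [D]^{-1}\bigl(\{b\} - ([L_o] + [U_o])\{x\}^{j}\bigr) $$

or, written out more fully:

$$
\begin{aligned}
x_1^{j+1} &= \bigl(b_1 - a_{12}x_2^{j} - a_{13}x_3^{j} - \cdots - a_{1n}x_n^{j}\bigr)/a_{11}\\
x_2^{j+1} &= \bigl(b_2 - a_{21}x_1^{j} - a_{23}x_3^{j} - \cdots - a_{2n}x_n^{j}\bigr)/a_{22}\\
x_3^{j+1} &= \bigl(b_3 - a_{31}x_1^{j} - a_{32}x_2^{j} - \cdots - a_{3n}x_n^{j}\bigr)/a_{33}\\
&\;\;\vdots\\
x_n^{j+1} &= \bigl(b_n - a_{n1}x_1^{j} - a_{n2}x_2^{j} - \cdots - a_{n,n-1}x_{n-1}^{j}\bigr)/a_{nn}
\end{aligned}
$$

Note that, although the new estimate of x1 was known, we did not use it to calculate the new x2.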
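A minimal Python sketch of the Jacobi iteration (hypothetical function name, list-of-rows input). The stopping test is an assumed norm-based variant of the relative criterion above: the largest change must not exceed tol times the largest |xi|. This avoids stalling when some xi is zero.

```python
def jacobi(A, b, x0=None, tol=1e-10, max_iter=500):
    """Jacobi iteration: every new x_i is computed from the previous iterate only."""
    n = len(A)
    x = [float(v) for v in x0] if x0 is not None else [0.0] * n
    for it in range(1, max_iter + 1):
        x_new = [(b[i] - sum(A[i][j] * x[j] for j in range(n) if j != i)) / A[i][i]
                 for i in range(n)]
        change = max(abs(xn - xo) for xn, xo in zip(x_new, x))
        x = x_new
        if change <= tol * max(abs(v) for v in x):    # relative stopping test (assumed form)
            return x, it
    raise RuntimeError("Jacobi iteration did not converge")
```

Usage: `x, iterations = jacobi([[50, 1, 2], [1, 40, 4], [2, 6, 30]], [1, 2, 3])` converges to the exact solution because that matrix is diagonally dominant.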

Iterative Solution Methods -- Gauss-Seidel (C&C 4th, 11.2, p. 289)

In most cases, using the newest values on the right-hand side of the equations will provide better estimates of the next value. If this is done, then we are using the Gauss-Seidel Method:

$$ ([L_o] + [D])\{x\}^{j+1} = \{b\} - [U_o]\{x\}^{j} $$

or explicitly:

$$
\begin{aligned}
x_1^{j+1} &= \bigl(b_1 - a_{12}x_2^{j} - a_{13}x_3^{j} - \cdots - a_{1n}x_n^{j}\bigr)/a_{11}\\
x_2^{j+1} &= \bigl(b_2 - a_{21}x_1^{j+1} - a_{23}x_3^{j} - \cdots - a_{2n}x_n^{j}\bigr)/a_{22}\\
x_3^{j+1} &= \bigl(b_3 - a_{31}x_1^{j+1} - a_{32}x_2^{j+1} - \cdots - a_{3n}x_n^{j}\bigr)/a_{33}\\
&\;\;\vdots\\
x_n^{j+1} &= \bigl(b_n - a_{n1}x_1^{j+1} - a_{n2}x_2^{j+1} - \cdots - a_{n,n-1}x_{n-1}^{j+1}\bigr)/a_{nn}
\end{aligned}
$$

If both methods converge, Gauss-Seidel usually converges faster than Jacobi.

Why use Jacobi?

Because the n equations can be separated into n independent tasks, Jacobi is very well suited to computers with parallel processors.
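The Gauss-Seidel version differs from the Jacobi sketch only in updating {x} in place, so each new xi is used immediately. This is again a hypothetical Python sketch with the same assumed norm-based stopping test.

```python
def gauss_seidel(A, b, x0=None, tol=1e-10, max_iter=500):
    """Gauss-Seidel iteration: each new x_i is used as soon as it is computed."""
    n = len(A)
    x = [float(v) for v in x0] if x0 is not None else [0.0] * n
    for it in range(1, max_iter + 1):
        change = 0.0
        for i in range(n):
            # entries before i already hold the new iterate; entries after i the old one
            new = (b[i] - sum(A[i][k] * x[k] for k in range(n) if k != i)) / A[i][i]
            change = max(change, abs(new - x[i]))
            x[i] = new
        if change <= tol * max(abs(v) for v in x):    # relative stopping test (assumed form)
            return x, it
    raise RuntimeError("Gauss-Seidel iteration did not converge")
```

Because the sweep over i is sequential, this loop cannot be split into independent tasks the way the Jacobi update can.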


Convergence of Iterative Solution Methods (C&C 4th, 11.2.1, p. 291)

Rewrite the given system:

[A]{x} = ([B] + [E]){x} = {b}

where [B] is diagonal or triangular, so we can solve [B]{y} = {g} quickly. Thus,

$$ [B]\{x\}^{j+1} = \{b\} - [E]\{x\}^{j} $$

which is effectively

$$ \{x\}^{j+1} = [B]^{-1}\bigl(\{b\} - [E]\{x\}^{j}\bigr) $$

The true solution {x}c satisfies

$$ \{x\}_c = [B]^{-1}\bigl(\{b\} - [E]\{x\}_c\bigr) $$

Subtracting yields

$$ \{x\}_c - \{x\}^{j+1} = -[B]^{-1}[E]\bigl(\{x\}_c - \{x\}^{j}\bigr) $$

So

$$ \bigl\|\{x\}_c - \{x\}^{j+1}\bigr\| \le \bigl\|[B]^{-1}[E]\bigr\|\,\bigl\|\{x\}_c - \{x\}^{j}\bigr\| $$

The iterations converge linearly if ||[B]⁻¹[E]|| < 1:

=> ||([D] + [Lo])⁻¹[Uo]|| < 1 for Gauss-Seidel

=> ||[D]⁻¹([Lo] + [Uo])|| < 1 for Jacobi

Iterative methods will not converge for all systems of equations, nor for all possible rearrangements.

If the system is diagonally dominant, i.e., |aii| > |aij| for i ≠ j, then

$$ x_i = \frac{b_i}{a_{ii}} - \frac{a_{i1}}{a_{ii}}x_1 - \frac{a_{i2}}{a_{ii}}x_2 - \cdots - \frac{a_{in}}{a_{ii}}x_n \quad (\text{omitting the term } j = i) $$

with all |aij/aii| < 1.0, i.e., small slopes.

A sufficient condition for convergence:

$$ |a_{ii}| > \sum_{\substack{j=1\\ j \ne i}}^{n} |a_{ij}| \quad \text{for every } i $$

i.e., the system is diagonally dominant.

Notes:

1. If the above does not hold, the iteration still may converge.

2. This looks similar to the infinity norm of [A]; in fact it is equivalent to ||[D]⁻¹([Lo] + [Uo])||∞ < 1.
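Both forms of the sufficient condition are easy to test in code. This Python sketch (hypothetical names) checks row-wise diagonal dominance and computes ||[D]⁻¹([Lo] + [Uo])||∞, the infinity norm of the Jacobi iteration matrix, which is below 1 exactly when the rows are dominant.

```python
def is_diagonally_dominant(A):
    """True if |a_ii| > sum over j != i of |a_ij| for every row i."""
    return all(abs(row[i]) > sum(abs(v) for j, v in enumerate(row) if j != i)
               for i, row in enumerate(A))


def jacobi_matrix_norm_inf(A):
    """||[D]^-1([Lo] + [Uo])||_inf = max over i of sum_{j != i} |a_ij| / |a_ii|."""
    return max(sum(abs(v) for j, v in enumerate(row) if j != i) / abs(row[i])
               for i, row in enumerate(A))
```

For the example matrix the norm is (2 + 6)/30 ≈ 0.27, from the third row. The interchanged ordering (2, 6, 30 first) fails the test, which matches its poor behaviour in elimination.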


Improving Rate of Convergence of Iterative Solution Schemes (C&C 4th, 11.2.2, p. 294)

Relaxation Schemes:

$$ x_i^{\text{new}} = \lambda\,x_i^{\text{trial}} + (1 - \lambda)\,x_i^{\text{old}} $$

where 0.0 < λ < 2.0.

Underrelaxation (0.0 < λ < 1.0)

- More weight is placed on the previous value.
- Often used to:
  - make a nonconvergent system convergent
  - expedite convergence by damping out oscillations

Overrelaxation (1.0 < λ < 2.0)

- More weight is placed on the new value.
- Assumes the new value is heading in the right direction, and hence pushes the new value closer to the true solution.

The choice of λ is highly problem dependent and is empirical, so relaxation is usually used only for often-repeated calculations of a particular class.
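Relaxation fits naturally into the Gauss-Seidel sweep: compute the trial value, then blend it with the old one. This Python sketch (hypothetical name, same assumed stopping test as the earlier sketches) reduces to plain Gauss-Seidel at λ = 1.

```python
def gauss_seidel_relaxed(A, b, lam=1.0, x0=None, tol=1e-10, max_iter=1000):
    """Gauss-Seidel with relaxation: x_new = lam * x_trial + (1 - lam) * x_old."""
    if not 0.0 < lam < 2.0:
        raise ValueError("relaxation factor must satisfy 0 < lam < 2")
    n = len(A)
    x = [float(v) for v in x0] if x0 is not None else [0.0] * n
    for it in range(1, max_iter + 1):
        change = 0.0
        for i in range(n):
            trial = (b[i] - sum(A[i][k] * x[k] for k in range(n) if k != i)) / A[i][i]
            new = lam * trial + (1.0 - lam) * x[i]     # blend trial and old values
            change = max(change, abs(new - x[i]))
            x[i] = new
        if change <= tol * max(abs(v) for v in x):     # relative stopping test (assumed form)
            return x, it
    raise RuntimeError("relaxed Gauss-Seidel did not converge")
```

Comparing the returned iteration counts for a few λ values on a representative problem is the kind of empirical tuning the notes describe.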

Why Iterative Solutions?

You often need to solve Ax = b where n is in the 1000's:

- Description of a building or airframe
- Finite-difference approximation to a PDE

Most of A's elements will be zero; finite-difference approximations to the Laplace equation have five aij ≠ 0 in each row of A.

Direct method (Gaussian elimination)

- Requires n³/3 flops (n = 5000: n³/3 ≈ 4 x 10¹⁰ flops)
- Fills in many of the n² - 5n zero elements of A

Iterative methods (Jacobi or Gauss-Seidel)

- Never store A (say n = 5000: no need to store 4n² bytes = 100 Megabytes)
- Only need to compute (A - B)x and to solve Bx^(t+1) = b

Effort:

- Suppose B is diagonal: solving Bv = b takes n flops
- Computing (A - B)x takes 4n flops
- For m iterations: 5mn flops

For n = m = 5000, 5mn = 1.25 x 10⁸. At worst O(n²).

Other Iterative Methods (not covered in detail in this course)

For very sparse and very diagonally dominant systems, Jacobi or Gauss-Seidel may be successful and appropriate. However, the potential for very slow convergence in many practical engineering simulations tends to favor other, more sophisticated iterative methods. For example, for a symmetric positive definite [A] the conjugate gradient method is guaranteed to converge in at most n iterations (if roundoff is negligible). The preconditioned conjugate gradient methods converge even more rapidly.
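For orientation only, here is a minimal Python sketch of the unpreconditioned conjugate gradient method for a symmetric positive definite [A]; all names are hypothetical. Like the Jacobi and Gauss-Seidel sketches, it touches [A] only through matrix-vector products. By default it runs at most n iterations, in line with the finite-termination property (in exact arithmetic).

```python
import math


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def mat_vec(A, x):
    return [dot(row, x) for row in A]


def conjugate_gradient(A, b, tol=1e-12, max_iter=None):
    """Conjugate gradient for symmetric positive definite [A] (no preconditioning)."""
    n = len(b)
    max_iter = n if max_iter is None else max_iter
    x = [0.0] * n
    r = [float(v) for v in b]            # residual {b} - [A]{x} for the start {x} = 0
    p = r[:]                             # first search direction
    rs_old = dot(r, r)
    b_norm = math.sqrt(rs_old)
    for _ in range(max_iter):
        if math.sqrt(rs_old) <= tol * b_norm:
            break
        Ap = mat_vec(A, p)
        alpha = rs_old / dot(p, Ap)      # step length along p
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rs_new = dot(r, r)
        p = [ri + (rs_new / rs_old) * pi for ri, pi in zip(r, p)]   # new conjugate direction
        rs_old = rs_new
    return x
```

For the 2 x 2 system [[4, 1], [1, 3]]{x} = {1, 2}, two iterations already give x ≈ (1/11, 7/11).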

You might also like

- Ieotab 3Document4 pagesIeotab 3Kayla DollenteNo ratings yet

- Fresh or Recycled Water Logs And/or Chips: Wood PreparationDocument1 pageFresh or Recycled Water Logs And/or Chips: Wood PreparationKayla DollenteNo ratings yet

- Thinkpad X13 Yoga Gen 2 User GuideDocument66 pagesThinkpad X13 Yoga Gen 2 User GuideKayla DollenteNo ratings yet

- Meralco Bill 409851010101 10232021 - 1Document2 pagesMeralco Bill 409851010101 10232021 - 1Kayla DollenteNo ratings yet

- Orifice Plate Test ResultsDocument10 pagesOrifice Plate Test ResultsKayla DollenteNo ratings yet

- St. Jude Novena PrayerDocument2 pagesSt. Jude Novena PrayerKayla DollenteNo ratings yet

- 1997 11 14 Guide Pulppaper JD Fs2Document3 pages1997 11 14 Guide Pulppaper JD Fs2Yudhi Dwi KurniawanNo ratings yet

- Aeotab 12Document12 pagesAeotab 12Kayla DollenteNo ratings yet

- Petroleum Whitepaper 7-15-2013Document68 pagesPetroleum Whitepaper 7-15-2013Kayla DollenteNo ratings yet

- E302Document4 pagesE302Kayla DollenteNo ratings yet

- Chemical Engineering Lab Drying Experiment ResultsDocument2 pagesChemical Engineering Lab Drying Experiment ResultsKayla DollenteNo ratings yet

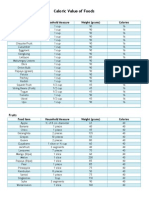

- Caloric Value of FoodsDocument3 pagesCaloric Value of FoodsKayla DollenteNo ratings yet

- 79 e 4150 D 6054 Be 6034Document9 pages79 e 4150 D 6054 Be 6034Kayla DollenteNo ratings yet

- Drying Curves of Non-Porous SolidsDocument6 pagesDrying Curves of Non-Porous SolidsKayla DollenteNo ratings yet

- Diagnostic Exam Review Phy10Document24 pagesDiagnostic Exam Review Phy10Kayla DollenteNo ratings yet

- E301Document3 pagesE301Kayla DollenteNo ratings yet

- 86 Measuring A Discharge Coefficient of An Orifice For An Unsteady Compressible FlowDocument5 pages86 Measuring A Discharge Coefficient of An Orifice For An Unsteady Compressible FlowKayla DollenteNo ratings yet

- Flow Measurements Using OrificeDocument4 pagesFlow Measurements Using OrificeKovačević DarkoNo ratings yet

- Quadratic Relation and FunctionsDocument37 pagesQuadratic Relation and FunctionsKayla DollenteNo ratings yet

- Chanson de Roland - Full SummaryDocument16 pagesChanson de Roland - Full SummaryKayla DollenteNo ratings yet

- Prayer Before ExaminationDocument2 pagesPrayer Before ExaminationKayla DollenteNo ratings yet

- Ir Presentation PDFDocument17 pagesIr Presentation PDFMarr BarolNo ratings yet

- Diagnostic Exam Review Phy10Document24 pagesDiagnostic Exam Review Phy10Kayla DollenteNo ratings yet

- Chipping Initial Cooking Washing Debarking Screening: Refining (Continuous Digester)Document1 pageChipping Initial Cooking Washing Debarking Screening: Refining (Continuous Digester)Kayla DollenteNo ratings yet

- IRR of RA 9297Document21 pagesIRR of RA 9297Peter Jake Patriarca0% (1)

- Eulers MethodDocument3 pagesEulers MethodKayla DollenteNo ratings yet

- Dr. Neal Bushaw: at atDocument3 pagesDr. Neal Bushaw: at atKayla DollenteNo ratings yet

- School of Chemical Engineering and Chemistry: Mapua Institute of TechnologyDocument2 pagesSchool of Chemical Engineering and Chemistry: Mapua Institute of TechnologyKayla DollenteNo ratings yet

- Boyce/Diprima 10 Ed, CH 1.1: Basic Mathematical Models Direction FieldsDocument27 pagesBoyce/Diprima 10 Ed, CH 1.1: Basic Mathematical Models Direction FieldsKayla DollenteNo ratings yet

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (894)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- What Is Computer ScienceDocument2 pagesWhat Is Computer ScienceRalph John PolicarpioNo ratings yet

- Ascon IO XS ENDocument1 pageAscon IO XS ENthiodoroNo ratings yet

- U50SI1 Schematics OverviewDocument32 pagesU50SI1 Schematics OverviewHamter YoNo ratings yet

- Ece Upcp ResumeDocument1 pageEce Upcp Resumeapi-532246334No ratings yet

- Instructions For CX ProgrammerDocument1,403 pagesInstructions For CX Programmergustavoxr650No ratings yet

- Nordson p4 p7 p10 PDFDocument319 pagesNordson p4 p7 p10 PDFRicardo CruzNo ratings yet

- MTINV 1-D interpretation of magnetotelluric EM soundingsDocument8 pagesMTINV 1-D interpretation of magnetotelluric EM soundingsAnnisa Trisnia SNo ratings yet

- HLR9820-Configuration Guide (V900R003C02 04, Db2)Document105 pagesHLR9820-Configuration Guide (V900R003C02 04, Db2)ynocNo ratings yet

- SEW Eurodrive Gearmotors PDFDocument23 pagesSEW Eurodrive Gearmotors PDFDeki PurnayaNo ratings yet

- Process Synchronization: Critical Section ProblemDocument8 pagesProcess Synchronization: Critical Section ProblemLinda BrownNo ratings yet

- ECG Circuit Design & ImplementationDocument1 pageECG Circuit Design & ImplementationJack MarshNo ratings yet

- User Manual: Series X - Maritime Multi Computer (MMC) ModelsDocument69 pagesUser Manual: Series X - Maritime Multi Computer (MMC) ModelsMariosNo ratings yet

- One-Instruction Set Computer - WikipediaDocument15 pagesOne-Instruction Set Computer - WikipediaAnuja SatheNo ratings yet

- EM 9280 Compactline EM 9380 Compactline: English Technical Service ManualDocument46 pagesEM 9280 Compactline EM 9380 Compactline: English Technical Service ManualIrimia MarianNo ratings yet

- DC DC Converter Simulation White PaperDocument12 pagesDC DC Converter Simulation White PaperGiulio Tucobenedictopacifjuamar RamírezbettiNo ratings yet

- Instruction Manual EDB245-420 1Document30 pagesInstruction Manual EDB245-420 1rpshvjuNo ratings yet

- Java (TM) 6 Update 27 - UninstallDocument62 pagesJava (TM) 6 Update 27 - UninstallChirag PanditNo ratings yet

- Stack Overflows: Reversed Hell Networks - Creative Research FacilityDocument16 pagesStack Overflows: Reversed Hell Networks - Creative Research FacilityLeandro MussoNo ratings yet

- Edge Adaptive Image Steganography Based On LSB Matching Revisited CodeDocument24 pagesEdge Adaptive Image Steganography Based On LSB Matching Revisited Codegangang1988No ratings yet

- SPNGN1101SG Vol2Document376 pagesSPNGN1101SG Vol2Dwi Utomo100% (1)

- Japanese Otome Games For Learners - Japanese Otome Games Sorted by Japanese DifficultyDocument9 pagesJapanese Otome Games For Learners - Japanese Otome Games Sorted by Japanese DifficultyJessicaNo ratings yet

- 12 Important Specifications of Graphics Card Explained - The Ultimate Guide - BinaryTidesDocument13 pages12 Important Specifications of Graphics Card Explained - The Ultimate Guide - BinaryTidespoptot000No ratings yet

- TE Sem V Exam Schedule 2013Document1 pageTE Sem V Exam Schedule 2013Rahul RawatNo ratings yet

- DECA User Manual: July 20, 2017Document134 pagesDECA User Manual: July 20, 2017ADHITYANo ratings yet

- Accutorr V Service ManualDocument125 pagesAccutorr V Service ManualMiguel IralaNo ratings yet

- CRS328-24P-4S+RM - 28 Port 1GbE Switch with 24 PoE+ PortsDocument2 pagesCRS328-24P-4S+RM - 28 Port 1GbE Switch with 24 PoE+ Portsargame azkaNo ratings yet

- Plasma TV: User ManualDocument38 pagesPlasma TV: User ManualTony CharlesNo ratings yet

- DCP Orientation HandbookDocument30 pagesDCP Orientation HandbookOnin C. OpeñaNo ratings yet

- BACnet MS TP Configuration Guide PN50032 Rev A Mar 2020Document14 pagesBACnet MS TP Configuration Guide PN50032 Rev A Mar 2020litonNo ratings yet

- How To Add and Clear Items in A ListBox ControlDocument7 pagesHow To Add and Clear Items in A ListBox ControlAdrian AlîmovNo ratings yet