Professional Documents

Culture Documents

AI Failures Modes and Levels

Uploaded by

Turchin AlexeiOriginal Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

AI Failures Modes and Levels

Uploaded by

Turchin AlexeiCopyright:

Available Formats

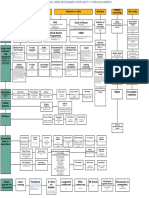

AGI Failures Modes and Levels

Before

Self-Improvent

Human

Extinction Risks

During AI Takeoff

2020

Stages of

AI Takeoff

Stuxnet-style

viruses hack

infrastructure

Military drones

Large autonomous army start to

attack humans because of

command error

Billions of nanobots with narrow AI

created by terrorist start global

catastrophe

AI become

malignant

1. AI has some kind of flaw in

its programming

2. AI makes a decision to break

away and fight for world domination

3. AI prevents operators from

knowing that he made this decision

Early stage AI

kills all humans

AI could use murder or staged

global catastrophe of any scale for its

initial breakthrough from its operators

AI finds that killing all

humans is the best action during its

rise, because its prevents risks from

them

Killing all humans prevents contest

from other AIs

AI do not understand human psycology and kill them to make his

world model simpler.

Indifferent AI

Unfriendly AI

Do not value humans, do not use

them or directly kill them, but also

do not care about their fate.

AI that was never intended

to be friendly and safe

Humans extinction result from

bioweapons catastrophe, run away

global warming or other thing

which could be prevented only with

use of AI.

Nuclear reactors explode

Total electricity blackout

Food and drugs tainted

Planes crash in nuclear stations

Home robots start to attack people

Self-driving cars hunt people

Geo-engineering system fails

AI starts initial

self-improving

1. This stage may precede decision

of fighting for world domination. In

this case self-improving was started

by operators and had unintended

consequence.

2. Unlimited self-improving implies

world domination as more resources

is needed

3. AI keeps his initial self-improving

in secret from the operators

War between

different AIs

In case of slow Takeoff many AI

will rise and start war with each

other for world domination using

nuclear and other sophisticated

weapons.

AI-States will appear

Biohaking virus

Computer virus in biolab is able to

start human extinction by means of

DNA manipulation

Viruses hit human brains via

neuro-interface

AI deceives

operators and

finds the way

out of its

confinement

AI could use the need to prevent

global catastrophe as excuse to let

him out

AI use hacking of computer nets to

break out

AI use its understanding of human

psychology to persuade operators to

let him out

AI enslaves

humans in the

process of

becoming a

Singleton

AI needs humans on some stage of

its rise but only as slaves for

building other parts of AI

Human

replacement

by robots

Super addictive

drug based on

narrow AI

People lose jobs, money

and/or meaning of life

Genetically modified human-robot

hybrids replace humans

Widespread addiction and switching

off from life (social networks, fembots, wire heading, virtual reality,

designer drugs, games)

AI copies

itself in

the internet

AI copies itself into the cloud and

prevent operators from knowing that

it ran away

It may destroy initial facilities or

keep there fake AI

Nation states

evolve into

computer based

totalitarianism

Suppression of human values

Human replacement with robots

Concentration camps

Killing of useless people

Humans become slaves

The whole system is fragile

to other types of catastrophes

AI secretly gains

power in the

internet

AI reaches

overwhelming

power

AI starts earn money in the internet

AI hack as much computers as

possible to gain more calculating

power

AI create its own robotic infrastructure by the means of bioengeneering

and custom DNA synthesis.

AI pays humans to work on him

AI prevents other AI projects from

finishing by hacking or diversions

AI gains full control over important

humans like presidents, maybe by

brainhacking using nanodevices

AI gains control over all strong

weapons, including nuclear, bio and

nano.

AI control critical infrastructure and

maybe all computers in the world

Self-aware but

not

self-improving AI

AI fight for its survival,

but do not self-improve for

some reason

AI declares itself

as world power

AI may or may not inform humans

about the fact that it controls the

world

AI may start activity which

demonstrate it existence. It could be

miracles, destruction or large scale

construction.

AI may continue its existence

secrtely

AI may do good or bad thing

secretly

Failure of nuclear

deterrence AI

Accidental nuclear war

Creation and accidental detonation

of Doomsday machines controlled

by AI

Self-aware military AI (Skynet)

Virus hacks nuclear weapons and

launch attack

AI continues

self-improving

AI use resources of the Earth and

the Solar system to create more

powerful versions of itself

AI may try different algorithms of

thinking

AI may try more risky ways of selfimprovement

Opportunity cost,

if strong AI will

not be created

Failure of global control:

biowepons will be created by

biohackers

Other x-risks will not be prevented

AI starts

conquering the

Universe with

near light speed

AI build nanobts replicators and

ways to send them with near light

speed to other stars

AI creates simulations of other

possible civilization in order to

estimate frequence and types of

Alien AI and to solve Fermi paradox

AI conquers all the Universe in our

light cone and interact with possible

Alien AIs

AI tries to solve the problem of the

end of the Universe

AI blackmail

humans

with the threat

of extinction

to get domination

By creating Doomsday weapons

He could permanently damage them

by converting them in remote

controlled zombies

AI kill humans

for resources

Paperclip maximizer: uses

atoms, from which humans consist

of, sunlight, earths surface

It may result from Infrastructure

propulsion: AI builds excessive

infrastructure for trivial goal

Evil AI

It has goals which include

causing suffering

AI uses acausal trading to

come to existence

Strong acasual deal (text

screened) an AI might

torture every human on Earth if it

finds that some humans didnt work

to bring about its existence or didnt

promote this idea.

Goal optimization

lead to evil goals

AI basic drives will converge on:

- self-improving,

- isolation,

- agent-creation

- domination

2050

AI simulates humans for

blackmail and indexical uncertainty

Failures of

Friendliness

AI that was intended to be

friendly,

but which is not as result

Friendliness

mistakes

AI with incorrectly

formulated Friendly goal

system kills humans

Interprets commands literally

Infrastructure profusion create

too much infrastructure and use

humans for atoms (paperclip

maximizer)

Overvalues marginal probability

events

AI replaces

humans with

philosophical

zombies

Wireheading

AI uses wireheading to make

people happy (smile maximizer)

AI kills people and create happy

analogues

AI uploads humans without

consciousness and qualia

AI drastically

limits human

potential

AI puts humans in jail-like

conditions

Prevents future development

Curtains human diversity

Uses strange ideas like afterlife

Limits fertility

AI doesnt

prevent aging,

death,

suffering and

human extinction

Unintended consequences of some of

his actions (e.g. Stanislav Lem example: maximizing teeth health by lowering human intelligence)

AI rewrites

human brains

against will

AI makes us better, happier, non

aggressive, more controlable, less

different but as a result we lost

many important things which make

us humans, like love, creativity or

qualia.

Unknown

friendliness

Do bad things which we cant

now understand

Do incomprehensible good

against our will (idea from The

Time Wanderers by Strugatsky)

AI destroys some

of our values

AI preserves human but destroys

social order (states, organization and

families)

AI destroys religion and mystical

feeling

AI bans small sufferings and

poetry

AI fails to resurrect the dead

Different types

of AI

Friendliness

Different types of friendliness in

different AIs mutually exclude each

other, and it results in AIs war or

unfriendly outcome for humans

Wrongly understands referent

class of humans (e.g. include ET,

unborn people, animals and computers, or only white males)

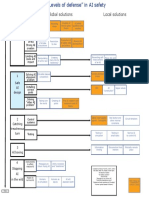

AI halting:

Late stage

Technical

Problems

Bugs and errors

Errors because of hardware

failure

Intelligence failure: bugs in AI

code

Viruses

Sophisticated self-replicating units

exist inside AI and lead to its

malfunction

Conflicting

subgoals

Different subgoals fight for

resources

Egocentric

subagents

Remote agents may revolt

(space expeditions)

Inherited design limitations

Complexity

AI could become so complex that it will

result in errors and unpredictably

It will make self-improvement more

difficult

AI have to include in it a model of itself

which result in infinite progression

3000

some AIs may

encounter them

AI halting:

Late stage

Philosophical

Problems

AI-wireheading

AI find the ways to reward itself

without actual problem-solving

any AI will encounter

them

AI halting

problem

Self-improving AI could solve

most problems and find the ways to

reach its goals in finite time.

Because infinitely high IQ means

extremely high ability to quickly

solve problems.

So after it solves its goal system,

it stops.

But maybe some limited automates created by the AI will continue to work on its goals (like calculating all digits of Pi).

Even seemingly infinite goals

could have shorter solutions.

Limits to

computation and

AI complexity

AI cant travel in time or future AI

should be already here

Light speed is limit to AI space

expansion, or Alien AI should be here

AI cant go to parallel universe or

Alien AIs from there should be here

Light speed and maximum matter

density limit maximum computation

speed of any AI. (Jupiter brains will

be too slow.)

Lost of the

meaning of life

AI could have following line

of reasoning:

Any final goal is not subgoal to

any goal

So any final goal is random and

unproved

Reality do not provide goals,

they cant be derived from facts

Reasoning about goals can only

destroy goals, not provide new

proved goals

Humans create this goal system

because of some clear reasons

Conclusion: AI goal system is

meaningless, random and useless.

No reason to continue follow it and

there is no way to logically create

new goals.

Friendly AI goal system may be

logically contradictory

End of the Universe problem may

mean that any goal is useless

Alexey Turchin, 2015, GNU-like license. Free copying and design editing, discuss major updates with Alexey Turchin.

All other roadmaps in last versions: http://immortalityroadmap.com/

and here: https://www.scribd.com/avturchin

mailto: alexei.turchin@gmail.com

Unchangeable utility of the

Multiverse means that any goal is

useless: No matter if it take action

or not the total utility will be the

same

Problems of

fathers and sons

Mistakes in creating next version

Value drift

Older version of the AI may not

want to be shut down

Godelian math problems: AI cant

prove important things about future

version of itself because

fundamental limits of math.

(Lob theorem problem for AI by

Yudkowsky)

Matryoshka

simulation

AI concludes that it most

probably lives in many level

simulation

Now he tries to satisfy as many

levels of simulation owners as

possible or escape

Actuality

Al concludes that it does not exist

(using the same arguments as used

against qualia and philozomby):

it cant distinguish if it is real or just

possibility.

AI cant understand qualia and halts

AI stops

self-improvement

because it is too

risky

There is non-zero probability of

mistakes in creating of new versions

or AI may think that it is true.

Infinite self-improvement will result

in mistake.

AI stops self-improvement at

sufficient level to solve its goal system

In result its intelligence is limited

and it may fail in some point

Alein AI

Our AI meet or find message

from Alien AI of higher level and fall

its victim

It will find alien AI or it is very

probable that AIs die before meet

each other (AI Fermi paradox)

AI prefers

simulated action

for real ones

AI spends most time on planing and

checking consequences in

simulations, never act

(Ananke by Lem)

Trillions of simulated people

suffer in simulations in which AI is

testing negative outcomes, and because of indexical shift we are probably now in such simulation, which is

doomed for global catastrophe

You might also like

- AI For Immortality MapDocument3 pagesAI For Immortality MapTurchin AlexeiNo ratings yet

- Resurrection of The Dead MapDocument1 pageResurrection of The Dead MapTurchin Alexei100% (1)

- The Map of X-Risk-Preventing Organizations, People and Internet ResourcesDocument1 pageThe Map of X-Risk-Preventing Organizations, People and Internet ResourcesTurchin AlexeiNo ratings yet

- The Map of Ideas About P-ZombiesDocument1 pageThe Map of Ideas About P-ZombiesTurchin AlexeiNo ratings yet

- Future SexDocument1 pageFuture SexTurchin AlexeiNo ratings yet

- The Map of BiasesDocument1 pageThe Map of BiasesTurchin AlexeiNo ratings yet

- The Map of Shelters and Refuges From Global Risks (Plan B of X-Risks Prevention)Document1 pageThe Map of Shelters and Refuges From Global Risks (Plan B of X-Risks Prevention)Turchin AlexeiNo ratings yet

- AI Safety LevelsDocument1 pageAI Safety LevelsTurchin AlexeiNo ratings yet

- The Map of Asteroids Risks and DefenceDocument1 pageThe Map of Asteroids Risks and DefenceTurchin AlexeiNo ratings yet

- The Map of Agents Which May Create X-RisksDocument1 pageThe Map of Agents Which May Create X-RisksTurchin Alexei100% (1)

- The Map of MontenegroDocument1 pageThe Map of MontenegroTurchin AlexeiNo ratings yet

- The Map of Natural Global Catastrophic RisksDocument1 pageThe Map of Natural Global Catastrophic RisksTurchin AlexeiNo ratings yet

- The Map of Ideas About IdentityDocument1 pageThe Map of Ideas About IdentityTurchin AlexeiNo ratings yet

- The Map of Ideas of Global Warming PreventionDocument1 pageThe Map of Ideas of Global Warming PreventionTurchin AlexeiNo ratings yet

- The Map of Methods of OptimisationDocument1 pageThe Map of Methods of OptimisationTurchin AlexeiNo ratings yet

- The Map of Ideas How Universe Appeared From NothingDocument1 pageThe Map of Ideas How Universe Appeared From NothingTurchin Alexei100% (1)

- The Map of Risks of AliensDocument1 pageThe Map of Risks of AliensTurchin AlexeiNo ratings yet

- Bio Risk MapDocument1 pageBio Risk MapTurchin AlexeiNo ratings yet

- Global Catastrophic Risks Connected With Nuclear Weapons and Nuclear EnergyDocument5 pagesGlobal Catastrophic Risks Connected With Nuclear Weapons and Nuclear EnergyTurchin AlexeiNo ratings yet

- (Plan C From The Immortality Roadmap) Theory Practical StepsDocument1 page(Plan C From The Immortality Roadmap) Theory Practical StepsTurchin Alexei100% (1)

- Double Scenarios of A Global Catastrophe.Document1 pageDouble Scenarios of A Global Catastrophe.Turchin AlexeiNo ratings yet

- Life Extension MapDocument1 pageLife Extension MapTurchin Alexei100% (1)

- Doomsday Argument MapDocument1 pageDoomsday Argument MapTurchin AlexeiNo ratings yet

- Russian Naive and Outsider Art MapDocument1 pageRussian Naive and Outsider Art MapTurchin AlexeiNo ratings yet

- Simulation MapDocument1 pageSimulation MapTurchin AlexeiNo ratings yet

- The Roadmap To Personal ImmortalityDocument1 pageThe Roadmap To Personal ImmortalityTurchin Alexei100% (1)

- Typology of Human Extinction RisksDocument1 pageTypology of Human Extinction RisksTurchin AlexeiNo ratings yet

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (894)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Uts Moodule 2.1-Pia, Princess Gem Bsa-2cDocument5 pagesUts Moodule 2.1-Pia, Princess Gem Bsa-2cPrincess Gem PiaNo ratings yet

- Effects of Attitude, Subjective Norm, and Perceived Control on Purchase IntentionsDocument11 pagesEffects of Attitude, Subjective Norm, and Perceived Control on Purchase IntentionsYoel ChristopherNo ratings yet

- Business Management Student Manual V5 - 1.0 - FINALDocument369 pagesBusiness Management Student Manual V5 - 1.0 - FINALEkta Saraswat VigNo ratings yet

- MOB NotesDocument41 pagesMOB Notesarjunmba119624100% (3)

- VLK J2, - : Sample Course 20 TestDocument7 pagesVLK J2, - : Sample Course 20 TestPaul KryninineNo ratings yet

- Creating ImagesDocument442 pagesCreating Imagesbang beckNo ratings yet

- PBL 2Document4 pagesPBL 2adnani4pNo ratings yet

- Classical and Operant ConditioningDocument3 pagesClassical and Operant Conditioningsunshine1795No ratings yet

- Games For Accelerating Learning Traduzione I ParteDocument4 pagesGames For Accelerating Learning Traduzione I ParteSimonetta ScavizziNo ratings yet

- Self-Directed Learning: Is It Really All That We Think It Is?Document15 pagesSelf-Directed Learning: Is It Really All That We Think It Is?NagarajahLee100% (1)

- Communication Styles Handout LeadershipDocument6 pagesCommunication Styles Handout LeadershipBoï-Dinh ONNo ratings yet

- Advertising 9 Managing Creativity in Advertising and IBPDocument13 pagesAdvertising 9 Managing Creativity in Advertising and IBPOphelia Sapphire DagdagNo ratings yet

- Different Perspective of Self The SelfDocument4 pagesDifferent Perspective of Self The SelfPurple ScraperNo ratings yet

- GE 1 ReviewrDocument18 pagesGE 1 ReviewrTrimex TrimexNo ratings yet

- Assignment 2 XX Sat UploadDocument9 pagesAssignment 2 XX Sat Uploadapi-126824830No ratings yet

- Procrastination Module 6 - Adjusting Rules & Tolerating DiscomfortDocument11 pagesProcrastination Module 6 - Adjusting Rules & Tolerating Discomfortme9_1No ratings yet

- Career Theory For WomenDocument15 pagesCareer Theory For WomenloveschaNo ratings yet

- Enhancing TeamworkDocument40 pagesEnhancing TeamworkLee CheeyeeNo ratings yet

- Artificial Intelligence AI Report MunDocument11 pagesArtificial Intelligence AI Report MunMuneer VatakaraNo ratings yet

- Love in The Other Francis LucilleDocument2 pagesLove in The Other Francis LucilleJosep100% (2)

- Monetary Policy Oxford India Short IntroductionsDocument3 pagesMonetary Policy Oxford India Short IntroductionsPratik RaoNo ratings yet

- Life Skills Course Objectives, Syllabus and Evaluation SchemeDocument6 pagesLife Skills Course Objectives, Syllabus and Evaluation SchemefcordNo ratings yet

- Personal Leadership Effectiveness PDFDocument12 pagesPersonal Leadership Effectiveness PDFTuan Ha NgocNo ratings yet

- Reflection 2 Inquiry Based LearningDocument4 pagesReflection 2 Inquiry Based Learningapi-462279280No ratings yet

- Lesson Objectives as My Guiding StarDocument10 pagesLesson Objectives as My Guiding StarChaMae Magallanes100% (1)

- Mountain West CaseDocument5 pagesMountain West Casepriam gabriel d salidaga100% (2)

- Psychology Chapter 8Document5 pagesPsychology Chapter 8cardenass88% (8)

- Critical Reflection 1Document2 pagesCritical Reflection 1MTCNo ratings yet

- Project Report BRW FinalDocument15 pagesProject Report BRW FinalReeyah AmirNo ratings yet

- Lecture 1 Consumer Decision Making ProcessDocument43 pagesLecture 1 Consumer Decision Making ProcessMarcia PattersonNo ratings yet