Touchless Touchscreen SEMINAR REPORT

Touchless touchscreen technology Seminar Report 2017

ACKNOWLEDGEMENT

First and foremost, I acknowledge the abiding presence and abounding grace of Almighty God throughout the preparation of this seminar presentation.

I would like to take this opportunity to express my sincere thanks to our beloved Principal, Dr. T.G. Ansalam Raj, for providing the necessary provisions and lab facilities for a tidy presentation session.

I also express my sincere gratitude to Mr. Pramod S Das, Head of the Electronics & Communication Department, for his valuable guidance and moral support in making this seminar a success.

I express my deep sense of gratitude and obligation to my seminar guide, Mrs. Shilphy P Gonzalvez, and our seminar coordinator, Mrs. Vinaya Mohan, for their valuable assistance with my seminar.

Finally, my heartfelt thanks go to all those concerned, especially the staff and students of the Electronics & Communication Department, for their support. I am grateful to my family for their constant inspiration and encouragement.

AMAL.S

Dept of ECE, VKCET Trivandrum


ABSTRACT

Touchscreens created a great furore when they first appeared, and touchscreen displays are now ubiquitous worldwide. However, frequently touching a touchscreen display with a pointing device such as a finger leaves scratches, can result in the gradual de-sensitization of the touchscreen to input, and can ultimately lead to failure of the touchscreen. To avoid this, a simple user interface for touchless control of electrically operated equipment is being developed. Elliptic Labs' innovative technology lets you control gadgets such as computers, MP3 players or mobile phones without touching them. Unlike other systems, which depend on distance to the sensor or on sensor selection, this system depends on hand and/or finger motions: for example, a hand wave in a certain direction, a flick of the hand in one area, holding the hand in one area, or pointing with one finger. The device is based on optical pattern recognition using a solid-state optical matrix sensor with a lens to detect hand motions. This sensor is connected to a digital image processor, which interprets the patterns of motion and outputs the results as signals to control fixtures, appliances, machinery, or any device controllable through electrical signals.


CONTENTS

Chapter no: Title

1 INTRODUCTION TO TOUCHSCREEN

2 HISTORY OF TOUCHSCREEN

3 WORKING OF TOUCHSCREEN

4 INTRODUCTION TO TOUCHLESS TOUCHSCREEN

5 WORKING OF TOUCHLESS TOUCHSCREEN

6 TOUCHLESS UI

7 MINORITY REPORT INSPIRED TOUCHLESS TECHNOLOGY

8 ADVANTAGES

9 APPLICATIONS

10 CONCLUSION


CHAPTER 1

INTRODUCTION TO TOUCHSCREEN

A touchscreen is both an input device and an output device, normally layered on top of the electronic visual display of an information processing system. A user can give input or control the information processing system through simple or multi-touch gestures by touching the screen with a special stylus and/or one or more fingers. Some touchscreens work with ordinary or specially coated gloves, while others work only with a special stylus or pen. The user can use the

touchscreen to react to what is displayed and to control how it is displayed; for example,

zooming to increase the text size. The touchscreen enables the user to interact directly with what

is displayed, rather than using a mouse, touchpad, or any other intermediate device (other than a

stylus, which is optional for most modern touchscreens).

Touchscreens are common in devices such as game consoles, personal computers, tablet

computers, electronic voting machines, point of sale systems, and smartphones. They can also be

attached to computers or, as terminals, to networks. They also play a prominent role in the design

of digital appliances such as personal digital assistants (PDAs) and some e-readers.

The popularity of smartphones, tablets, and many types of information appliances is driving

the demand and acceptance of common touchscreens for portable and functional electronics.

Touchscreens are found in the medical field and in heavy industry, as well as for automated teller

machines (ATMs), and kiosks such as museum displays or room automation, where keyboard

and mouse systems do not allow a suitably intuitive, rapid, or accurate interaction by the user

with the display's content.

Historically, the touchscreen sensor and its accompanying controller-based firmware have

been made available by a wide array of after-market system integrators, and not by display, chip,

or motherboard manufacturers. Display manufacturers and chip manufacturers worldwide have

acknowledged the trend toward acceptance of touchscreens as a highly desirable user interface


component and have begun to integrate touchscreens into the fundamental design of their

products.

Optical touchscreens are a relatively modern development in touchscreen technology, in which

two or more image sensors are placed around the edges (mostly the corners) of the screen.

Infrared backlights are placed in the camera's field of view on the opposite side of the screen. A

touch blocks some of the light from reaching the cameras, and the location and size of the touching object can

be calculated. This technology is growing in popularity due to its scalability, versatility, and

affordability for larger touchscreens.
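The position calculation in an optical touchscreen can be illustrated with simple triangulation. The sketch below is only an illustration under stated assumptions, not any vendor's method: it assumes two cameras at the top-left and top-right corners of the screen, each reporting the angle between the top edge and the line of sight to the touching object. The function name `locate_touch` and its parameters are hypothetical.

```python
import math

def locate_touch(angle_left, angle_right, width):
    """Intersect the sight lines of two corner cameras (assumed geometry).

    angle_left and angle_right are the angles, in radians, between the top
    edge of the screen and the line from each camera to the touching object.
    The left camera sits at (0, 0) and the right camera at (width, 0).
    """
    tan_l = math.tan(angle_left)
    tan_r = math.tan(angle_right)
    # Left ray: y = x * tan_l.  Right ray: y = (width - x) * tan_r.
    x = width * tan_r / (tan_l + tan_r)
    y = x * tan_l
    return x, y

# Example: a touch at (30, 40) on a screen 100 units wide.
a = math.atan2(40, 30)         # angle seen by the left camera
b = math.atan2(40, 100 - 30)   # angle seen by the right camera
x, y = locate_touch(a, b, 100)
```

With more than two sensors, the same intersection could be computed for each pair and averaged to reduce error.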


CHAPTER 2

HISTORY OF TOUCHSCREEN

E.A. Johnson of the Royal Radar Establishment, Malvern described his work on capacitive

touchscreens in a short article published in 1965 and then more fully, with photographs and

diagrams, in an article published in 1967. The applicability of touch technology for air traffic

control was described in an article published in 1968. Frank Beck and Bent Stumpe, engineers

from CERN, developed a transparent touchscreen in the early 1970s, based on Stumpe's work at

a television factory in the early 1960s. Then manufactured by CERN, it was put to use in 1973.

A resistive touchscreen was developed by American inventor George Samuel Hurst, who

received US patent #3,911,215 on October 7, 1975. The first version was produced in 1982. In

1972, a group at the University of Illinois filed for a patent on an optical touchscreen that became

a standard part of the Magnavox Plato IV Student Terminal. Thousands were built for the

PLATO IV system.

These touchscreens had a crossed array of 16 by 16 infrared position sensors, each composed

of an LED on one edge of the screen and a matched phototransistor on the other edge, all

mounted in front of a monochrome plasma display panel. This arrangement can sense any

fingertip-sized opaque object in close proximity to the screen. A similar touchscreen was used on

the HP-150 starting in 1983; this was one of the world's earliest commercial touchscreen

computers. HP mounted their infrared transmitters and receivers around the bezel of a 9" Sony

Cathode Ray Tube (CRT). In 1984, Fujitsu released a touch pad for the Micro 16, to deal with

the complexity of kanji characters, which were stored as tiled graphics. In 1985, Sega released

the Terebi Oekaki, also known as the Sega Graphic Board, for the SG-1000 video game console

and SC-3000 home computer. It consisted of a plastic pen and a plastic board with a transparent

window where the pen presses are detected. It was used primarily for a drawing software

application.


A graphic touch tablet was released for the Sega AI Computer in 1986. Touch-sensitive

Control-Display Units (CDUs) were evaluated for commercial aircraft flight decks in the early

1980s. Initial research showed that a touch interface would reduce pilot workload as the crew

could then select waypoints, functions and actions, rather than be "head down" typing in

latitudes, longitudes, and waypoint codes on a keyboard. An effective integration of this

technology was aimed at helping flight crews maintain a high level of situational awareness of

all major aspects of the vehicle operations including its flight path, the functioning of various

aircraft systems, and moment-to-moment human interactions.

Most user interface books stated that touchscreen selections were limited to targets larger than the average finger. At the time, selections were made in such a way that a target was selected as soon as the finger came over it, and the corresponding action was performed immediately. Errors were common, due to parallax or calibration problems, leading to frustration. A new strategy called the "lift-off strategy" was introduced by researchers at the University of Maryland Human-Computer Interaction Lab and is still used today. As users touch the screen, feedback is provided as to what will be selected; users can adjust the position of the finger, and the action takes place only when the finger is lifted off the screen.
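The lift-off strategy can be sketched as a small event loop. This is a minimal illustration, assuming touch input arrives as a list of `(kind, x, y)` events and targets are axis-aligned rectangles; the function and variable names are hypothetical and not taken from the original research.

```python
def lift_off_select(events, targets):
    """Select the target under the finger at lift-off.

    events  -- sequence of (kind, x, y) tuples, kind in {"down", "move", "up"}
    targets -- dict mapping a target name to its (x0, y0, x1, y1) rectangle
    Returns (selected, feedback): the chosen target (or None) and the list
    of targets highlighted while the finger was still on the screen.
    """
    def target_at(x, y):
        for name, (x0, y0, x1, y1) in targets.items():
            if x0 <= x <= x1 and y0 <= y <= y1:
                return name
        return None

    feedback = []
    for kind, x, y in events:
        if kind in ("down", "move"):
            # Only show what would be selected; no action is taken yet.
            feedback.append(target_at(x, y))
        elif kind == "up":
            # The action fires only when the finger leaves the screen.
            return target_at(x, y), feedback
    return None, feedback

targets = {"A": (0, 0, 9, 9), "B": (10, 0, 19, 9)}
# The finger lands on A, slides to B, and lifts there, so B is selected.
events = [("down", 5, 5), ("move", 12, 5), ("up", 12, 5)]
selected, feedback = lift_off_select(events, targets)
```

Under the older "first contact" approach, the same events would have triggered target A immediately, which is the kind of error the lift-off strategy avoids.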

This allowed the selection of small targets, down to a single pixel on a VGA screen (the best standard of the time). Sears et al. (1990) gave a review of academic research on single and multi-touch human-computer interaction of the time, describing gestures such as rotating knobs, adjusting sliders, and swiping the screen to activate a switch (or a U-shaped gesture for a toggle switch). The University of Maryland Human-Computer Interaction Lab team developed and studied small touchscreen keyboards (including a study that showed that users could type at 25 wpm on a touchscreen keyboard compared with 58 wpm on a standard keyboard), thereby paving the way for the touchscreen keyboards on mobile devices. They also designed and implemented multitouch gestures such as selecting a range of a line, connecting objects, and a "tap-click" gesture to select while maintaining location with another finger.


CHAPTER 3

WORKING OF TOUCHSCREEN

Figure 3.1 Working of touchless touchscreen

A resistive touchscreen panel comprises several layers, the most important of which are two

thin, transparent electrically resistive layers separated by a thin space. These layers face each

other with a thin gap between. The top screen (the screen that is touched) has a coating on the

underside surface of the screen. Just beneath it is a similar resistive layer on top of its substrate.

One layer has conductive connections along its sides, the other along top and bottom.


A voltage is applied to one layer, and sensed by the other. When an object, such as a fingertip

or stylus tip, presses down onto the outer surface, the two layers touch and become connected at

that point. The panel then behaves as a pair of voltage dividers, one axis at a time. By rapidly

switching between each layer, the position of the pressure on the screen can be read.
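The voltage-divider reading can be turned into a screen position with a simple linear scaling. The sketch below assumes an idealized panel read through a 10-bit analog-to-digital converter (values 0 to 1023) with no calibration offsets; the function name and parameters are hypothetical.

```python
def resistive_position(adc_x, adc_y, width, height, adc_max=1023):
    """Convert two voltage-divider readings into pixel coordinates.

    adc_x is sampled while the voltage is applied across the X layer and
    adc_y while it is applied across the Y layer (one axis at a time).
    A linear, uncalibrated panel is assumed.
    """
    x = adc_x / adc_max * (width - 1)
    y = adc_y / adc_max * (height - 1)
    return round(x), round(y)

# A press near the horizontal middle, a quarter of the way down.
pos = resistive_position(adc_x=512, adc_y=256, width=320, height=240)
```

A real controller would typically add a calibration step, since the usable voltage range rarely spans the full converter range.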

A capacitive touchscreen panel consists of an insulator such as glass, coated with a

transparent conductor such as indium tin oxide (ITO). As the human body is also an electrical

conductor, touching the surface of the screen results in a distortion of the screen's electrostatic

field, measurable as a change in capacitance. Different technologies may be used to determine

the location of the touch. The location is then sent to the controller for processing.
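One way to picture how a touch is located from a change in capacitance is a grid of sensing nodes compared against an untouched baseline. The sketch below is an assumption-laden illustration, not a specific controller's algorithm: the grid layout, the count values and the threshold are all hypothetical.

```python
def find_touch(baseline, reading, threshold=5):
    """Return the weighted (row, col) centroid of changed nodes, or None.

    baseline and reading are equally sized 2-D lists of capacitance counts.
    Nodes whose value changed by at least `threshold` are treated as touched.
    """
    total = row_sum = col_sum = 0
    for r, (base_row, read_row) in enumerate(zip(baseline, reading)):
        for c, (base, value) in enumerate(zip(base_row, read_row)):
            delta = abs(value - base)
            if delta >= threshold:
                total += delta
                row_sum += r * delta
                col_sum += c * delta
    if total == 0:
        return None  # no node changed enough to count as a touch
    return row_sum / total, col_sum / total

baseline = [[100] * 4 for _ in range(3)]
reading = [row[:] for row in baseline]
reading[1][2] = 120   # a finger distorts the field near row 1, column 2
reading[1][3] = 120
touch = find_touch(baseline, reading)
```

Weighting by the size of the change lets the estimate fall between nodes, which is why the reported column here lies halfway between columns 2 and 3.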

Unlike a resistive touchscreen, one cannot use a capacitive touchscreen through most types of

electrically insulating material, such as gloves. This disadvantage especially affects usability in

consumer electronics, such as touch tablet PCs and capacitive smartphones in cold weather. It

can be overcome with a special capacitive stylus, or a special-application glove with an

embroidered patch of conductive thread passing through it and contacting the user's fingertip.

3.1 Disadvantages of Touchscreen

1. Low precision when using a finger.

2. The user has to sit or stand close to the screen.

3. The hand may cover part of the screen.

4. No direct activation of the selected function.


CHAPTER 4

INTRODUCTION TO TOUCHLESS TOUCHSCREEN

Touchless control of electrically operated equipment is being developed by Elliptic Labs. The system depends on hand or finger motions, such as a hand wave in a certain direction. The sensor can be placed either on the screen or near the screen.

The touchless touchscreen sounds like it would be nice and easy; however, after closer examination it looks like it could be quite a workout. This unique screen is made by TouchKo, White Electronic Designs, and Groupe 3D. The screen resembles the Nintendo Wii without the Wii Controller. With the touchless touchscreen your hand doesn’t have to come in contact with


the screen at all; it works by detecting your hand movements in front of it. This is a pretty unique and interesting invention, until you break out in a sweat. This technology doesn’t compare to the hologram-like IO2 Technologies Heliodisplay M3, but that's for anyone who has $18,100 lying around.

Figure 4.1 Touchless touchscreen

You probably won't see this screen in stores any time soon. Everybody loves a touchscreen, and when you get a gadget with a touchscreen the experience is really exhilarating. When the iPhone was introduced, everyone felt the same. But gradually, the exhilaration started fading. When the phone was used with a fingertip or a stylus, the screen started collecting fingerprints and scratches. Even with a screen protector, dirty marks on such a beautiful glossy screen are a strict no-no. The same thing happens with the iPod touch. Most of the time we have to wipe the screen to get a clear, unobstructed view of it.

Elliptic Labs' innovative technology lets you control gadgets such as computers, MP3 players or mobile phones without touching them. Simply point your finger in the air towards the device and move it to control navigation on the device. They term this a “Touchless human/machine user interface for 3D navigation”.


Figure 4.2 3D Navigation of Hand Movements in Touchless Screen

4.1 TOUCHLESS MONITOR

Sure, everybody is doing touchscreen interfaces these days, but this is the first time I’ve seen

a monitor that can respond to gestures without actually having to touch the screen. The monitor,

based on technology from TouchKo, was recently demonstrated by White Electronic Designs and

Tactyl Services at the CeBIT show. Designed for applications where touch may be difficult, such

as for doctors who might be wearing surgical gloves, the display features capacitive sensors that

can read movements from up to 15cm away from the screen. Software can then translate gestures

into screen commands.


Touchscreen interfaces are great, but all that touching can be a bit of a drag. Enter the wonder kids from Elliptic Labs, who are hard at work on implementing a touchless interface. The input method is, well, thin air. The technology detects motion in 3D and requires no special worn sensors for operation. By simply pointing at the screen, users can manipulate the object being displayed in 3D. Details are light on how this actually functions, but what we do know is this:

It obviously requires a sensor, but the sensor is neither hand-mounted nor present on the screen. The sensor can be placed either on the table or near the screen. The hardware setup is so compact that it can be fitted into a tiny device like an MP3 player or a mobile phone. It recognizes the position of an object from as far as 5 feet away.


CHAPTER 5

WORKING OF TOUCHLESS TOUCHSCREEN

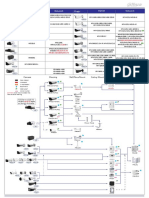

5.1 Block diagram

The system is capable of detecting movements in three dimensions without the user ever having to put a finger on the screen. Sensors are mounted around the screen that is being used; when the user interacts within the line of sight of these sensors, the motion is detected and interpreted into on-screen movements. The device is based on optical pattern recognition using a solid-state optical matrix sensor with a lens to detect hand motions.


Figure 5.1 Touchless touchscreen

This sensor is connected to a digital image processor, which interprets the patterns of motion and outputs the results as signals to control fixtures, appliances, machinery, or any device controllable through electrical signals. You just point at the screen (from as far as 5 feet away), and you can manipulate objects in 3D. A related system, Touch Wall (Section 6.3), consists of three infrared lasers which scan a surface; a camera notes when something breaks through the laser line and feeds that information back to the Plex software.

The Leap Motion controller is a sensor device that aims to translate hand movements into computer commands. The controller itself is an eight by three centimeter unit that plugs into a USB port on a computer. Placed face up on a surface, the controller senses the area above it and is sensitive to a range of approximately one meter. To date it has been used primarily in conjunction with apps developed specifically for the controller. One factor contributing to the control issues is the lack of predefined gestures, or agreed meanings for different motion controls, when using the device. This means that different motion controls will be used in different apps for the same action, such as selecting an item on the screen. Leap Motion is aware of some of the interaction issues with its controller and is planning solutions. These include the development of standardized motions for specific actions and an improved skeletal model of the hand and fingers.


5.2 Optical matrix sensor

This is based on optical pattern recognition using a solid-state optical matrix sensor. The sensor is connected to a digital image processor, which interprets the patterns of motion and outputs the results as signals. Each sensor contains a matrix of pixels, and each pixel is coupled to photodiodes incorporating charge storage regions.
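How an image processor might interpret patterns of motion from a pixel matrix can be sketched by tracking the centroid of bright pixels across successive frames. This is a simplified illustration rather than the device's actual algorithm: the frames are small 2-D lists of brightness values, and the threshold, the `min_shift` value and the gesture labels are assumptions chosen for the example.

```python
def bright_centroid(frame, threshold=128):
    """Centroid (x, y) of pixels at or above threshold, or None if none."""
    xs, ys = [], []
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value >= threshold:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return sum(xs) / len(xs), sum(ys) / len(ys)

def classify_motion(frames, min_shift=2):
    """Label the dominant hand motion across a sequence of frames."""
    points = [p for p in map(bright_centroid, frames) if p is not None]
    if len(points) < 2:
        return "none"
    dx = points[-1][0] - points[0][0]
    dy = points[-1][1] - points[0][1]
    if max(abs(dx), abs(dy)) < min_shift:
        return "hold"          # the hand stayed in one area
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "down" if dy > 0 else "up"

def frame_with_blob(x, y, size=8):
    """Build a test frame with a single bright pixel standing in for a hand."""
    frame = [[0] * size for _ in range(size)]
    frame[y][x] = 255
    return frame

# A bright spot moving from column 1 to column 6 reads as a wave to the right.
gesture = classify_motion(
    [frame_with_blob(1, 4), frame_with_blob(3, 4), frame_with_blob(6, 4)]
)
```

The resulting label could then be mapped to an output signal, for example switching a fixture on for "right" and off for "left".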

5.3 GBUI (Gesture-Based Graphical User Interface)

A gesture is a movement of part of the body, especially a hand or the head, to express an idea or meaning. A gesture-based graphical user interface (GBUI) takes such movements as its input.


Figure 5.3 GBUI Symbols

A Leap Motion controller was used by two members in conjunction with a laptop and the Leap Motion software development kit. Initial tests were conducted to establish how the controller worked and to understand basic interaction. The controller was then tested for the recognition of sign language. The finger spelling alphabet was used to test the functionality of the controller. The alphabet was chosen for the relative simplicity of individual signs, and for the diverse range of movements involved in the alphabet. The focus of these tests was to evaluate the capability and accuracy of the controller in recognizing hand movements. This capability can now be discussed in terms of the strengths and weaknesses of the controller.


CHAPTER 6

TOUCHLESS UI

The basic idea described in the patent is that there would be sensors arrayed around the

perimeter of the device capable of sensing finger movements in 3-D space.

Figure 6.1 Touchless UI

A touchless UI has been demonstrated at Microsoft's Redmond headquarters, and it involves lots of gestures which allow you to take applications and forward them on to others with simple hand movements. The demos included the concept of software understanding business processes and helping you work. So after reading a document, you could just push it off the side of your screen and the system would know to post it on an intranet and also send a link to a specific group of people.


6.1 Touch-less SDK

The Touchless SDK is an open source SDK for .NET applications. It enables developers to

create multi-touch based applications using a webcam for input. Color based markers defined by

the user are tracked and their information is published through events to clients of the SDK.
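The idea of tracking a color-based marker can be sketched as follows. This is not the Touchless SDK's .NET API; it is a plain Python stand-in that assumes a frame is a 2-D list of `(r, g, b)` tuples and that a marker is matched by a simple per-channel tolerance. The function name, the tolerance and the sample colors are hypothetical.

```python
def track_marker(frame, marker_rgb, tolerance=40):
    """Return the (x, y) centroid of pixels close to marker_rgb, or None.

    frame is a 2-D list of (r, g, b) tuples standing in for a webcam image.
    A pixel matches when every channel is within `tolerance` of the marker.
    """
    xs, ys = [], []
    for y, row in enumerate(frame):
        for x, pixel in enumerate(row):
            if all(abs(p - m) <= tolerance for p, m in zip(pixel, marker_rgb)):
                xs.append(x)
                ys.append(y)
    if not xs:
        return None  # marker not visible in this frame
    return sum(xs) / len(xs), sum(ys) / len(ys)

WHITE, RED = (255, 255, 255), (220, 30, 30)
frame = [[WHITE] * 5 for _ in range(5)]
frame[2][3] = RED     # two red pixels represent the user's marker
frame[2][4] = RED
position = track_marker(frame, marker_rgb=(230, 20, 20))
```

Calling such a function on every captured frame and reporting each new position to the application is roughly what "published through events" amounts to; tracking two markers at once gives the two contact points needed for multi-touch gestures.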

Figure 6.2 Touchless SDK

Microsoft Office Labs has released “Touchless,” a webcam-driven multi-touch interface SDK that enables “touch without touching.” Using the SDK lets developers offer users “a new and cheap way of experiencing multi-touch capabilities, without the need of expensive hardware or software. All the user needs is a camera,” to track the multi-colored objects as defined by the developer. Just about any webcam will work.


Figure 6.3 Digital matrix

6.2 Touch-less demo

The Touchless Demo is an open source application that anyone with a webcam can use to

experience multi-touch, no geekiness required.

Figure 6.4 touchless demo


The demo was created using the Touchless SDK and Windows Forms with C#. There are four fun demos: Snake, where you control a snake with a marker; Defender, an up-to-four-player version of a Pong-like game; Map, where you can rotate, zoom, and move a map using two markers; and Draw, where the marker is used to, guess what, draw! Mike demonstrated Touchless at a recent Office Labs Productivity Science Fair, where it was voted by attendees as “most interesting project.” If you wind up using the SDK, one would love to hear what use you make of it!

6.3 Touch wall

Touch Wall refers to the touch screen hardware setup itself; the corresponding software to run

Touch Wall, which is built on a standard version of Vista, is called Plex. Touch Wall and Plex

are superficially similar to Microsoft Surface, a multi-touch table computer that was introduced

in 2007 and which recently became commercially available in select AT&T stores.

Figure 6.5 Touch wall

Touch Wall is a fundamentally simpler mechanical system, and is also significantly cheaper to produce.

While Surface retails at around $10,000, the hardware to “turn almost anything into a multi-

touch interface” for Touch Wall is just “hundreds of dollars”.


Touch Wall consists of three infrared lasers that scan a surface. A camera notes when

something breaks through the laser line and feeds that information back to the Plex software.

Early prototypes, say Pratley and Sands, were made, simply, on a cardboard screen. A projector

was used to show the Plex interface on the cardboard, and the system worked fine. Touch Wall

certainly isn’t the first multi-touch product we’ve seen (see iPhone). In addition to Surface, of

course, there are a number of early prototypes emerging in this space. But what Microsoft has

done with a few hundred dollars worth of readily available hardware is stunning.

It’s also clear that the only real limit on the screen size is the projector, meaning that entire

walls can easily be turned into a multi touch user interface. Scrap those white boards in the

office, and make every flat surface into a touch display instead. You might even save some

money.


CHAPTER 7

MINORITY REPORT INSPIRED TOUCHLESS TECHNOLOGY

i. Tobii Rex

Tobii Rex is an eye-tracking device from Sweden which works with any computer running on

Windows 8. The device has a pair of infrared sensors built in that will track the user’s eyes.

Figure 7.1 Tobii Rex

ii. Elliptic Labs

Elliptic Labs allows you to operate your computer without touching it with the Windows 8

Gesture Suite.


Figure 7.2 Elliptic Labs

iii. Airwriting

Airwriting is a technology that allows you to write text messages or compose emails by

writing in the air.

Figure 7.3 Airwriting


iv. EyeSight

EyeSight is a gesture technology which allows you to navigate through your devices by just

pointing at them.

Figure 7.4 EyeSight

v. MAUZ

MAUZ is a third party device that turns your iPhone into a trackpad or mouse.

Figure 7.5 MAUZ


vi. Point Grab

Point Grab is similar to EyeSight, in that it enables users to navigate on their

computer just by pointing at it.

Figure 7.6 Point Grab

vii. Leap Motion

Leap Motion is a motion sensor device that recognizes the user’s fingers with its infrared

LEDs and cameras.

Figure 7.7 Leap Motion


viii. Myoelectric armband

Myoelectric armband or MYO armband is a gadget that allows you to control your other

Bluetooth-enabled devices using your fingers or your hands.

Figure 7.8 Myoelectric armband

ix. Microsoft Kinect

Microsoft Kinect detects and recognizes a user’s body movement and reproduces it within the video

game that is being played.

Figure 7.9 Microsoft Kinect


CHAPTER 8

ADVANTAGES

No de-sensitization of the screen.

Can be controlled from a distance.

Useful for physically handicapped people.

Easier and more satisfactory experience.

Gesturing and cursor positioning.

No drivers required.


CHAPTER 9

APPLICATIONS

i. Touchless monitor

ii. Touchless user interface

iii. Touchless SDK

iv. Touchless demo

v. Touch wall


CHAPTER 10

CONCLUSION

Touchless technology is still developing and has many future prospects. The touchless touchscreen user interface can be used effectively in computers, cell phones, webcams and laptops. Perhaps a few years down the line, our body can be transformed into a virtual mouse or virtual keyboard, turning the body itself into an input device. It appears that while the Leap Motion controller has potential, the API supporting the device is not yet ready to interpret the full range of sign language. At present, the controller can be used, with significant work, for recognition of basic signs; however, it is not appropriate for complex signs, especially those that require significant face or body contact. As a result of the significant rotation and line-of-sight obstruction of digits, conversational signs become inaccurate and indistinguishable, making the controller (at present) unusable for conversational signing. However, when addressing signs as single entities, there is potential for them to be trained into artificial neural networks.




- 1MV19CS092 Final Internship ReportDocument30 pages1MV19CS092 Final Internship ReportRehan Nadeemulla100% (3)

- Biometric ATM Security: Fingerprint and Multimodal AuthenticationDocument22 pagesBiometric ATM Security: Fingerprint and Multimodal AuthenticationsaRAth asgaRdian60% (5)

- Technical Seminar ReportDocument20 pagesTechnical Seminar ReportSunandha Sunandha89% (9)

- E-Paper Technology DocumentDocument32 pagesE-Paper Technology DocumentZuber Md54% (13)

- Silent Sound TechnologyDocument20 pagesSilent Sound TechnologySuman Savanur100% (3)

- Pavi Internship Report 2 PDFDocument55 pagesPavi Internship Report 2 PDFSuhas Naik100% (1)

- GI FI DocumentationDocument14 pagesGI FI DocumentationSunil Syon86% (7)

- Smart Note Taker: A Helpful Product for Busy LivesDocument2 pagesSmart Note Taker: A Helpful Product for Busy LivesSruthi Reddy67% (3)

- Touchless TouchscreenDocument5 pagesTouchless Touchscreenshubham100% (1)

- Fog ComputingDocument31 pagesFog ComputingPranjali100% (2)

- Wireless Usb Full Seminar Report Way2project - inDocument22 pagesWireless Usb Full Seminar Report Way2project - inmanthapan0% (1)

- A Seminar Report On Screenless Display Bachelor of Technology in Computer Science & Engineering Submitted by T.Anusha 16SS1A0555Document24 pagesA Seminar Report On Screenless Display Bachelor of Technology in Computer Science & Engineering Submitted by T.Anusha 16SS1A0555Anonymous ZowLVytSFNo ratings yet

- FINALDocument16 pagesFINALDhruv Rajput83% (12)

- Eskin Seminar ReportDocument17 pagesEskin Seminar Reportsai kiran83% (6)

- 174M1A0431-PPT ON 6G TechnologyDocument17 pages174M1A0431-PPT ON 6G TechnologyApple Balu77% (13)

- Silent Sound TechnologyDocument20 pagesSilent Sound Technologysowji75% (16)

- Seminar Report 6G Technology MerajDocument19 pagesSeminar Report 6G Technology MerajShaikh faisalNo ratings yet

- E PaperDocument22 pagesE PaperSrajit Saxena100% (6)

- DYNAMIC SORTING ALGORITHM VISUALIZER USING OPENGL ReportDocument35 pagesDYNAMIC SORTING ALGORITHM VISUALIZER USING OPENGL ReportSneha Sivakumar88% (8)

- Seminar Report Bluetooth Based Smart Sensor NetworksDocument21 pagesSeminar Report Bluetooth Based Smart Sensor NetworksVivek Singh100% (1)

- Artificial PassengerDocument38 pagesArtificial PassengerAkshay Agrawal57% (7)

- Smart Blind Stick Helps Visually Impaired Navigate SafelyDocument12 pagesSmart Blind Stick Helps Visually Impaired Navigate SafelyAdarsh Chintu100% (2)

- Final Intrenship ReportDocument32 pagesFinal Intrenship Report046 KISHORE33% (3)

- Iot Mini Project MainDocument19 pagesIot Mini Project MainNikesh niki100% (1)

- Touchless Touch Screen Tech SeminarDocument11 pagesTouchless Touch Screen Tech SeminarShreya SinghNo ratings yet

- Technical Seminar Report On Extended RealityDocument30 pagesTechnical Seminar Report On Extended Reality1HK17CS102 NEHA MITTAL100% (3)

- MAD Lab Mini ProjectDocument17 pagesMAD Lab Mini Projectsantosh kumar100% (1)

- Image Steganography ProjectDocument5 pagesImage Steganography ProjectRajesh KumarNo ratings yet

- Touchless Touchscreen Seminar ReportDocument31 pagesTouchless Touchscreen Seminar ReportPriyanshu MangalNo ratings yet

- Final Seminar ReportDocument29 pagesFinal Seminar ReportIsmail Rohit RebalNo ratings yet

- Touchless Touchscreen Technology: A SeminarreportonDocument37 pagesTouchless Touchscreen Technology: A SeminarreportonAbhishek YedeNo ratings yet

- Technical Seminar Documentation (1) D8Document42 pagesTechnical Seminar Documentation (1) D8Boddu NikithaNo ratings yet

- Index: Pageno NumberDocument17 pagesIndex: Pageno NumbervidyaNo ratings yet

- Touchless Touch Screen: Submitted in Partial Fulfillment of Requirement For The Award of The Degree ofDocument68 pagesTouchless Touch Screen: Submitted in Partial Fulfillment of Requirement For The Award of The Degree ofpavan kumarNo ratings yet

- Touchless Touchscreen Seminar by Kayode Damilola SamsonDocument25 pagesTouchless Touchscreen Seminar by Kayode Damilola SamsonBobby ZackNo ratings yet

- Akhil MainDocument26 pagesAkhil Mainibrahim mohammedNo ratings yet

- Specifications Sheet ReddyDocument4 pagesSpecifications Sheet ReddyHenry CruzNo ratings yet

- Synopsis - AVR Based Realtime Online Scada With Smart Electrical Grid Automation Using Ethernet 2016Document19 pagesSynopsis - AVR Based Realtime Online Scada With Smart Electrical Grid Automation Using Ethernet 2016AmAnDeepSinghNo ratings yet

- Gerovital anti-aging skin care product guideDocument10 pagesGerovital anti-aging skin care product guideכרמן גאורגיהNo ratings yet

- Fund. of EnterpreneurshipDocument31 pagesFund. of EnterpreneurshipVarun LalwaniNo ratings yet

- Presentation 123Document13 pagesPresentation 123Harishitha ManivannanNo ratings yet

- Elements of Plane and Spherical Trigonometry With Numerous Practical Problems - Horatio N. RobinsonDocument228 pagesElements of Plane and Spherical Trigonometry With Numerous Practical Problems - Horatio N. RobinsonjorgeNo ratings yet

- 2 - Alaska - WorksheetsDocument7 pages2 - Alaska - WorksheetsTamni MajmuniNo ratings yet

- المحاضرة الرابعة المقرر انظمة اتصالات 2Document31 pagesالمحاضرة الرابعة المقرر انظمة اتصالات 2ibrahimNo ratings yet

- Physics SyllabusDocument85 pagesPhysics Syllabusalex demskoyNo ratings yet

- NitrocelluloseDocument7 pagesNitrocellulosejumpupdnbdjNo ratings yet

- Vishwabhanu Oct '18 - Jan '19Document26 pagesVishwabhanu Oct '18 - Jan '19vedicvision99100% (3)

- Buddhism Beyond ReligionDocument7 pagesBuddhism Beyond ReligionCarlos A SanchesNo ratings yet

- Dahua Pfa130 e Korisnicko Uputstvo EngleskiDocument5 pagesDahua Pfa130 e Korisnicko Uputstvo EngleskiSaša CucakNo ratings yet

- Overlord - Volume 01 - The Undead KingDocument223 pagesOverlord - Volume 01 - The Undead KingPaulo FordheinzNo ratings yet

- Technote Torsional VibrationDocument2 pagesTechnote Torsional Vibrationrob mooijNo ratings yet

- Datasheet Optris XI 410Document2 pagesDatasheet Optris XI 410davidaldamaNo ratings yet

- Personality Types and Character TraitsDocument5 pagesPersonality Types and Character TraitspensleepeNo ratings yet

- Binge-Eating Disorder in AdultsDocument19 pagesBinge-Eating Disorder in AdultsJaimeErGañanNo ratings yet

- LOD Spec 2016 Part I 2016-10-19 PDFDocument207 pagesLOD Spec 2016 Part I 2016-10-19 PDFzakariazulkifli92No ratings yet

- Etoh Membrane Seperation I&ec - 49-p12067 - 2010 - HuangDocument7 pagesEtoh Membrane Seperation I&ec - 49-p12067 - 2010 - HuangHITESHNo ratings yet

- 4 Ideal Models of Engine CyclesDocument23 pages4 Ideal Models of Engine CyclesSyedNo ratings yet

- Common Herbs and Foods Used As Galactogogues PDFDocument4 pagesCommon Herbs and Foods Used As Galactogogues PDFHadi El-MaskuryNo ratings yet

- Gps Vehicle Tracking System ProjectDocument3 pagesGps Vehicle Tracking System ProjectKathrynNo ratings yet

- ME 2141 - Complete ModuleDocument114 pagesME 2141 - Complete ModuleNICOLE ANN MARCELINONo ratings yet

- LOGARITHMS, Exponentials & Logarithms From A-Level Maths TutorDocument1 pageLOGARITHMS, Exponentials & Logarithms From A-Level Maths TutorHorizon 99No ratings yet

- Product CataloguepityDocument270 pagesProduct CataloguepityRaghuRags100% (1)

- EVOLUTION Class Notes PPT-1-10Document10 pagesEVOLUTION Class Notes PPT-1-10ballb1ritikasharmaNo ratings yet

- Flame Configurations in A Lean Premixed Dump Combustor With An Annular Swirling FlowDocument8 pagesFlame Configurations in A Lean Premixed Dump Combustor With An Annular Swirling Flowعبدالله عبدالعاطيNo ratings yet

- The Study of 220 KV Power Substation Equipment DetailsDocument90 pagesThe Study of 220 KV Power Substation Equipment DetailsAman GauravNo ratings yet

- Allen Bradley Power Monitor 3000 Manual PDFDocument356 pagesAllen Bradley Power Monitor 3000 Manual PDFAndrewcaesar100% (1)