An Ova

Uploaded by

chagzgupta

When we have only two samples, we can use the t-test to compare their means, but the t-test becomes unreliable when there are more than two samples. If we compare only two means, the t-test (independent samples) gives the same result as ANOVA.

ANOVA is used to compare the means of more than two samples. This can be understood better with the help of an example.

ONE WAY ANOVA

EXAMPLE: Suppose we want to test the effect of five different exercises. For this, we recruit 20 men and assign each type of exercise to a group of 4 men (5 groups). Their weights are recorded after a few weeks.

We can find out whether the effects of these exercises are significantly different by comparing the weights of the 5 groups of 4 men each.

The example above is a case of one-way balanced ANOVA.

It is called one-way because the effect of only one category is studied, and balanced because the same number of men is assigned to each exercise. The basic idea is to test whether the samples are all alike or not.

WHY NOT MULTIPLE T-TESTS?

As mentioned above, the t-test can only be used to test differences between two means. When there are more than two means, it is possible to compare each mean with each other mean using many t-tests.

But conducting such multiple t-tests inflates the chance of a false positive, because every additional test is another opportunity to find a difference by chance alone. In such circumstances we use ANOVA. Thus, this technique is used whenever an alternative procedure is needed for testing hypotheses concerning means when there are several populations.

ONE WAY AND TWO WAY ANOVA

Now some questions may arise as to which means we are talking about and why variances are analyzed in order to draw conclusions about means. The whole procedure can be made clear with the help of an experiment.

Let us study the effect of fertilizers on the yield of wheat. We apply five fertilizers, each of a different quality, to four plots of wheat each. The yield from each plot is recorded and the difference in yield among the plots is observed. Here, fertilizer is a factor and the different qualities of fertilizer are called levels.

This is a case of one-way or one-factor ANOVA since there is only one factor, fertilizer. We may also be interested in studying the effect of the fertility of the plots of land. In such a case we would have two factors, fertilizer and fertility. This would be a case of two-way or two-factor ANOVA. Similarly, a third factor may be incorporated to give a case of three-way or three-factor ANOVA.

CHANCE CAUSE AND ASSIGNABLE CAUSE

In the above experiment the yields obtained from the plots may be different, and we may be tempted to conclude that the differences exist due to the differences in quality of the fertilizers.

But this difference may also be the result of certain other factors which are attributed to chance and which are beyond human control. This factor is termed 'error'. Thus, the differences or variations that exist within a sample (among plots given the same fertilizer) may be attributed to error.

Thus, estimates of the amount of variation due to assignable causes (or variance between the samples) as well as due to chance causes (or variance within the samples) are obtained separately and compared using an F-test, and conclusions are drawn using the value of F.
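The comparison of between-sample and within-sample variation can be sketched in a few lines of plain Python. This is only an illustrative sketch: the yield figures and the names `yields` and `one_way_anova` are invented for the example and do not come from the text. The resulting F would still have to be compared against an F distribution with the reported degrees of freedom.

```python
# Hypothetical wheat yields: 5 fertilizers (levels), 4 plots each.
# These numbers are invented purely for illustration.
yields = {
    "A": [20, 21, 23, 24],
    "B": [28, 30, 29, 33],
    "C": [22, 25, 24, 21],
    "D": [31, 29, 32, 28],
    "E": [25, 27, 26, 26],
}


def mean(values):
    return sum(values) / len(values)


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of samples."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    # Assignable causes: group means scattered around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Chance causes: observations scattered around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_ratio = (ss_between / df_between) / (ss_within / df_within)
    return f_ratio, df_between, df_within


f_ratio, df_between, df_within = one_way_anova(list(yields.values()))
print(f"F({df_between}, {df_within}) = {f_ratio:.2f}")
```

With five levels and four plots per level, the degrees of freedom are 4 between samples and 15 within samples, whatever the yields happen to be.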

ASSUMPTIONS

There are four basic assumptions used in ANOVA:

- the expected values of the errors are zero

- the variances of all errors are equal to each other

- the errors are independent

- the errors are normally distributed

Read more: http://www.experiment-resources.com/anova-test.html#ixzz1ciFVUyul

Why not just use the t-test?

The t-test tells us if the variation between two groups is "significant". Why not just do t-tests for all the pairs of locations, thus finding, for example, that leaves from median strips are significantly smaller than leaves from the prairie, whereas shade/prairie and shade/median strips are not significantly different? Multiple t-tests are not the answer because as the number of groups grows, the number of needed pair comparisons grows quickly. For 7 groups there are 21 pairs. If we test 21 pairs, we should not be surprised to observe things that happen only 5% of the time. Thus in 21 pairings, a P = .05 for one pair cannot be considered significant. ANOVA puts all the data into one number (F) and gives us one P for the null hypothesis.
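The growth in pairwise comparisons, and what it does to the chance of a spurious "significant" result, can be checked with a short Python sketch. The familywise error formula below treats the 21 tests as independent, which is a simplifying assumption of this sketch (pairwise tests on shared groups are not truly independent), and it takes the per-test significance level to be 0.05.

```python
from math import comb

groups = 7
alpha = 0.05  # assumed significance level for each individual t-test

# Number of distinct pairs that can be formed from the groups.
pairs = comb(groups, 2)

# Probability of at least one false positive across all pairs when every
# null hypothesis is true, treating the tests as independent (assumption).
familywise_error = 1 - (1 - alpha) ** pairs

print(pairs)
print(round(familywise_error, 3))
```

Under these assumptions the chance of at least one false positive among the 21 tests is roughly 0.66, far above the nominal 0.05 of any single test.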

- Basic Ideas
  - The Partitioning of Sums of Squares
  - Multi-Factor ANOVA
  - Interaction Effects
- Complex Designs
  - Between-Groups and Repeated Measures
  - Incomplete (Nested) Designs
- Analysis of Covariance (ANCOVA)
  - Fixed Covariates
  - Changing Covariates
- Multivariate Designs: MANOVA/MANCOVA
  - Between-Groups Designs
  - Repeated Measures Designs
  - Sum Scores versus MANOVA
- Contrast Analysis and Post hoc Tests
  - Why Compare Individual Sets of Means?
  - Contrast Analysis
  - Post hoc Comparisons
- Assumptions and Effects of Violating Assumptions
  - Deviation from Normal Distribution
  - Homogeneity of Variances
  - Homogeneity of Variances and Covariances
  - Sphericity and Compound Symmetry
- Methods for Analysis of Variance

A general introduction to ANOVA and a discussion of the general topics in the analysis of variance techniques, including repeated measures designs, ANCOVA, MANOVA, unbalanced and incomplete designs, contrast effects, post-hoc comparisons, assumptions, etc. For related information, see also Variance Components (topics related to estimation of variance components in mixed model designs), Experimental Design/DOE (topics related to specialized applications of ANOVA in industrial settings), and Repeatability and Reproducibility Analysis (topics related to specialized designs for evaluating the reliability and precision of measurement systems).

See also General Linear Models and General Regression Models; to analyze nonlinear models, see Generalized Linear Models.

Basic Ideas

The Purpose of Analysis of Variance

In general, the purpose of analysis of variance (ANOVA) is to test for significant differences between means. Elementary Concepts provides a brief introduction to the basics of statistical significance testing. If we are only comparing two means, ANOVA will produce the same results as the t test for independent samples (if we are comparing two different groups of cases or observations) or the t test for dependent samples (if we are comparing two variables in one set of cases or observations). If you are not familiar with these tests, you may want to read Basic Statistics and Tables.

Why the name analysis of variance? It may seem odd that a procedure that compares means is called analysis of variance. However, this name is derived from the fact that in order to test for statistical significance between means, we are actually comparing (i.e., analyzing) variances.


See also Methods for Analysis of Variance, Variance Components and Mixed Model ANOVA/ANCOVA, and Experimental Design (DOE).

The Partitioning of Sums of Squares

At the heart oI ANOVA is the Iact that variances can be divided, that is, partitioned.

Remember that the variance is computed as the sum oI squared deviations Irom the overall

mean, divided by n-1 (sample size minus one). Thus, given a certain n, the variance is a

Iunction oI the sums oI (deviation) squares, or SS Ior short. Partitioning oI variance works as

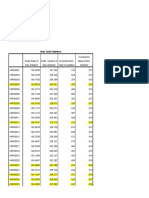

Iollows. Consider this data set:

| | Group 1 | Group 2 |
|---|---|---|
| Observation 1 | 2 | 6 |
| Observation 2 | 3 | 7 |
| Observation 3 | 1 | 5 |
| Mean | 2 | 6 |
| Sums of Squares (SS) | 2 | 2 |

| Overall Mean | Total Sums of Squares |
|---|---|
| 4 | 28 |

The means for the two groups are quite different (2 and 6, respectively). The sums of squares within each group are equal to 2. Adding them together, we get 4. If we now repeat these computations ignoring group membership, that is, if we compute the total SS based on the overall mean, we get the number 28. In other words, computing the variance (sums of squares) based on the within-group variability yields a much smaller estimate of variance than computing it based on the total variability (the overall mean). The reason for this in the above example is, of course, that there is a large difference between means, and it is this difference that accounts for the difference in the SS. In fact, if we were to perform an ANOVA on the above data, we would get the following result:

MAIN EFFECT

| | SS | df | MS | F | p |
|---|---|---|---|---|---|
| Effect | 24.0 | 1 | 24.0 | 24.0 | .008 |
| Error | 4.0 | 4 | 1.0 | | |

As can be seen in the above table, the total SS (28) was partitioned into the SS due to within-group variability (2+2=4) and variability due to differences between means (28-(2+2)=24).
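The partition can be verified by hand with a small Python sketch. It takes the third observation in Group 2 to be 5, the value consistent with the stated group mean of 6 and within-group SS of 2; the helper names `mean` and `sum_of_squares` are chosen for this example only.

```python
group_1 = [2, 3, 1]
group_2 = [6, 7, 5]


def mean(values):
    return sum(values) / len(values)


def sum_of_squares(values, center):
    """Sum of squared deviations of the values from a given center."""
    return sum((x - center) ** 2 for x in values)


overall_mean = mean(group_1 + group_2)

# Total SS ignores group membership and uses the overall mean.
ss_total = sum_of_squares(group_1 + group_2, overall_mean)
# Within-group (error) SS uses each group's own mean.
ss_within = (sum_of_squares(group_1, mean(group_1))
             + sum_of_squares(group_2, mean(group_2)))
# What remains is the SS due to the difference between the group means.
ss_effect = ss_total - ss_within

df_effect = 2 - 1  # number of groups minus one
df_error = 6 - 2   # number of observations minus number of groups
ms_effect = ss_effect / df_effect
ms_error = ss_within / df_error
f_ratio = ms_effect / ms_error

print(ss_total, ss_within, ss_effect, f_ratio)
```

The sketch reproduces the SS, df, MS, and F columns of the table; it does not compute the p value, which requires the F distribution.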

SS Error and SS Effect. The within-group variability (SS) is usually referred to as Error variance. This term denotes the fact that we cannot readily explain or account for it in the current design. However, the SS Effect we can explain. Namely, it is due to the differences in means between the groups. Put another way, group membership explains this variability because we know that it is due to the differences in means.

Significance testing. The basic idea of statistical significance testing is discussed in Elementary Concepts, which also explains why very many statistical tests represent ratios of explained to unexplained variability. ANOVA is a good example of this. Here, we base this test on a comparison of the variance due to the between-groups variability (called Mean Square Effect, or MS_effect) with the within-group variability (called Mean Square Error, or MS_error; this term was first used by Edgeworth, 1885). Under the null hypothesis (that there are no mean differences between groups in the population), we would still expect some minor random fluctuation in the means for the two groups when taking small samples (as in our example). Therefore, under the null hypothesis, the variance estimated based on within-group variability should be about the same as the variance due to between-groups variability. We can compare those two estimates of variance via the F test (see also F Distribution), which tests whether the ratio of the two variance estimates is significantly greater than 1. In our example above, that test is highly significant, and we would in fact conclude that the means for the two groups are significantly different from each other.

Summary of the basic logic of ANOVA. To summarize the discussion up to this point, the purpose of analysis of variance is to test differences in means (for groups or variables) for statistical significance. This is accomplished by analyzing the variance, that is, by partitioning the total variance into the component that is due to true random error (i.e., within-group SS) and the components that are due to differences between means. These latter variance components are then tested for statistical significance, and, if significant, we reject the null hypothesis of no differences between means and accept the alternative hypothesis that the means (in the population) are different from each other.

Dependent and independent variables. The variables that are measured (e.g., a test score) are called dependent variables. The variables that are manipulated or controlled (e.g., a teaching method or some other criterion used to divide observations into groups that are compared) are called factors or independent variables. For more information on this important distinction, refer to Elementary Concepts.

Multi-Factor ANOVA

In the simple example above, it may have occurred to you that we could have simply computed a t test for independent samples to arrive at the same conclusion. And, indeed, we would get the identical result if we were to compare the two groups using this test. However, ANOVA is a much more flexible and powerful technique that can be applied to much more complex research issues.
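The equivalence with the independent-samples t test can be illustrated on the two-group data used earlier (observations 2, 3, 1 and 6, 7, 5). The sketch below computes the pooled-variance t statistic by hand; for two groups, its square equals the ANOVA F ratio. The variable and helper names are assumptions of this example.

```python
from math import sqrt

group_1 = [2, 3, 1]
group_2 = [6, 7, 5]


def mean(values):
    return sum(values) / len(values)


def sum_sq_dev(values):
    """Sum of squared deviations from the sample's own mean."""
    m = mean(values)
    return sum((x - m) ** 2 for x in values)


n1, n2 = len(group_1), len(group_2)

# Pooled variance: combined within-group SS over combined degrees of freedom.
pooled_var = (sum_sq_dev(group_1) + sum_sq_dev(group_2)) / (n1 + n2 - 2)

# Independent-samples t statistic with equal variances assumed.
t_stat = (mean(group_2) - mean(group_1)) / sqrt(pooled_var * (1 / n1 + 1 / n2))

print(t_stat, t_stat ** 2)
```

Squaring the t statistic gives 24 (up to floating-point rounding), the same value as the F ratio in the ANOVA table, so both tests lead to the same p value for these data.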

Multiple factors. The world is complex and multivariate in nature, and instances when a single variable completely explains a phenomenon are rare. For example, when trying to explore how to grow a bigger tomato, we would need to consider factors that have to do with the plants' genetic makeup, soil conditions, lighting, temperature, etc. Thus, in a typical experiment, many factors are taken into account. One important reason for using ANOVA methods rather than multiple two-group studies analyzed via t tests is that the former method is more efficient, and with fewer observations we can gain more information. Let's expand on this statement.

Controlling for factors. Suppose that in the above two-group example we introduce another grouping factor, for example, Gender. Imagine that in each group we have 3 males and 3 females. We could summarize this design in a 2 by 2 table:

| | Experimental Group 1 | Experimental Group 2 |
|---|---|---|
| Males | 2 | 6 |
| | 3 | 7 |
| | 1 | 5 |
| Mean | 2 | 6 |
| Females | 4 | 8 |
| | 5 | 9 |
| | 3 | 7 |
| Mean | 4 | 8 |

Before performing any computations, it appears that we can partition the total variance into at least 3 sources: (1) error (within-group) variability, (2) variability due to experimental group membership, and (3) variability due to gender. (Note that there is an additional source, interaction, that we will discuss shortly.) What would have happened had we not included gender as a factor in the study but rather computed a simple t test? If we compute the SS ignoring the gender factor (use the within-group means ignoring or collapsing across gender; the result is SS=10+10=20), we will see that the resulting within-group SS is larger than it is when we include gender (use the within-group, within-gender means to compute those SS; they will be equal to 2 in each group, thus the combined SS-within is equal to 2+2+2+2=8). This difference is due to the fact that the means for males are systematically lower than those for females, and this difference in means adds variability if we ignore this factor. Controlling for error variance increases the sensitivity (power) of a test. This example demonstrates another principle of ANOVA that makes it preferable over simple two-group t test studies: in ANOVA we can test each factor while controlling for all others. This is actually the reason why ANOVA is more statistically powerful (i.e., we need fewer observations to find a significant effect) than the simple t test.
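The two within-group sums of squares quoted above (20 when gender is ignored, 8 when it is included) can be reproduced with a short Python sketch. The cell values are read from the 2 by 2 design as 2, 3, 1 and 6, 7, 5 for males and 4, 5, 3 and 8, 9, 7 for females, which are the values consistent with the stated cell means; the dictionary layout and helper names are assumptions of this example.

```python
data = {
    ("group 1", "male"): [2, 3, 1],
    ("group 1", "female"): [4, 5, 3],
    ("group 2", "male"): [6, 7, 5],
    ("group 2", "female"): [8, 9, 7],
}


def sum_sq_dev(values):
    """Sum of squared deviations from the sample's own mean."""
    m = sum(values) / len(values)
    return sum((x - m) ** 2 for x in values)


# Error SS when gender is a factor: deviations from each cell mean.
ss_within_with_gender = sum(sum_sq_dev(v) for v in data.values())

# Error SS when gender is ignored: pool both genders inside each group,
# then take deviations from the pooled group mean.
pooled = {}
for (group, _gender), values in data.items():
    pooled.setdefault(group, []).extend(values)
ss_within_ignoring_gender = sum(sum_sq_dev(v) for v in pooled.values())

print(ss_within_with_gender, ss_within_ignoring_gender)
```

The gap between the two figures is the variability that gender accounts for; leaving gender out pushes that variability into the error term.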

Interaction Effects

There is another advantage of ANOVA over simple t-tests: with ANOVA, we can detect interaction effects between variables and, therefore, test more complex hypotheses about reality. Let's consider another example to illustrate this point. (The term interaction was first used by Fisher, 1926.)

Main effects, two-way interaction. Imagine that we have a sample of highly achievement-oriented students and another of achievement "avoiders." We now create two random halves in each sample, and give one half of each sample a challenging test, the other an easy test. We measure how hard the students work on the test. The means of this (fictitious) study are as follows:

| | Achievement-oriented | Achievement-avoiders |
|---|---|---|
| Challenging Test | 10 | 5 |
| Easy Test | 5 | 10 |

How can we summarize these results? Is it appropriate to conclude that (1) challenging tests make students work harder, (2) achievement-oriented students work harder than achievement-avoiders? Neither of these statements captures the essence of this clearly systematic pattern of means. The appropriate way to summarize the result would be to say that challenging tests make only achievement-oriented students work harder, while easy tests make only achievement-avoiders work harder. In other words, the type of achievement orientation and test difficulty interact in their effect on effort; specifically, this is an example of a two-way interaction between achievement orientation and test difficulty. Note that statements 1 and 2 above describe so-called main effects.
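A small Python sketch makes the distinction between main effects and the interaction concrete. It reads the four cell means of the study as 10 and 5 for the challenging test and 5 and 10 for the easy test; the contrast used here (a difference of differences) is one common way to quantify a 2 by 2 interaction, and the names are assumptions of this example.

```python
# Cell means keyed by (test difficulty, student type).
means = {
    ("challenging", "oriented"): 10,
    ("challenging", "avoiders"): 5,
    ("easy", "oriented"): 5,
    ("easy", "avoiders"): 10,
}


def marginal_mean(position, level):
    """Average the cell means over the other factor."""
    cells = [m for key, m in means.items() if key[position] == level]
    return sum(cells) / len(cells)


# Main effects: differences between marginal means of each factor.
main_effect_test = marginal_mean(0, "challenging") - marginal_mean(0, "easy")
main_effect_type = marginal_mean(1, "oriented") - marginal_mean(1, "avoiders")

# Interaction: effect of test difficulty for one student type
# minus the same effect for the other student type.
effect_oriented = means[("challenging", "oriented")] - means[("easy", "oriented")]
effect_avoiders = means[("challenging", "avoiders")] - means[("easy", "avoiders")]
interaction = effect_oriented - effect_avoiders

print(main_effect_test, main_effect_type, interaction)
```

Both main effects come out as zero while the interaction contrast is large, which is why neither statement (1) nor statement (2) describes this pattern of means.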

Higher order interactions. While the previous two-way interaction can be put into words relatively easily, higher order interactions are increasingly difficult to verbalize. Imagine that we had included the factor Gender in the achievement study above, and we had obtained the following pattern of means:

Females

| | Achievement-oriented | Achievement-avoiders |
|---|---|---|
| Challenging Test | 10 | 5 |
| Easy Test | 5 | 10 |

Males

| | Achievement-oriented | Achievement-avoiders |
|---|---|---|
| Challenging Test | 1 | 6 |
| Easy Test | 6 | 1 |

How can we now summarize the results of our study? Graphs of means for all effects greatly facilitate the interpretation of complex effects. The pattern shown in the table above (and in the graph below) represents a three-way interaction between factors.

Thus, we may summarize this pattern by saying that for females there is a two-way interaction between achievement-orientation type and test difficulty: achievement-oriented females work harder on challenging tests than on easy tests, while achievement-avoiding females work harder on easy tests than on difficult tests. For males, this interaction is reversed. As you can see, the description of the interaction has become much more involved.
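The reversal can be expressed numerically by computing the two-way interaction contrast separately for each gender and then comparing the two. The sketch below reads the cell means as 10, 5, 5, 10 for females and 1, 6, 6, 1 for males; the nested-dictionary layout and the function name `two_way_contrast` are assumptions of this example.

```python
means = {
    "females": {
        "challenging": {"oriented": 10, "avoiders": 5},
        "easy": {"oriented": 5, "avoiders": 10},
    },
    "males": {
        "challenging": {"oriented": 1, "avoiders": 6},
        "easy": {"oriented": 6, "avoiders": 1},
    },
}


def two_way_contrast(table):
    """Difficulty effect for oriented students minus that for avoiders."""
    effect_oriented = table["challenging"]["oriented"] - table["easy"]["oriented"]
    effect_avoiders = table["challenging"]["avoiders"] - table["easy"]["avoiders"]
    return effect_oriented - effect_avoiders


contrast_females = two_way_contrast(means["females"])
contrast_males = two_way_contrast(means["males"])

# A three-way interaction means the two-way contrast differs by gender.
three_way_contrast = contrast_females - contrast_males

print(contrast_females, contrast_males, three_way_contrast)
```

The two-way contrast has the same size but opposite sign for females and males, so the difference between them is nonzero; that nonzero difference is what the three-way interaction describes.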

A general way to express interactions. A general way to express all interactions is to say that an effect is modified (qualified) by another effect. Let's try this with the two-way interaction above. The main effect for test difficulty is modified by achievement orientation. For the three-way interaction in the previous paragraph, we can summarize that the two-way interaction between test difficulty and achievement orientation is modified (qualified) by Gender. If we have a four-way interaction, we can say that the three-way interaction is modified by the fourth variable, that is, that there are different types of interactions in the different levels of the fourth variable. As it turns out, in many areas of research five- or higher-way interactions are not that uncommon.
