Characteristics of A Good Psychological Test-Santos

Uploaded by Blessed Coloma Santos

What is the difference?
A. Criterion-related validity
B. Construct validity
C. Content validity
D. Face validity

What are the factors that influence test validity and reliability?

Validity is the degree to which a test actually measures what it purports to measure. It provides a direct check of how well the test fulfills its function. Example: the PUPCET is checked against external criteria such as teachers' ratings, success or failure, grades, or training.

When a test yields consistent scores but is not useful, it is not valid; and if it is not consistent in whatever it is measuring, it cannot be valid for any purpose.

CONTENT VALIDITY The extent to which the content of a test provides an adequate, representative sample of the conceptual domain it is designed to cover. Tests of achievement and ability are examples of this type. It is established through logical analysis: a careful and critical examination of the test items against the objectives of instruction.
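The logical analysis itself is a matter of expert judgment, but the bookkeeping part (does each instructional objective get its planned share of items?) can be checked mechanically. The sketch below assumes a hypothetical blueprint of objective weights and a hypothetical tagging of each item with the objective it was written for; the names `blueprint`, `item_objectives`, and `coverage_gaps` are illustrative only.

```python
from collections import Counter

# Hypothetical blueprint: planned share of items for each instructional objective
blueprint = {"recall": 0.30, "comprehension": 0.40, "application": 0.30}

# Hypothetical test: the objective each of the 10 items was written to measure
item_objectives = [
    "recall", "recall", "recall", "recall",
    "comprehension", "comprehension", "comprehension",
    "application", "application", "application",
]

def coverage_gaps(blueprint, item_objectives, tolerance=0.05):
    """Return objectives whose actual share of items differs from the planned
    share by more than `tolerance` (positive = over-represented)."""
    counts = Counter(item_objectives)
    total = len(item_objectives)
    gaps = {}
    for objective, planned in blueprint.items():
        actual = counts[objective] / total
        if abs(actual - planned) > tolerance:
            gaps[objective] = round(actual - planned, 2)
    # Items written for objectives that the blueprint does not cover at all
    for objective in counts.keys() - blueprint.keys():
        gaps[objective] = round(counts[objective] / total, 2)
    return gaps

print(coverage_gaps(blueprint, item_objectives))
# prints {'recall': 0.1, 'comprehension': -0.1}
```

A flagged gap only tells the reviewer where to look; whether an item really measures its objective still has to be judged by reading it.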

CRITERION-RELATED VALIDITY Is established statistically, by correlating the set of scores produced by a test with some other predictor or with data collected by another test or external measure. This evidence tells us how well a test corresponds with a particular criterion; it is provided by high correlations between the test and well-defined criterion measures.

A. Predictive validity evidence tells us about the forecasting function of a test. The criterion evidence is obtained in the future, generally months or years after the test scores are known. Example: the SAT, as a college admission test, relies on predictive evidence; it forecasts how well high school students will do in their academic performance.

B. Concurrent-related evidence comes from assessing the simultaneous relationship between the test and a criterion such as school performance; in industrial settings, job samples are correlated with performance on the job. It is appropriate for licensure examinations, achievement tests, and diagnostic tests.

CONSTRUCT VALIDITY Is determined by analyzing the psychological qualities, traits, or factors measured by a test. Psychological constructs include intelligence, mechanical ability, perceptual ability, and critical thinking. Construct is defined by showing relationship between test

D. T. Campbell and Fiske (Kaplan, 2001) introduced an important set of logical considerations for establishing evidence of construct validity:
A. Convergent evidence: the measure correlates well with other tests believed to measure the same construct.
B. Discriminant evidence: the measure does not represent a construct other than the one for which it was devised.

FACE VALIDITY Is the extent to which items on a test appear to be meaningful and relevant. It is merely the appearance that a measure has validity.

A. Appropriateness of test items
B. Directions
C. Reading vocabulary and sentence structure
D. Difficulty of items
E. Construction of test items: no ambiguous or leading items
G. Length of the test: sufficient length
H. Arrangement of items: no patterns such as T-T, F-F or A-B, A-B, C-D, C-D, and so on
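For factor H, a test developer can screen an answer key for immediately repeated runs such as "AB AB" or "TTFF TTFF". The function below is a hypothetical, crude screen: the name `repeated_blocks` and the block-size limits are assumptions. A randomly arranged key will occasionally trip it, so a flag is a prompt to review the arrangement, not proof of a pattern.

```python
def repeated_blocks(answer_key, min_block=2, max_block=4):
    """Flag places where a run of answers is immediately repeated,
    e.g. 'AB' followed by 'AB', or 'TTFF' followed by 'TTFF'.
    Returns a list of (start_index, block) pairs."""
    flags = []
    for size in range(min_block, max_block + 1):
        for start in range(len(answer_key) - 2 * size + 1):
            block = answer_key[start:start + size]
            # Compare the block with the run that immediately follows it
            if block == answer_key[start + size:start + 2 * size]:
                flags.append((start, block))
    return flags

print(repeated_blocks("TTFFTTFF"))  # [(0, 'TTFF')]
print(repeated_blocks("ABABCDCD"))  # [(0, 'AB'), (4, 'CD')]
```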

Reliability refers to the consistency of scores obtained by the same person when re-examined with the same test on different occasions.

Score inconsistency: Scoring should be objective; provide a scoring key so that correctors' biases do not affect examinees' scores.

Limited sampling of behavior: This happens with the accidental inclusion of certain items and exclusion of others.

Instability of examinees' performance: Fatigue, practice, and examinees' mood are among the factors that may affect performance.

A. Measures of stability: often called the test-retest estimate of reliability, obtained by administering a test to a group of individuals, re-administering the same test to the same individuals at a later date, and correlating the two sets of scores. The correlation must be high; .80 is a minimum figure. To be trustworthy, the two administrations should be separated by at least a three-month gap, and the number of subjects should be large.

B. Measures of equivalence: the equivalent-forms estimate of reliability is obtained by giving two forms of a test (with equal content, means, and variances) to the same group of individuals on the same day and correlating the results. This lets us generalize a person's score to what he or she would receive on a similar test with different questions.

Measures of equivalence and stability: These can be obtained by giving one form of the test and, after a period of time, administering the other form and correlating the results. This procedure allows for both changes in scores due to trait instability and changes in scores due to item specificity.

Measures of internal consistency: To be reliable, a test must have high internal consistency. Coefficient alpha is the best index of internal consistency; a simple approximation to alpha is split-half reliability.
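Coefficient alpha compares the sum of the item variances with the variance of examinees' total scores: alpha = k/(k-1) * (1 - sum of item variances / total-score variance), where k is the number of items. The response matrix below is hypothetical; population variances are used for both items and totals, so the n versus n-1 choice cancels out.

```python
from statistics import pvariance

def cronbach_alpha(item_scores):
    """Coefficient alpha. item_scores: one row per examinee, one column per item."""
    k = len(item_scores[0])                       # number of items
    items = list(zip(*item_scores))               # transpose: one tuple per item
    totals = [sum(row) for row in item_scores]    # each examinee's total score
    item_var_sum = sum(pvariance(col) for col in items)
    return k / (k - 1) * (1 - item_var_sum / pvariance(totals))

# Hypothetical right (1) / wrong (0) responses: 5 examinees x 4 items
responses = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
print(f"coefficient alpha = {cronbach_alpha(responses):.2f}")  # 0.80
```

For right/wrong items like these, alpha reduces to the Kuder-Richardson formula 20 (KR-20).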

Split-half Is a method of estimating reliability that is theoretically the same as the equivalent-forms method. It is ordinarily considered a measure of internal consistency because the two equivalent forms are contained within a single test. Only one test is administered: in estimating reliability, one obtains a sub-score for each of the two halves, correlates the two sub-scores, and corrects that correlation with the Spearman-Brown prophecy formula.

Length of the test

Difficulty

Objectivity

The administration, scoring, and interpretation of scores are objective insofar as they are independent of the subjective judgment of the particular examiner. Objectivity can be achieved if a qualified test examiner who is competent and responsible handles the test. A major aspect of an objective test is the determination of the difficulty level of an item, or of a whole test, based on objective, empirical procedures employed by the test developer.
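One widely used empirical difficulty index is the proportion of examinees who answer an item correctly (p); averaging the item p values gives a difficulty figure for the whole test. The text does not name a specific procedure, so treat this choice, the helper name `difficulty_index`, and the response data as illustrative assumptions.

```python
from statistics import mean

def difficulty_index(item_responses):
    """Proportion of examinees answering the item correctly (1 = right, 0 = wrong).
    A higher p means an easier item."""
    return sum(item_responses) / len(item_responses)

# Hypothetical responses of 10 examinees to three items
items = {
    "item 1": [1, 1, 1, 1, 1, 1, 1, 1, 1, 0],
    "item 2": [1, 0, 1, 1, 0, 1, 0, 1, 0, 1],
    "item 3": [0, 0, 1, 0, 0, 0, 0, 1, 0, 0],
}

p_values = {name: difficulty_index(resp) for name, resp in items.items()}
for name, p in p_values.items():
    print(f"{name}: p = {p:.2f}")

# Mean item difficulty as a simple index for the whole test
test_difficulty = mean(p_values.values())
print(f"whole test: mean p = {test_difficulty:.2f}")
```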

Thank You!

You might also like

- You Have To See These Pictures. IDocument20 pagesYou Have To See These Pictures. Iapi-27439927No ratings yet

- DID YOU KNOW by AEM.Document21 pagesDID YOU KNOW by AEM.Blessed Coloma SantosNo ratings yet

- Personality Test Development: Introduction To Clinical Psychology Discussion Section #8 and #9Document41 pagesPersonality Test Development: Introduction To Clinical Psychology Discussion Section #8 and #9Blessed Coloma SantosNo ratings yet

- Coffee Chats On Money - 1Document25 pagesCoffee Chats On Money - 1Blessed Coloma SantosNo ratings yet

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (890)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Measurement System Analysis LabDocument32 pagesMeasurement System Analysis LabAnonymous 3tOWlL6L0U100% (1)

- Slope Monitoring Using Total StationDocument13 pagesSlope Monitoring Using Total StationIamEm B. MoNo ratings yet

- Exercise CH 08Document10 pagesExercise CH 08Nurshuhada NordinNo ratings yet

- (Analysis of Variance) : AnovaDocument22 pages(Analysis of Variance) : AnovaJoanne Bernadette IcaroNo ratings yet

- COR 006 ReviewerDocument5 pagesCOR 006 ReviewerMargie MarklandNo ratings yet

- About Digital SAT ExamDocument13 pagesAbout Digital SAT Examvanshika.testprepkartNo ratings yet

- Npar Tests: Descriptive StatisticsDocument58 pagesNpar Tests: Descriptive StatisticssuzanalucasNo ratings yet

- DADM Assignment 2 - NYReformDocument14 pagesDADM Assignment 2 - NYReformMohammed RafiqNo ratings yet

- UGCDocument217 pagesUGCkumkumjoNo ratings yet

- t-Test AnalysisDocument22 pagest-Test AnalysisMj EndozoNo ratings yet

- Pavzi Media Model Papers Download for Competitive ExamsDocument15 pagesPavzi Media Model Papers Download for Competitive ExamsGeethanjali.p100% (1)

- ISTQB Exam-Structure-Tables v1 6Document22 pagesISTQB Exam-Structure-Tables v1 6Mayank GaurNo ratings yet

- Assignment 2 QuestionDocument4 pagesAssignment 2 QuestionYanaAlihadNo ratings yet

- Armed Force Test Preparation ResourceDocument7 pagesArmed Force Test Preparation ResourceJunaid AhmadNo ratings yet

- Seven Questions About The WAIS-III Regarding Differences in Abilities Across The 16 To 89 Year Life SpanDocument27 pagesSeven Questions About The WAIS-III Regarding Differences in Abilities Across The 16 To 89 Year Life SpanfernandakNo ratings yet

- Hypothesis Testing Using P-Value ApproachDocument16 pagesHypothesis Testing Using P-Value ApproachB12 Bundang Klarence Timothy P.No ratings yet

- Module 3 - Lesson 4Document112 pagesModule 3 - Lesson 4ProttoyNo ratings yet

- Chisquare in Spss 1209705384088874 8Document10 pagesChisquare in Spss 1209705384088874 8Jayashree ChatterjeeNo ratings yet

- Elementary Statistics A Step by Step Approach 7th Edition Bluman Test BankDocument14 pagesElementary Statistics A Step by Step Approach 7th Edition Bluman Test Bankfelicitycurtis9fhmt7100% (31)

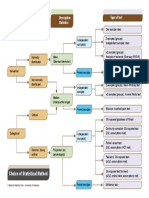

- Choice of Statistical Method Flow DiagramDocument1 pageChoice of Statistical Method Flow DiagramAhmed Adel RagabNo ratings yet

- Ostrovok Ua030: Key For Schools Writing Candidate Answer Sheet For Parts 6 and 7Document2 pagesOstrovok Ua030: Key For Schools Writing Candidate Answer Sheet For Parts 6 and 7Nataliya TunikNo ratings yet

- Agenda Lashista Chicas PesadasDocument100 pagesAgenda Lashista Chicas PesadasSHARON ROQUENo ratings yet

- SPSS Assignment 3 1.: Paired Samples TestDocument2 pagesSPSS Assignment 3 1.: Paired Samples TestDaniel A Pulido RNo ratings yet

- One-Sample Kolmogorov-Smirnov TestDocument2 pagesOne-Sample Kolmogorov-Smirnov TestAnanta MiaNo ratings yet

- 5 Test of Population Variance WorkbookDocument5 pages5 Test of Population Variance WorkbookJohn SmithNo ratings yet

- Implementasi ETL (Extract, Transform, Load) Pada Data: Warehouse Penjualan Menggunakan Tools PentahoDocument8 pagesImplementasi ETL (Extract, Transform, Load) Pada Data: Warehouse Penjualan Menggunakan Tools PentahoabdulhafidzramadhanNo ratings yet

- Chapter 10. Two-Sample TestsDocument51 pagesChapter 10. Two-Sample Tests1502 ibNo ratings yet

- Statistics Assignment 3 SummaryDocument8 pagesStatistics Assignment 3 SummaryAnonymous na314kKjOANo ratings yet

- Significance TestsDocument43 pagesSignificance TestsTruong Giang VoNo ratings yet

- Doon Indian Defence Academy Nda Gat 2020 Question Paper Answer KeyDocument20 pagesDoon Indian Defence Academy Nda Gat 2020 Question Paper Answer KeyStylish SarathiNo ratings yet