Professional Documents

Culture Documents

405 Econometrics: Domodar N. Gujarati

Uploaded by

Kashif KhurshidOriginal Description:

Original Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

405 Econometrics: Domodar N. Gujarati

Uploaded by

Kashif KhurshidCopyright:

Available Formats

405 ECONOMETRICS

Chapter # 4: CLASSICAL NORMAL LINEAR

REGRESSION MODEL (CNLRM)

Domodar N. Gujarati

Prof. M. El-Sakka

Dept of Economics: Kuwait University

the classical theory of statistical inference consists of two branches, namely,

estimation and hypothesis testing. We have thus far covered the topic of

estimation.

Under the assumptions of the CLRM, we were able to show that the

estimators of these parameters, 1, 2, and 2, satisfy several desirable

statistical properties, such as unbiasedness, minimum variance, etc. Note

that, since these are estimators, their values will change from sample to

sample, they are random variables.

In regression analysis our objective is not only to estimate the SRF, but also

to use it to draw inferences about the PRF. Thus, we would like to find out

how close 1 is to the true 1 or how close 2 is to the true 2. since 1, 2,

and 2 are random variables, we need to find out their probability

distributions, otherwise, we will not be able to relate them to their true values.

THE PROBABILITY DISTRIBUTION OF DISTURBANCES ui

consider 2. As showed in Appendix 3A.2,

2 = kiYi

(4.1.1)

Where ki = xi/ xi2. But since the Xs are assumed fixed, Eq. (4.1.1) shows

that 2 is a linear function of Yi, which is random by assumption. But since

Yi = 1 + 2Xi + ui , we can write (4.1.1) as

2 = ki(1 + 2Xi + ui)

(4.1.2)

Because ki, the betas, and Xi are all fixed, 2 is ultimately a linear function of

ui, which is random by assumption. Therefore, the probability distribution of

2 (and also of 1) will depend on the assumption made about the

probability distribution of ui .

OLS does not make any assumption about the probabilistic nature of ui.

This void can be filled if we are willing to assume that the us follow some

probability distribution.

THE NORMALITY ASSUMPTION FOR ui

The classical normal linear regression model assumes that each ui is

distributed normally with

Mean:

E(ui) = 0

(4.2.1)

Variance:

E[ui E(ui)]2 = Eu2i = 2

(4.2.2)

cov (ui, uj):

The assumptions given above can be more compactly stated as

ui N(0, 2)

(4.2.4)

The terms in the parentheses are the mean and the variance. ui and uj are

not only uncorrelated but are also independently distributed. Therefore, we

can write (4.2.4) as

ui NID(0, 2)

(4.2.5)

where NID stands for normally and independently distributed.

E{[(ui E(ui)][uj E(uj )]} = E(ui uj ) = 0 i j

(4.2.3)

Why the Normality Assumption? There are several reasons:

1. ui represent the influence omitted variables, we hope that the influence of

these omitted variables is small and at best random. By the central limit

theorem (CLT) of statistics, it can be shown that if there are a large number

of independent and identically distributed random variables, then, the

distribution of their sum tends to a normal distribution as the number of such

variables increase indefinitely.

2. A variant of the CLT states that, even if the number of variables is not

very large or if these variables are not strictly independent, their sum may

still be normally distributed.

3. With the normality assumption, the probability distributions of OLS

estimators can be easily derived because one property of the normal

distribution is that any linear function of normally distributed variables is

itself normally distributed. OLS estimators 1 and 2 are linear functions of

ui . Therefore, if ui are normally distributed, so are 1 and 2, which makes

our task of hypothesis testing very straightforward.

4. The normal distribution is a comparatively simple distribution involving

only two parameters (mean and variance).

5. Finally, if we are dealing with a small, or finite, sample size, say data of

less than 100 observations, the normality not only helps us to derive the

exact probability distributions of OLS estimators but also enables us to use

the t, F, and 2 statistical tests for regression models.

PROPERTIES OF OLS ESTIMATORS UNDER THE

NORMALITY ASSUMPTION

With ui follow the normal distribution, OLS estimators have the following

properties;.

1. They are unbiased.

2. They have minimum variance. Combined with 1., this means that they are

minimum-variance unbiased, or efficient estimators.

3. They have consistency; that is, as the sample size increases indefinitely,

the estimators converge to their true population values.

4. 1 (being a linear function of ui) is normally distributed with

Mean:

E(1) = 1

(4.3.1)

var (1):

21 = ( X2i/n x2i)2 = (3.3.3)

(4.3.2)

Or more compactly,

1 N (1, 2 11)

then by the properties of the normal distribution the variable Z, which is

defined as:

Z = (1 1 )/ 1

(4.3.3)

follows the standard normal distribution, that is, a normal distribution with

zero mean and unit ( = 1) variance, or

Z N(0, 1)

5. 2 (being a linear function of ui) is normally distributed with

Mean:

E(2) = 2

(4.3.4)

var (2):

2 2 =2 / x2i = (3.3.1)

(4.3.5)

Or, more compactly,

2 N(2, 22)

Then, as in (4.3.3),

Z = (2 2 )/2

(4.3.6)

also follows the standard normal distribution.

Geometrically, the probability distributions of 1 and 2 are shown in

Figure 4.1.

6. (n 2)( 2/ 2) is distributed as the 2 (chi-square) distribution with (n

2)df.

7. (1, 2) are distributed independently of 2.

8. 1 and 2 have minimum variance in the entire class of unbiased

estimators, whether linear or not. This result, due to Rao, is very powerful

because, unlike the GaussMarkov theorem, it is not restricted to the class

of linear estimators only. Therefore, we can say that the least-squares

estimators are best unbiased estimators (BUE); that is, they have minimum

variance in the entire class of unbiased estimators.

To sum up: The important point to note is that the normality assumption

enables us to derive the probability, or sampling, distributions of 1 and 2

(both normal) and 2 (related to the chi square). This simplifies the task of

establishing confidence intervals and testing (statistical) hypotheses.

In passing, note that, with the assumption that ui N(0, 2), Yi , being a

linear function of ui, is itself normally distributed with the mean and variance

given by

E(Yi)

= 1 + 2Xi

(4.3.7)

var (Yi)

= 2

(4.3.8)

More neatly, we can write

Yi N(1 + 2Xi , 2)

(4.3.9)

You might also like

- Two-Variable Regression Model EstimationDocument66 pagesTwo-Variable Regression Model EstimationKashif KhurshidNo ratings yet

- How To Make A Decision The Analytic Hier PDFDocument18 pagesHow To Make A Decision The Analytic Hier PDFRogelio Lazo ArjonaNo ratings yet

- On January 1 2014 Paxton Company Purchased A 70 Interest PDFDocument1 pageOn January 1 2014 Paxton Company Purchased A 70 Interest PDFDoreenNo ratings yet

- Case study group analyzes ERP implementation challengesDocument2 pagesCase study group analyzes ERP implementation challengesMythes JicaNo ratings yet

- Assignment 1 - Revisi 1 - Keleompok 20Document53 pagesAssignment 1 - Revisi 1 - Keleompok 20Kameliya Hani MillatiNo ratings yet

- ch03 TADocument31 pagesch03 TARyan E YusufNo ratings yet

- Walmart Financial AnalysisDocument3 pagesWalmart Financial AnalysisNaimul KaderNo ratings yet

- Econometrics ch6Document51 pagesEconometrics ch6muhendis_8900No ratings yet

- 06-07. Facility Location ModelsDocument48 pages06-07. Facility Location ModelsMuhamad RidzwanNo ratings yet

- TA MidtestDocument157 pagesTA MidtestSophie Louis LaurentNo ratings yet

- Panera Bread's Success in Fast-Casual IndustryDocument25 pagesPanera Bread's Success in Fast-Casual IndustrySujit Kumar ShahNo ratings yet

- Measurement Theory: Godfrey Hodgson Holmes TarcaDocument27 pagesMeasurement Theory: Godfrey Hodgson Holmes Tarcaarda321No ratings yet

- Gas or Grouse AnswersDocument6 pagesGas or Grouse AnswersSatrio Haryoseno100% (1)

- TASS Management ReportDocument22 pagesTASS Management Reportdwarven444No ratings yet

- 1935510219+edwin Thungari Macpal+ Experiment2Document14 pages1935510219+edwin Thungari Macpal+ Experiment2EDWIN THUNGARINo ratings yet

- DATABASE MANAGEMENT SYSTEMS NORMALIZATION SOLUTIONSDocument26 pagesDATABASE MANAGEMENT SYSTEMS NORMALIZATION SOLUTIONSdeepakNo ratings yet

- Dummy RegDocument68 pagesDummy RegidfisetyaningrumNo ratings yet

- Case Study Questions 2018Document6 pagesCase Study Questions 2018ari0% (2)

- Burbage Limited Sales System ControlsDocument3 pagesBurbage Limited Sales System ControlsYudha Tama BayurindraNo ratings yet

- Discretionary Revenues As A Measure of Earnings ManagementDocument22 pagesDiscretionary Revenues As A Measure of Earnings ManagementmaulidiahNo ratings yet

- Modelling Long-Run Relationship in FinanceDocument82 pagesModelling Long-Run Relationship in Financederghal100% (1)

- Aggregate Loss ModelsDocument11 pagesAggregate Loss ModelskonicalimNo ratings yet

- Black-Scholes Model PDFDocument16 pagesBlack-Scholes Model PDFthe0MummyNo ratings yet

- Discriminant AnalysisDocument9 pagesDiscriminant AnalysisRamachandranNo ratings yet

- Pengantar Ekonometrika TerapanDocument23 pagesPengantar Ekonometrika TerapanAdi LesmanaNo ratings yet

- Chapter 1-Solution To ProblemsDocument7 pagesChapter 1-Solution To ProblemsawaisjinnahNo ratings yet

- Your Personal Class Notes, Videos, Resources, Homework, LabsDocument10 pagesYour Personal Class Notes, Videos, Resources, Homework, LabsirfanilNo ratings yet

- Chapter 23 - Measuring A Nation's IncomeDocument22 pagesChapter 23 - Measuring A Nation's IncomeDannia PunkyNo ratings yet

- Quantitative and Qualitative Analysis Review QuestionsDocument4 pagesQuantitative and Qualitative Analysis Review QuestionsJohnNo ratings yet

- Credit Risk PT Telkom IndonesiaDocument4 pagesCredit Risk PT Telkom IndonesiaamadilaaNo ratings yet

- Mike She Printed v1Document370 pagesMike She Printed v1Grigoras MihaiNo ratings yet

- Matrix in ComputingDocument33 pagesMatrix in ComputingmisteryfollowNo ratings yet

- Math4424: Homework 4: Deadline: Nov. 21, 2012Document2 pagesMath4424: Homework 4: Deadline: Nov. 21, 2012Moshi ZeriNo ratings yet

- Final Exam S1 2007Document9 pagesFinal Exam S1 2007Mansor ShahNo ratings yet

- SolutionDocument2 pagesSolutionGrapes NovalesNo ratings yet

- Error Correction ModelDocument9 pagesError Correction Modelikin sodikinNo ratings yet

- 39-Article Text-131-1-10-20191111Document9 pages39-Article Text-131-1-10-20191111berlian gurningNo ratings yet

- Classical Normal Linear Regression ModelDocument13 pagesClassical Normal Linear Regression Modelwhoosh2008No ratings yet

- Chapter Iii - Part IIIDocument9 pagesChapter Iii - Part IIIUyên LêNo ratings yet

- Econometrics: Domodar N. GujaratiDocument29 pagesEconometrics: Domodar N. GujaratiSharif JanNo ratings yet

- 1965 - Shapiro - Analysis Variance NormalityDocument21 pages1965 - Shapiro - Analysis Variance Normalityetejada00No ratings yet

- Basics of The OLS Estimator: Study Guide For The MidtermDocument7 pagesBasics of The OLS Estimator: Study Guide For The MidtermConstantinos ConstantinouNo ratings yet

- Econometrics: Domodar N. GujaratiDocument36 pagesEconometrics: Domodar N. GujaratiHamid UllahNo ratings yet

- Week2 ContinuousProbabilityReviewDocument10 pagesWeek2 ContinuousProbabilityReviewLaljiNo ratings yet

- Notes 5Document11 pagesNotes 5Bharath M GNo ratings yet

- VarianceDocument31 pagesVariancePashmeen KaurNo ratings yet

- TWO-VARIABLE NewDocument19 pagesTWO-VARIABLE NewAbhimanyu VermaNo ratings yet

- Week 3 - The SLRM (2) - Updated PDFDocument49 pagesWeek 3 - The SLRM (2) - Updated PDFWindyee TanNo ratings yet

- Mult Hetero Notes AgdDocument29 pagesMult Hetero Notes AgdkiwiisflyineNo ratings yet

- Chi-Square and F Distributions: Children of The NormalDocument16 pagesChi-Square and F Distributions: Children of The NormalChennai TuitionsNo ratings yet

- Handouts CH 3 (Gujarati)Document5 pagesHandouts CH 3 (Gujarati)Atiq ur Rehman QamarNo ratings yet

- Domodar N. Gujarati: Chapter # 8: Multiple Regression AnalysisDocument41 pagesDomodar N. Gujarati: Chapter # 8: Multiple Regression AnalysisaraNo ratings yet

- MREG-PARTIALDocument54 pagesMREG-PARTIALObaydur RahmanNo ratings yet

- Hypothesis TestingDocument23 pagesHypothesis TestingNidhiNo ratings yet

- Metrics10 Violating AssumptionsDocument13 pagesMetrics10 Violating AssumptionsSmoochie WalanceNo ratings yet

- Sampling Distributions of The OLS EstimatorsDocument27 pagesSampling Distributions of The OLS Estimatorsbrianmfula2021No ratings yet

- 1 Causality: POLS571 - Longitudinal Data Analysis September 25, 2001Document6 pages1 Causality: POLS571 - Longitudinal Data Analysis September 25, 2001vignesh87No ratings yet

- Heteroskedasticity: - Homoskedasticity - Var (U - X)Document8 pagesHeteroskedasticity: - Homoskedasticity - Var (U - X)Ivan CheungNo ratings yet

- Eco 5Document30 pagesEco 5Nigussie BerhanuNo ratings yet

- 11.isca RJRS 2013 412 PDFDocument7 pages11.isca RJRS 2013 412 PDFKashif KhurshidNo ratings yet

- Asian Economic and Financial Review: Toheed AlamDocument11 pagesAsian Economic and Financial Review: Toheed AlamKashif KhurshidNo ratings yet

- The Determinants of Capital Structure: Analysis of Non Financial Firms Listed in Karachi Stock Exchange in PakistanDocument29 pagesThe Determinants of Capital Structure: Analysis of Non Financial Firms Listed in Karachi Stock Exchange in Pakistanxaxif8265No ratings yet

- 501 513 Libre PDFDocument13 pages501 513 Libre PDFKashif KhurshidNo ratings yet

- ECON 5027FG Chu PDFDocument3 pagesECON 5027FG Chu PDFKashif KhurshidNo ratings yet

- Econometrics ch6Document51 pagesEconometrics ch6muhendis_8900No ratings yet

- Moderation Meditation PDFDocument11 pagesModeration Meditation PDFMostafa Salah ElmokademNo ratings yet

- (PAPER2) - Cash Flow and Average Adjustments PDFDocument15 pages(PAPER2) - Cash Flow and Average Adjustments PDFQuan Quỷ QuyệtNo ratings yet

- Structural Equation Modeling: Dr. Arshad HassanDocument47 pagesStructural Equation Modeling: Dr. Arshad HassanKashif KhurshidNo ratings yet

- MREG-PARTIALDocument54 pagesMREG-PARTIALObaydur RahmanNo ratings yet

- Analyzing Impact Leverage Adjustment Costs Firm Performance PakistanDocument11 pagesAnalyzing Impact Leverage Adjustment Costs Firm Performance PakistanKashif KhurshidNo ratings yet

- 405 Econometrics Odar N. Gujarati: Prof. M. El-SakkaDocument27 pages405 Econometrics Odar N. Gujarati: Prof. M. El-SakkaKashif KhurshidNo ratings yet

- Estimatingthe Optimal Capital StructureDocument22 pagesEstimatingthe Optimal Capital StructureKashif KhurshidNo ratings yet

- By: Domodar N. Gujarati: Prof. M. El-SakkaDocument19 pagesBy: Domodar N. Gujarati: Prof. M. El-SakkarohanpjadhavNo ratings yet

- Finance Theory and Financial StrategyDocument13 pagesFinance Theory and Financial StrategyurgeltaNo ratings yet

- 501 513 Libre PDFDocument13 pages501 513 Libre PDFKashif KhurshidNo ratings yet

- By: Domodar N. Gujarati: Prof. M. El-SakkaDocument22 pagesBy: Domodar N. Gujarati: Prof. M. El-SakkaKashif KhurshidNo ratings yet

- Black ScholeDocument45 pagesBlack Scholechintu_thakkar9No ratings yet

- Strategic Financial ManagementDocument12 pagesStrategic Financial ManagementFatin MetassanNo ratings yet

- Strategic Financial ManagementDocument4 pagesStrategic Financial ManagementKashif KhurshidNo ratings yet

- 1140 3399 1 PBDocument9 pages1140 3399 1 PBMKashifKhurshidNo ratings yet

- 491 1437 1 PB Libre PDFDocument8 pages491 1437 1 PB Libre PDFKashif KhurshidNo ratings yet

- Fina Marriott Corporation PDFDocument8 pagesFina Marriott Corporation PDFKashif KhurshidNo ratings yet

- Luhman & Cunliffe Chapter OneDocument6 pagesLuhman & Cunliffe Chapter OneHiển HồNo ratings yet

- Discretionary-Accruals Models and Audit QualificationsDocument41 pagesDiscretionary-Accruals Models and Audit QualificationsErwan Saefullah100% (1)

- UST Debt Policy and Capital Structure AnalysisDocument10 pagesUST Debt Policy and Capital Structure AnalysisIrfan MohdNo ratings yet

- Course Outlines For Fundaments of Corporate Finance: Book: Fundamentals of Corporate Finance Author: Ross, Westerfield and Jordan. 10 EditionDocument1 pageCourse Outlines For Fundaments of Corporate Finance: Book: Fundamentals of Corporate Finance Author: Ross, Westerfield and Jordan. 10 EditionKashif KhurshidNo ratings yet

- Corporate Finance AssignmentDocument5 pagesCorporate Finance AssignmentKashif KhurshidNo ratings yet

- Statistical Modeling For Biomedical ResearchersDocument544 pagesStatistical Modeling For Biomedical ResearchersAli Ashraf100% (1)

- Command STATA Yg Sering DipakaiDocument5 pagesCommand STATA Yg Sering DipakaiNur Cholik Widyan SaNo ratings yet

- Machine LearningDocument19 pagesMachine LearningDakshNo ratings yet

- III Sem BSC Mathematics Complementary Course Statistical Inference On10dec2015Document79 pagesIII Sem BSC Mathematics Complementary Course Statistical Inference On10dec2015Maya SethumadhavanNo ratings yet

- Statistics and ProbabilityDocument5 pagesStatistics and ProbabilityFely VirayNo ratings yet

- Formulate null and alternative hypotheses for significance testsDocument22 pagesFormulate null and alternative hypotheses for significance testsJersey Pineda50% (2)

- MIT Probabilistic Systems Analysis Problem Set SolutionsDocument5 pagesMIT Probabilistic Systems Analysis Problem Set SolutionsDavid PattyNo ratings yet

- Exploratory Factor Analysis (Efa)Document56 pagesExploratory Factor Analysis (Efa)Madhavii PandyaNo ratings yet

- Bba 2 Sem Statistics For Management 21103123 Dec 2021Document4 pagesBba 2 Sem Statistics For Management 21103123 Dec 2021bzcadwaithNo ratings yet

- STK 511 Tugan I Semester Ganjil 2019/2020Document8 pagesSTK 511 Tugan I Semester Ganjil 2019/2020Imam MahriyansahNo ratings yet

- TDC - MSM: An R Library For The Analysis of Multi-State Survival DataDocument10 pagesTDC - MSM: An R Library For The Analysis of Multi-State Survival DataFlorencia FirenzeNo ratings yet

- Final Assessment STA104 - July 2020Document9 pagesFinal Assessment STA104 - July 2020aqilah030327No ratings yet

- Central Limit Theorem; Random Sample; Sampling DistributionDocument12 pagesCentral Limit Theorem; Random Sample; Sampling DistributionYesar Bin Mustafa Almaleki0% (1)

- Quartiles Cumulative FrequencyDocument7 pagesQuartiles Cumulative FrequencychegeNo ratings yet

- Pls ScriptDocument2 pagesPls ScriptNipNipNo ratings yet

- Chapter 3 - Forecasting - EXCEL TEMPLATESDocument14 pagesChapter 3 - Forecasting - EXCEL TEMPLATESKirsten Claire BurerosNo ratings yet

- SPSS LiaDocument8 pagesSPSS Liadilla ratnajNo ratings yet

- Stanford Stats 200Document6 pagesStanford Stats 200Jung Yoon SongNo ratings yet

- Data ProcessingDocument23 pagesData ProcessingMingma TamangNo ratings yet

- CH 07Document40 pagesCH 07Ambreen31No ratings yet

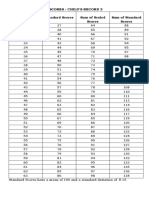

- Table of Standard Scores Child's Record 2Document1 pageTable of Standard Scores Child's Record 2Francis Nicor100% (4)

- MathDocument14 pagesMathunsaarshadNo ratings yet

- Chapter 4 Forecasting and Pro Forma Financial StatementsDocument59 pagesChapter 4 Forecasting and Pro Forma Financial Statementstantiba0% (1)

- Statistical Procedures Formulas and TablesDocument84 pagesStatistical Procedures Formulas and TablesdabNo ratings yet

- Universitas Seminar Hasil Penelitian Formulation by Design Sediaan Tablet Amoksisilin TrihidratDocument24 pagesUniversitas Seminar Hasil Penelitian Formulation by Design Sediaan Tablet Amoksisilin TrihidratZiyad AslamNo ratings yet

- F-Test Using One-Way ANOVA: ObjectivesDocument5 pagesF-Test Using One-Way ANOVA: ObjectiveslianNo ratings yet

- Test of Hypothesis in Statistics and ProbabilityDocument7 pagesTest of Hypothesis in Statistics and ProbabilityMaria Theresa Nartates-EguitaNo ratings yet

- Data Mining 101Document50 pagesData Mining 101karinoyNo ratings yet

- VC-dimension For Characterizing ClassifiersDocument40 pagesVC-dimension For Characterizing ClassifiersRatheesh P MNo ratings yet

- Processing and Interpretation of DataDocument12 pagesProcessing and Interpretation of DataMingma TamangNo ratings yet