
Data Mining: Concepts and Techniques

Chapter 11

Additional Theme: RFID Data Warehousing and Mining and High-Performance Computing

Jiawei Han and Micheline Kamber

Department of Computer Science

University of Illinois at Urbana-Champaign

www.cs.uiuc.edu/~hanj

©2006 Jiawei Han and Micheline Kamber. All rights reserved.

Acknowledgements: Hector Gonzalez and Shengnan Cong

12/08/21 Data Mining: Concepts and Techniques 1


Outline

Introduction to RFID Technology

Motivation: Why RFID-Warehousing?

RFID-Warehouse Architecture

Performance Study

Linking RFID Data Analysis with HPC

Conclusions


What is RFID?

Radio Frequency Identification (RFID): a technology that allows a sensor (reader) to read, from a distance and without line of sight, a unique electronic product code (EPC) associated with a tag

[Figure: tag and reader]


RFID System

[Figure: RFID system. Source: www.belgravium.com]


Applications

Supply chain management: real-time inventory tracking

Retail: active shelves monitor product availability

Access control: toll collection, credit cards, building access

Airline luggage management: implemented by British Airways to reduce lost or misplaced luggage (20 million bags a year)

Medical: implant patients with a tag that contains their medical history

Pet identification: implant an RFID tag with pet owner information (www.pet-id.net)


RFID Warehouse Architecture


Challenges of RFID Data Sets

Data generated by RFID systems is enormous due to redundancy and a low level of abstraction

Walmart is expected to generate 7 terabytes of RFID data per day

Solution requirements

A highly compact summary of the data

OLAP operations on a multi-dimensional view of the data

The summary should preserve the path structure of RFID data

It should be possible to drill down efficiently to individual tags when an interesting pattern is discovered


Why RFID-Warehousing? (1)

Lossless compression

Significantly reduce the size of the RFID data set by removing redundancy and grouping objects that move and stay together

Data cleaning: reasoning based on more complete information

Multiple readings, missed readings, erroneous readings, bulky movement, …

Multi-dimensional summary: product, location, time, …

Store manager: check item movements from the backroom to different shelves in the store

Region manager: collapse intra-store movements and look at distribution centers, warehouses, and stores


Why RFID-Warehousing? (2)

Query processing

Support for OLAP: roll-up, drill-down, slice, and dice

Path queries: new to RFID warehouses, about the structure of paths

Which products that go through quality control have shorter paths?

Which locations are common to the paths of a set of defective auto parts?

Identify containers at a port that have deviated from their historic paths

Data mining

Find trends, outliers, and frequent, sequential, and flow patterns, …


Example: A Supply Chain Store

A retailer with 3,000 stores, selling 10,000 items a day per store

Each item moves 10 times on average before being sold

Movement recorded as (EPC, location, second)

Data volume: 300 million tuples per day (3,000 × 10,000 × 10, after redundancy removal)

OLAP query

Average time for outerwear items to move from the warehouse to the checkout counter in March 2006?

Costly to answer if it requires scanning over 9 billion tuples for March (about 300 million per day for 31 days)


Cleaning of RFID Data Records

Raw data: (EPC, location, time)

Duplicate records due to multiple readings of a product at the same location

(r1,l1,t1) (r1,l1,t2) ... (r1,l1,t10)

Cleansed data: minimal information to store; the raw data is then removed

(EPC, location, time_in, time_out)

(r1,l1,t1,t10)

Warehousing can help fill in missing records and correct wrongly registered information
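The collapse of raw readings into cleansed stay records can be sketched as follows. This is a minimal illustration, not the authors' cleaning procedure: it assumes readings arrive as (EPC, location, time) tuples with integer timestamps, and that a tag has left a location as soon as it is read somewhere else. The name `clean_readings` is hypothetical, and missed or erroneous readings are not repaired.

```python
from itertools import groupby
from typing import Iterable, List, Tuple

Reading = Tuple[str, str, int]       # (epc, location, time)
Stay = Tuple[str, str, int, int]     # (epc, location, time_in, time_out)

def clean_readings(readings: Iterable[Reading]) -> List[Stay]:
    """Collapse repeated readings of a tag at one location into a single stay.

    Assumes a tag has left a location once it is read somewhere else; missed or
    erroneous readings are not repaired here.
    """
    stays: List[Stay] = []
    # Sort by tag, then time, so that each tag's readings form one chronological run.
    ordered = sorted(readings, key=lambda r: (r[0], r[2]))
    for epc, tag_reads in groupby(ordered, key=lambda r: r[0]):
        # Consecutive readings at the same location belong to the same stay.
        for loc, run in groupby(tag_reads, key=lambda r: r[1]):
            times = [t for _, _, t in run]
            stays.append((epc, loc, times[0], times[-1]))
    return stays

# Ten readings of r1 at l1 (t1..t10), then two at l2
raw = [("r1", "l1", t) for t in range(1, 11)] + [("r1", "l2", 20), ("r1", "l2", 30)]
print(clean_readings(raw))  # [('r1', 'l1', 1, 10), ('r1', 'l2', 20, 30)]
```

Because grouping is on consecutive runs, a tag that returns to a location it visited earlier produces a new stay rather than being merged with the old one.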


Key Compression Ideas (I)

Bulky object movements

Objects often move and stay together through the supply chain

If 1000 packs of soda stay together at the distribution center, register a single record

(GID, distribution center, time_in, time_out)

GID is a generalized identifier that represents the 1000 packs that stayed together at the distribution center

[Figure: items flow from the factory through distribution centers 1 and 2 to stores 1 and 2 and onto shelves 1 and 2, moving in progressively smaller groups: 10 pallets (1000 cases), 20 cases (1000 packs), and 10 packs (12 sodas)]
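The single-record idea above can be illustrated with a small grouping step. This sketch is a simplification under stated assumptions: it groups per-tag stays only on (location, time_in, time_out), whereas the RFID-cuboid also requires tags to share a path prefix (handled by the prefix-tree construction later in the chapter). The function `group_bulky` and the GID labels "g1", "g2", ... are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Stay = Tuple[str, str, int, int]                     # (epc, location, time_in, time_out)
GroupRecord = Tuple[str, str, int, int, List[str]]   # (gid, location, time_in, time_out, epcs)

def group_bulky(stays: List[Stay]) -> List[GroupRecord]:
    """Replace per-tag stays that share a location and time window with one group record.

    Simplification: groups only on (location, time_in, time_out); the GID labels
    are illustrative and do not encode paths.
    """
    groups: Dict[Tuple[str, int, int], List[str]] = defaultdict(list)
    for epc, loc, t_in, t_out in stays:
        groups[(loc, t_in, t_out)].append(epc)
    records: List[GroupRecord] = []
    for i, ((loc, t_in, t_out), epcs) in enumerate(sorted(groups.items()), start=1):
        records.append((f"g{i}", loc, t_in, t_out, sorted(epcs)))
    return records

# 1000 packs of soda that stay together at the distribution center
packs = [(f"pack{n:04d}", "dist_center", 100, 250) for n in range(1000)]
records = group_bulky(packs)
print(len(records), len(records[0][4]))  # 1 1000
```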


Key Compression Ideas (II)

Data generalization

Analysis usually takes place at a much higher level of abstraction than the one present in raw RFID data

Aggregate object movements into fewer records

If time is of interest only at a coarse level (e.g., hours or days), merge minute-level records into records at that level

Merging and/or collapsing of path segments

Uninteresting path segments can be ignored or merged

Multiple item movements within the same store may be uninteresting to a regional manager and thus can be merged
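Time generalization can be sketched as coarsening timestamps and merging stays that become identical. This is an illustration under stated assumptions, not the authors' implementation: times are integer minutes, hour buckets come from floor division, and only a count measure is kept. The function `roll_up_time` is hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple

Stay = Tuple[str, str, int, int]   # (epc, location, time_in, time_out), times in minutes

def roll_up_time(stays: List[Stay], minutes_per_unit: int) -> Dict[Tuple[str, int, int], int]:
    """Coarsen timestamps to a larger unit and merge stays that become identical.

    Returns a count measure per (location, time_in_unit, time_out_unit).
    """
    merged: Counter = Counter()
    for _epc, loc, t_in, t_out in stays:
        merged[(loc, t_in // minutes_per_unit, t_out // minutes_per_unit)] += 1
    return dict(merged)

stays = [("r1", "shelf", 61, 130), ("r2", "shelf", 75, 125), ("r3", "shelf", 200, 230)]
print(roll_up_time(stays, 60))  # {('shelf', 1, 2): 2, ('shelf', 3, 3): 1}
```

Three minute-level records become two hour-level records; the per-tag identity is dropped in favor of a count, which is why this step trades detail for compression.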


Path-Independent Generalization

[Figure: concept hierarchy for the product dimension]

Category level: Clothing

Type level: Outerwear, Shoes (interesting level)

SKU level: Shirt, Jacket, …

EPC level: Shirt 1 … Shirt n (cleansed RFID database level)
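Path-independent generalization amounts to climbing a concept hierarchy before aggregating. The sketch below assumes a simple child-to-parent dictionary mirroring the hierarchy above; the item names ("shirt1", "jacket1", ...) and the functions `generalize` and `count_at_level` are hypothetical.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical child -> parent links following the hierarchy above
# (EPC -> SKU -> type -> category).
PARENT: Dict[str, str] = {
    "shirt1": "Shirt", "shirt2": "Shirt", "jacket1": "Jacket",
    "Shirt": "Outerwear", "Jacket": "Outerwear",
    "Outerwear": "Clothing", "Shoes": "Clothing",
}

def generalize(item: str, levels_up: int) -> str:
    """Climb `levels_up` steps of the concept hierarchy."""
    for _ in range(levels_up):
        item = PARENT[item]
    return item

def count_at_level(epcs: List[str], levels_up: int) -> Dict[str, int]:
    """Aggregate EPC-level items into counts at a higher abstraction level."""
    return dict(Counter(generalize(e, levels_up) for e in epcs))

tags = ["shirt1", "shirt2", "jacket1"]
print(count_at_level(tags, 1))  # {'Shirt': 2, 'Jacket': 1}
print(count_at_level(tags, 2))  # {'Outerwear': 3}
```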


Path Generalization

Original path: dist. center → truck → backroom → shelf → checkout

Store view: Transportation → backroom → shelf → checkout

Transportation view: dist. center → truck → Store
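Both views can be produced by one mechanism: relabel each location for the chosen view, then collapse consecutive repeats. This is a minimal sketch; the mapping dictionaries and the function `generalize_path` are hypothetical and only reproduce the two views above.

```python
from typing import Dict, List

def generalize_path(path: List[str], mapping: Dict[str, str]) -> List[str]:
    """Map each location to its view-specific label and collapse consecutive repeats."""
    result: List[str] = []
    for loc in path:
        label = mapping.get(loc, loc)       # unmapped locations are kept as-is
        if not result or result[-1] != label:
            result.append(label)
    return result

path = ["dist. center", "truck", "backroom", "shelf", "checkout"]
store_view = {"dist. center": "Transportation", "truck": "Transportation"}
transport_view = {"backroom": "Store", "shelf": "Store", "checkout": "Store"}
print(generalize_path(path, store_view))      # ['Transportation', 'backroom', 'shelf', 'checkout']
print(generalize_path(path, transport_view))  # ['dist. center', 'truck', 'Store']
```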


Why Not Use a Traditional Data Cube?

Fact table: (EPC, location, time_in, time_out)

Aggregate: a measure at a single location

e.g., what is the average time that milk stays in the refrigerator in Illinois stores?

What is missing?

Measures computed on items that travel through a series of locations

e.g., what is the average time that milk stays in the refrigerator in Champaign when coming from farm A and warehouse B?

Traditional cubes miss the path structure of the data


RFID-Cube Architecture


RFID-Cuboid Architecture (II)

Stay table: (GIDs, location, time_in, time_out: measures)

Records information on items that stay together at a given location

If transitions were recorded instead, queries would be difficult to answer because many intersections would be needed

Map table: (GID, <GID1, ..., GIDn>)

Links together stages that belong to the same path, providing additional compression and query-processing efficiency

A high-level GID points to lower-level GIDs

If complete EPC lists were saved instead, retrieving long lists would incur high I/O costs and query processing would be costly

Information table: (EPC list, attribute 1, ..., attribute n)

Records path-independent attributes of the items, e.g., color, manufacturer, price


RFID-Cuboid Example

Cleansed RFID database

epc  loc  t_in  t_out
r1   l1   t1    t10
r1   l2   t20   t30
r2   l1   t1    t10
r2   l2   t20   t30
r3   l1   t1    t10
r3   l4   t15   t20

Stay table

gids  epcs      loc  t_in  t_out
g1    r1,r2,r3  l1   t1    t10
g1.1  r1,r2     l2   t20   t30
g1.2  r3        l4   t15   t20

Map table

gid   gids
g1    g1.1,g1.2
g1.1  r1,r2
g1.2  r3
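Drilling down from a GID to individual tags is a recursive walk of the map table. The sketch below encodes the map table of this example as a dictionary and assumes that any name which is not a key is an EPC; the function `expand_gid` is hypothetical.

```python
from typing import Dict, List

# Map table from the example above: each GID points to lower-level GIDs or, at
# the lowest level, to EPCs. Names that are not keys are treated as EPCs.
MAP_TABLE: Dict[str, List[str]] = {
    "g1": ["g1.1", "g1.2"],
    "g1.1": ["r1", "r2"],
    "g1.2": ["r3"],
}

def expand_gid(gid: str, map_table: Dict[str, List[str]]) -> List[str]:
    """Drill down from a GID to the individual EPCs it represents (depth-first)."""
    children = map_table.get(gid)
    if children is None:           # not in the map table: an individual tag
        return [gid]
    epcs: List[str] = []
    for child in children:
        epcs.extend(expand_gid(child, map_table))
    return epcs

print(expand_gid("g1", MAP_TABLE))    # ['r1', 'r2', 'r3']
print(expand_gid("g1.2", MAP_TABLE))  # ['r3']
```

Only the lowest map-table level stores EPCs, so a high-level GID stays small no matter how many tags it ultimately covers.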


Benefits of the Stay Table (I)

Query: What is the average time that items stay at location l?

[Figure: items reach l from locations l1, …, ln and leave it for locations ln+1, …, ln+m]

Transition grouping

Retrieve all transitions with destination = l

Retrieve all transitions with origin = l

Intersect the results and compute the average time

I/O cost: n + m retrievals

Prefix tree

Retrieve n records

Stay grouping

Retrieve the stay record with location = l

I/O cost: 1
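With stay grouping, the average stay time at a location needs only that location's stay records. The sketch below is a hypothetical illustration: a linear scan stands in for an index lookup on location, the `StayRecord` class and `average_stay` function are invented names, and the rows are taken from the stay table of the RFID-Cube Construction example later in the chapter (with tN written as N).

```python
from dataclasses import dataclass
from typing import List

@dataclass
class StayRecord:
    gids: List[str]
    loc: str
    t_in: int
    t_out: int
    count: int          # number of tags covered by the record

def average_stay(stay_table: List[StayRecord], loc: str) -> float:
    """Average time items spend at `loc`, using only the stay records for that location."""
    rows = [r for r in stay_table if r.loc == loc]
    total_items = sum(r.count for r in rows)
    if total_items == 0:
        raise ValueError(f"no stay records for location {loc!r}")
    return sum((r.t_out - r.t_in) * r.count for r in rows) / total_items

table = [
    StayRecord(["0.0.0", "0.1.0"], "l3", 20, 30, 6),
    StayRecord(["0.0.0.0", "0.1.0.0"], "l5", 40, 60, 5),
    StayRecord(["0.1.0.1"], "l6", 35, 50, 1),
]
print(average_stay(table, "l5"))  # 20.0
```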


Benefits of the Stay Table (II)

Query: How many boxes of milk traveled through the locations l1, l7, and l13?

With the cleansed database

Records: (r1,l1,t1,t2) (r1,l2,t3,t4) (r2,l1,t1,t2) (r2,l2,t3,t4) … (rk,l1,t1,t2) (rk,l2,t3,t4)

Strategy: retrieve the itemsets for locations l1, l7, l13, …; intersect the itemsets

I/O cost: one I/O per item in locations l1 or l7 or l13

Observation: very costly; we retrieve records at the individual item level

With the stay table

Records: (g1,l1,t1,t2) (g2,l2,t3,t4) …

Strategy: retrieve the gids for l1, l7, l13, …; intersect the gids

I/O cost: one I/O per GID in locations l1, l7, and l13

Observation: we retrieve records at the group level and thus greatly reduce I/O costs


Benefits of the Map Table

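[Figure: a path tree with root l1, children l2, l3, l4, and leaves l5 to l10 holding the EPC sets {r1,..,ri}, {ri+1,..,rj}, {rj+1,..,rk}, {rk+1,..,rl}, {rl+1,..,rm}, {rm+1,..,rn}]

Level          #EPCs (tag lists)  #GIDs (map table)
l1             n                  1
l2, l3, l4     n                  3
l5, …, l10     n                  6
Total          3n                 10 + n

Storing the full tag list at every node repeats each of the n EPCs once per level (3n entries), whereas the map table stores only 10 GIDs plus the n EPCs once, at the leaves (10 + n)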


Path-Dependent Naming of GIDs

Assign to each GID a unique identifier that encodes the path traversed by the items that it points to

Path-dependent names make it easy to detect whether locations form a path

[Figure: path tree with GIDs 0.0 at l1 and 0.1 at l2; 0.0.0 and 0.1.0 at l3, 0.1.1 at l4; 0.0.0.0 at l5, 0.1.0.1 at l6]
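The path test reduces to prefix checks on GIDs: locations form a path if a chain of GIDs exists in which each one extends the previous one. The sketch below assumes the GID-per-location assignment of the naming example above; `GIDS_BY_LOC`, `is_descendant`, and `locations_form_path` are hypothetical names.

```python
from typing import Dict, List

# GIDs per location, taken from the path-dependent naming example above.
GIDS_BY_LOC: Dict[str, List[str]] = {
    "l1": ["0.0"], "l2": ["0.1"],
    "l3": ["0.0.0", "0.1.0"], "l4": ["0.1.1"],
    "l5": ["0.0.0.0"], "l6": ["0.1.0.1"],
}

def is_descendant(gid: str, ancestor: str) -> bool:
    """True if `gid` lies below `ancestor` in the path tree."""
    # The trailing dot stops "0.10" from matching ancestor "0.1".
    return gid.startswith(ancestor + ".")

def locations_form_path(locs: List[str], gids_by_loc: Dict[str, List[str]]) -> bool:
    """True if some items visit all of `locs` (non-empty) in the given order."""
    frontier = gids_by_loc.get(locs[0], [])
    for loc in locs[1:]:
        frontier = [g for g in gids_by_loc.get(loc, [])
                    if any(is_descendant(g, a) for a in frontier)]
    return bool(frontier)

print(locations_form_path(["l1", "l3", "l5"], GIDS_BY_LOC))  # True
print(locations_form_path(["l2", "l3", "l6"], GIDS_BY_LOC))  # True
print(locations_form_path(["l1", "l6"], GIDS_BY_LOC))        # False
```

No EPC list is touched: the check works entirely on the short GID strings.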


RFID-Cuboid Construction Algorithm

1. Build a prefix tree for the paths in the cleansed database

2. For each node, record a separate measure for each group of items that share the same leaf and information record

3. Assign GIDs to each node: GID = parent GID + unique id

4. Each node generates a stay record for each distinct measure

5. If multiple nodes share the same location, time, and measure, generate a single record with multiple GIDs
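The construction steps above can be sketched in one pass over the paths. This is a simplified illustration, not the authors' implementation: each input is a (tag count, stages) pair for a group of tags with identical paths, children are keyed by location, and measures are grouped only by (t_in, t_out) rather than by leaf and information record as in step 2. `Node` and `build_cuboid` are hypothetical names.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Stage = Tuple[str, int, int]            # (location, time_in, time_out)

@dataclass
class Node:
    gid: str
    loc: str
    measures: Counter = field(default_factory=Counter)    # (t_in, t_out) -> tag count
    children: Dict[str, "Node"] = field(default_factory=dict)

def build_cuboid(paths: List[Tuple[int, List[Stage]]]):
    """Build a path prefix tree in one pass, then emit merged stay records."""
    root = Node("0", "")
    for count, stages in paths:                         # step 1: prefix tree
        node = root
        for loc, t_in, t_out in stages:
            if loc not in node.children:                # step 3: GID = parent GID + unique id
                node.children[loc] = Node(f"{node.gid}.{len(node.children)}", loc)
            node = node.children[loc]
            node.measures[(t_in, t_out)] += count       # step 2 (simplified): per-node measure
    gids: Dict[Stage, List[str]] = defaultdict(list)
    counts: Counter = Counter()
    stack = list(root.children.values())
    while stack:                                        # steps 4-5: stay records, merged
        node = stack.pop()
        for (t_in, t_out), n in node.measures.items():
            key = (node.loc, t_in, t_out)
            gids[key].append(node.gid)
            counts[key] += n
        stack.extend(node.children.values())
    records = [(sorted(g), loc, t_in, t_out, counts[(loc, t_in, t_out)])
               for (loc, t_in, t_out), g in gids.items()]
    return sorted(records, key=lambda r: (r[1], r[2], r[3]))

paths = [
    (3, [("l1", 1, 10), ("l3", 20, 30), ("l5", 40, 60)]),   # r1, r2, r3
    (2, [("l2", 1, 8), ("l3", 20, 30), ("l5", 40, 60)]),    # r5, r6
    (1, [("l2", 1, 8), ("l3", 20, 30), ("l6", 35, 50)]),    # r7
]
for record in build_cuboid(paths):
    print(record)
```

With these inputs the sketch assigns the same GIDs as the RFID-Cube Construction example and merges 0.0.0/0.1.0 at l3 (count 6) and 0.0.0.0/0.1.0.0 at l5 (count 5) into single records, as step 5 prescribes.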


RFID-Cube Construction

Path tree (node GID, location, measure t_in,t_out: count; leaves list their tags)

0.0 l1 (t1,t10: 3)
    0.0.0 l3 (t20,t30: 3)
        0.0.0.0 l5 (t40,t60: 3) {r1,r2,r3}
0.1 l2 (t1,t8: 3)
    0.1.0 l3 (t20,t30: 3)
        0.1.0.0 l5 (t40,t60: 2) {r5,r6}
        0.1.0.1 l6 (t35,t50: 1) {r7}
    0.1.1 l4 (t10,t20: 2) {r8,r9}

Stay table

GIDs              loc  t_in  t_out  count
0.0               l1   t1    t10    3
0.1               l2   t1    t8     3
0.0.0, 0.1.0      l3   t20   t30    6
0.1.1             l4   t10   t20    2
0.0.0.0, 0.1.0.0  l5   t40   t60    5
0.1.0.1           l6   t35   t50    1


RFID-Cube Properties

The RFID-cuboid can be constructed in a single scan of the cleansed RFID database

The RFID-cuboid provides lossless compression at its level of abstraction

The size of the RFID-cuboid is smaller than the cleansed data

In our experiments we get 80% lossless compression at the level of abstraction of the raw data


Query Processing

Traditional OLAP operations

Roll-up, drill-down, slice, and dice

Can be implemented efficiently with traditional optimization techniques, e.g., what is the average time spent by milk at the shelf?

$\sigma_{\text{stay.location} = \text{'shelf'},\ \text{info.product} = \text{'milk'}}(\text{stay} \bowtie_{\text{gid}} \text{info})$

Path selection (new operation)

Compute an aggregate measure on the tags that travel through a set of locations and that match a selection criterion on path-independent dimensions

$q \leftarrow \langle \sigma_{c}\,\text{info},\ (\sigma_{c_1}\text{stage}_1, \ldots, \sigma_{c_k}\text{stage}_k) \rangle$


Query Processing (II)

Query: What is the average time spent from l3 to l5?

GIDs for l3: <0.0.0>, <0.1.0>

GIDs for l5: <0.0.0.0>, <0.1.0.0>

Prefix pairs: p1: (<0.0.0>, <0.0.0.0>), p2: (<0.1.0>, <0.1.0.0>)

Retrieve the stay records for each pair (including intermediate steps) and compute the measure

Savings: no EPC-list intersection is needed; each EPC list may contain millions of different tags, and retrieving them is a significant I/O cost
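The prefix-pair strategy can be sketched on per-GID records. Following the path tree and stay table of the RFID-Cube Construction slide, the l5 GIDs are 0.0.0.0 and 0.1.0.0. Two assumptions are labeled here: "time spent from l3 to l5" is read as the transit time from leaving l3 to arriving at l5, weighted by the tag count at l5, and intermediate stages are ignored. `NODES` and `average_transit` are hypothetical names, and tN is written as N.

```python
from typing import Dict, Tuple

# Per-GID stay records (location, time_in, time_out, count) from the path tree
# of the RFID-Cube Construction example, with t20 written as 20 and so on.
NODES: Dict[str, Tuple[str, int, int, int]] = {
    "0.0.0": ("l3", 20, 30, 3),
    "0.1.0": ("l3", 20, 30, 3),
    "0.0.0.0": ("l5", 40, 60, 3),
    "0.1.0.0": ("l5", 40, 60, 2),
    "0.1.0.1": ("l6", 35, 50, 1),
}

def average_transit(nodes: Dict[str, Tuple[str, int, int, int]], src: str, dst: str) -> float:
    """Average time from leaving `src` to arriving at `dst`, via GID prefix pairs."""
    src_gids = [g for g, rec in nodes.items() if rec[0] == src]
    dst_gids = [g for g, rec in nodes.items() if rec[0] == dst]
    # A pair qualifies when the dst GID extends the src GID (path-dependent naming).
    pairs = [(s, d) for s in src_gids for d in dst_gids if d.startswith(s + ".")]
    total_items = sum(nodes[d][3] for _, d in pairs)
    if total_items == 0:
        raise ValueError(f"no path from {src!r} to {dst!r}")
    total_time = sum((nodes[d][1] - nodes[s][2]) * nodes[d][3] for s, d in pairs)
    return total_time / total_items

print(average_transit(NODES, "l3", "l5"))  # 10.0
```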


From RFID-Cuboids to RFID-Warehouse

Materialize the lowest RFID-cuboid at the minimum level of abstraction of interest to a user

Materialize frequently requested RFID-cuboids

Materialization is done from the smallest materialized RFID-cuboid that is at a lower level of abstraction
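The source-selection rule can be sketched as a filter followed by a minimum. This assumes, as a simplification, that each cuboid records one integer abstraction level per dimension (0 = finest) and that "lower level of abstraction" means at least as detailed on every dimension. The `Cuboid` class, `pick_source`, and the cuboid names and sizes are hypothetical placeholders, not results from the study.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class Cuboid:
    name: str
    levels: Tuple[int, ...]   # abstraction level per dimension; 0 = finest
    size_mb: float

def pick_source(materialized: List[Cuboid], target: Tuple[int, ...]) -> Optional[Cuboid]:
    """Smallest materialized cuboid at least as detailed as `target` on every dimension."""
    candidates = [c for c in materialized
                  if all(have <= want for have, want in zip(c.levels, target))]
    return min(candidates, key=lambda c: c.size_mb, default=None)

# Placeholder cuboids over three dimensions (e.g., product, time, location).
cuboids = [
    Cuboid("base", (0, 0, 0), 300.0),
    Cuboid("by_sku_hour", (1, 1, 0), 40.0),
    Cuboid("by_type_day", (2, 2, 1), 5.0),
]
print(pick_source(cuboids, (2, 2, 0)).name)  # by_sku_hour
```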


RFID-Cube Compression (I)

Compression vs. cleansed data size

[Figure: size (MBytes) of the clean, nomap, and map representations vs. input stay records (millions)]

P=1000, B=(500,150,40,8,1), k = 5

Lossless compression: the cuboid is at the same level of abstraction as the cleansed RFID database

Compression vs. data bulkiness

[Figure: size (MBytes) of the clean, nomap, and map representations vs. path bulkiness (settings a to e)]

P=1000, N = 1,000,000, k = 5

The map gives significant benefits for bulky data

For data where items move individually, we are better off using tag lists


RFID-Cube Compression (II)

[Figure: size (MBytes) of the nomap and map representations vs. abstraction level (1 to 4)]

Compression vs. abstraction level

P=1000, B=(500,150,40,8,1), k = 5, N=1,000,000

The map provides significant savings over using tag lists

At very high levels of abstraction the stay table is very small; most of the space is used to record RFID tags


RFID-Cube Construction Time

[Figure: construction time (seconds) vs. aggregation level (level 0 to level 3) for 100K, 200K, 400K, 800K, and 1600K tags]

Construction time

P=1000, B=(500,150,40,8,1), k = 5, N=1,000,000

Savings from constructing from a lower-level cuboid: 50% to 80%


Query Processing

Time vs. DB size

[Figure: I/O operations (log scale) of clean, nomap, and map vs. input stay records (millions)]

P=1000, B=(500,150,40,8,1), k = 5

Speedup due to the stay table: 1 order of magnitude

Speedup due to the stay table and map table: 2 orders of magnitude

Time vs. bulkiness

[Figure: I/O operations (log scale) of clean, nomap, and map vs. path bulkiness]

P=1000, k = 5

Speedup is most significant for bulky paths

For non-bulky paths, performance is no worse than using the clean table


Discussion

Our RFID cube model works well for bulky object movements

But in many applications this assumption does not hold, and other models are needed

We have focused only on warehousing RFID data; a variety of other problems remain open:

Path classification and clustering

Workflow analysis

Trend analysis

Sophisticated RFID data cleaning


Linking RFID Data Analysis with HPC

High-performance computing will play an important role in RFID data warehousing and data analysis

Most of the data cleaning process can be done in a parallel and distributed manner

Stay and map tables can be constructed in parallel

Parallel computation and consolidation of multi-layer and multi-path data cubes

Query processing and mining can be done in parallel


Parallel RFID Data Mining: Promising

Parallel computing has been successfully applied to rather sophisticated data mining algorithms

Parallelizing frequent-pattern mining (based on FP-growth)

Shengnan Cong, Jiawei Han, Jay Hoeflinger, and David Padua, “A Sampling-based Framework for Parallel Data Mining,” PPoPP'05 (ACM SIGPLAN Symp. on Principles & Practice of Parallel Programming)

Parallelizing sequential-pattern mining (based on PrefixSpan)

Shengnan Cong, Jiawei Han, and David Padua, “Parallel Mining of Closed Sequential Patterns,” KDD'05

Parallel RFID data analysis is highly promising


Mining Frequent Patterns

Breadth-first search vs. depth-first search

[Figure: the itemset lattice over items A to E (null; A, B, C, D, E; AB, …, DE; ABC, …, CDE; ABCD, …, BCDE; ABCDE), traversed breadth-first and depth-first]

Depth-first mining algorithms have been shown to be more efficient

Depth-first mining algorithms are easier to parallelize


Parallel Frequent-Pattern Mining

Target platform: distributed-memory system

Framework for parallelization

Step 1:

Each processor scans its local portion of the dataset and accumulates the number of occurrences of each item

A reduction obtains the global number of occurrences

Step 2:

Partition the frequent items and assign a subset to each processor

Each processor makes projections for its assigned items

Step 3:

Each processor mines its local projections independently
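The three steps can be simulated sequentially to show how the work divides. This sketch is not the authors' MPI implementation and not FP-growth: a plain Python loop stands in for processors, summing Counters stands in for the reduction, each projection gathers matching records from all partitions (standing in for data exchange), and the miner is a simple depth-first projection-based miner. `mine_projection` and `parallel_mine` are hypothetical names.

```python
from collections import Counter
from typing import Dict, List, Tuple

Pattern = Tuple[str, ...]

def mine_projection(db: List[List[str]], min_sup: int, prefix: Pattern) -> Dict[Pattern, int]:
    """Depth-first, projection-based mining of one projected database
    (a simplified pattern-growth miner, not FP-growth itself)."""
    counts = Counter(item for rec in db for item in set(rec))
    found: Dict[Pattern, int] = {}
    for item in sorted(i for i, c in counts.items() if c >= min_sup):
        pattern = prefix + (item,)
        found[pattern] = counts[item]
        # Project on `item`: keep records containing it, restricted to larger items.
        projected = [[j for j in rec if j > item] for rec in db if item in rec]
        found.update(mine_projection(projected, min_sup, pattern))
    return found

def parallel_mine(partitions: List[List[List[str]]], min_sup: int, n_procs: int) -> Dict[Pattern, int]:
    """Simulate the three-step framework; each partition is one processor's local data."""
    # Step 1: local item counts, then a reduction to global counts.
    local_counts = (Counter(i for rec in part for i in set(rec)) for part in partitions)
    global_counts = sum(local_counts, Counter())
    frequent = sorted(i for i, c in global_counts.items() if c >= min_sup)
    results: Dict[Pattern, int] = {}
    for proc in range(n_procs):
        # Step 2: round-robin assignment of frequent items, then projections.
        for item in frequent[proc::n_procs]:
            projected = [[j for j in rec if j > item]
                         for part in partitions for rec in part if item in rec]
            # Step 3: each "processor" mines its projections independently.
            results[(item,)] = global_counts[item]
            results.update(mine_projection(projected, min_sup, (item,)))
    return results

db = [["a", "c", "d", "f", "m"], ["b", "c", "f", "m"], ["b", "f"], ["b", "c"], ["a", "f", "m"]]
parts = [db[:2], db[2:4], db[4:]]        # three local portions of the dataset
result = parallel_mine(parts, min_sup=2, n_procs=2)
print(result[("f", "m")], result[("a", "f", "m")])  # 3 2
```

Because each projection is mined by exactly one processor, a single very large projection bounds the achievable speedup, which is the load-balancing problem discussed next.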


Parallel Frequent-Pattern Mining (2)

Load-balancing problem

Some projections take too long to mine relative to the overall mining time

(a) Datasets for frequent-itemset mining

Dataset              Maximal/Overall
mushroom             7.66%
connect              12.4%
pumsb                14.7%
pumsb_star           47.6%
T30I0.2D1K           42.1%
T40I10D100K          4.15%
T50I5D500K           3.07%

(b) Datasets for sequential-pattern mining

Dataset              Maximal/Overall
C10N0.1T8S8I8        21.4%
C50N10T8S20I2.5      13.8%
C100N5T2.5S10I1.25   14.2%
C200N10T2.5S10I1.25  10.1%
C100N20T2.5S10I1.25  11.6%

(c) Datasets for closed-sequential-pattern mining

Dataset              Maximal/Overall
C100S50N10           15.3%
C100S100N5           5.02%
C200S25N9            4.53%
gazelle              25.9%

Solution: the large projections must be partitioned

Challenge: how can the large projections be identified?
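Why a single large projection hurts can be made explicit with a simple bound. Assuming (our reading of the table) that Maximal/Overall is the mining time of the largest projection, $T_{\max}$, divided by the overall sequential mining time $T_1$, and that each projection is mined entirely on one processor, the parallel time on $p$ processors can never drop below $T_{\max}$:

```latex
S_p = \frac{T_1}{T_p} \le \frac{T_1}{T_{\max}} = \frac{1}{T_{\max}/T_1},
\qquad \text{e.g. pumsb\_star: } S_p \le \frac{1}{0.476} \approx 2.1 \ \text{for any } p.
```

By the same bound, T30I0.2D1K (42.1%) is capped near 2.4, while T50I5D500K (3.07%) still allows a speedup of up to about 33, ignoring communication and other overheads.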


How to Identify the Large Projections?

To identify the large projections, we need an estimate of the relative mining time of the projections

Static estimation

Study the correlation with the dataset parameters: number of items, number of records, width of records, …

Study the correlation with the characteristics of the projection: depth, bushiness, tree size, number of leaves, fan-out/in, …

Result: no rule relating the above parameters to the projection mining time was found


Dynamic Estimation

Runtime sampling

Use the relative mining time of a sample to estimate the relative mining time of the whole dataset

Accuracy vs. overhead

Random sampling: randomly select a subset of records

Not accurate with a small sample size

e.g., dataset pumsb with 1% random sampling

Becomes accurate when the sample size exceeds 30%, but the sampling overhead is then over 50%


Selective Sampling

Selective sampling: for each record, some items are removed

In frequent-itemset mining:

Discard the infrequent items

Discard a fraction t of the most frequent items

Example: support threshold = 2, t = 20%

Item counts: f: 4, b: 3, c: 3, m: 3, a: 2, d: 1 (d is infrequent; f is the most frequent item)

ID  Dataset        Selective sample
1   a, c, d, f, m  a, c, m
2   b, c, f, m     b, c, m
3   b, f           b
4   b, c           b, c
5   a, f, m        a, m
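Selective sampling as described above can be sketched in a few lines and checked against the example. Two assumptions are labeled here: the fraction t is applied to the frequent items ranked by support, rounding down, and ties are broken by first appearance in the dataset. The function `selective_sample` is hypothetical.

```python
import math
from collections import Counter
from typing import List

def selective_sample(db: List[List[str]], min_sup: int, t: float) -> List[List[str]]:
    """Drop infrequent items and the top fraction t of frequent items from every record."""
    # dict.fromkeys de-duplicates each record while keeping first-seen order.
    counts = Counter(item for rec in db for item in dict.fromkeys(rec))
    # Frequent items ranked by support (ties keep first-seen order).
    frequent = [item for item, c in counts.most_common() if c >= min_sup]
    n_drop = math.floor(t * len(frequent))       # assumption: round down
    keep = set(frequent[n_drop:])
    return [[item for item in rec if item in keep] for rec in db]

db = [["a", "c", "d", "f", "m"], ["b", "c", "f", "m"], ["b", "f"], ["b", "c"], ["a", "f", "m"]]
print(selective_sample(db, min_sup=2, t=0.2))
# [['a', 'c', 'm'], ['b', 'c', 'm'], ['b'], ['b', 'c'], ['a', 'm']]
```

With five frequent items and t = 20%, exactly one item (f) is dropped, which reproduces the selective sample in the table.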


Accuracy of Selective Sampling


Overhead of Selective Sampling

[Figure: overhead of selective sampling on (a) datasets for frequent-itemset mining, (b) datasets for sequential-pattern mining, and (c) datasets for closed-sequential-pattern mining]


Experimental Setups

Two Linux clusters using up to 64 processors

Cluster A: 1 GHz Pentium III processor, 1 GB memory

Cluster B: 1.3 GHz Intel Itanium 2 processor, 2 GB memory

Implemented in C++ using MPI

Dataset generator from IBM

Datasets


Speedups with One-Level Task Partitioning

Parallel frequent-itemset mining

[Figure: speedup vs. number of processors (1 to 64, log scales) for Par-FP compared with the optimal speedup on mushroom, connect, pumsb, pumsb_star, T30I0.2D1K, T40I10D100K, and T50I5D500K]


Effectiveness of Selective Sampling

Multi-level task partitioning

[Figure: speedup vs. number of processors (1 to 64, log scales) for one-level and multi-level task partitioning compared with the optimal speedup on mushroom, connect, pumsb, pumsb_star, T30I0.2D1K, T40I10D100K, and T50I5D500K]


Speedups with One-Level Task Partitioning (Sequential Patterns)

Parallel sequential-pattern mining

[Figure: speedup vs. number of processors (1 to 64, log scales) for Par-Span compared with the optimal speedup on C10N0.1T8S8I8, C50N10T8S20I2.5, C100N5T2.5S10I1.25, C100N20T2.5S10I1.25, and C200N10T2.5S10I1.25]


Speedups with One-Level Task Partitioning (Closed Sequential Patterns)

Parallel closed-sequential-pattern mining

[Figure: speedup vs. number of processors (1 to 64, log scales) for Par-CSP compared with the optimal speedup on C100S50N10, C100S100N5, C200S25N9, and Gazelle]


Effectiveness of Selective Sampling

One-level task partitioning with 64 processors

The speedups are improved by more than 50% on average

[Figure: speedups with 64 processors, without sampling vs. with sampling]


Conclusions

A new RFID warehouse model that

allows efficient and flexible analysis of RFID data in multidimensional space

preserves the structure of the data

compresses data by exploiting bulky movements, concept hierarchies, and path collapsing

High-performance computing will benefit RFID data warehousing and data mining tremendously

Efficient and highly parallel algorithms can be developed for RFID data analysis

12/08/21 Data Mining: Concepts and Techniques 58

12/08/21 Data Mining: Concepts and Techniques 59

You might also like

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (890)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Outdoor Composting Guide 06339 FDocument9 pagesOutdoor Composting Guide 06339 FAdjgnf AANo ratings yet

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- Soil Nailing and Rock Anchors ExplainedDocument21 pagesSoil Nailing and Rock Anchors ExplainedMark Anthony Agnes AmoresNo ratings yet

- 91 SOC Interview Question BankDocument3 pages91 SOC Interview Question Bankeswar kumarNo ratings yet

- CASE FLOW AT REGIONAL ARBITRATIONDocument2 pagesCASE FLOW AT REGIONAL ARBITRATIONMichael Francis AyapanaNo ratings yet

- Cis285 Unit 7Document62 pagesCis285 Unit 7kirat5690No ratings yet

- Katie Todd Week 4 spd-320Document4 pagesKatie Todd Week 4 spd-320api-392254752No ratings yet

- Analytic Solver Platform For Education: Setting Up The Course CodeDocument2 pagesAnalytic Solver Platform For Education: Setting Up The Course CodeTrevor feignarddNo ratings yet

- تقرير سبيس فريم PDFDocument11 pagesتقرير سبيس فريم PDFAli AkeelNo ratings yet

- Christmasworld Trend Brochure 2024Document23 pagesChristmasworld Trend Brochure 2024Ольга ffNo ratings yet

- Ex 1-3 Without OutputDocument12 pagesEx 1-3 Without OutputKoushikNo ratings yet

- E85001-0646 - Intelligent Smoke DetectorDocument4 pagesE85001-0646 - Intelligent Smoke Detectorsamiao90No ratings yet

- Balance NettingDocument20 pagesBalance Nettingbaluanne100% (1)

- Tutorial 2 EOPDocument3 pagesTutorial 2 EOPammarNo ratings yet

- 2021 Edelman Trust Barometer Press ReleaseDocument3 pages2021 Edelman Trust Barometer Press ReleaseMuhammad IkhsanNo ratings yet

- 22 Caltex Philippines, Inc. vs. Commission On Audit, 208 SCRA 726, May 08, 1992Document36 pages22 Caltex Philippines, Inc. vs. Commission On Audit, 208 SCRA 726, May 08, 1992milkteaNo ratings yet

- NSTP 1: Pre-AssessmentDocument3 pagesNSTP 1: Pre-AssessmentMaureen FloresNo ratings yet

- Social Vulnerability Index Helps Emergency ManagementDocument24 pagesSocial Vulnerability Index Helps Emergency ManagementDeden IstiawanNo ratings yet

- Coca Cola Concept-1Document7 pagesCoca Cola Concept-1srinivas250No ratings yet

- The Little Book of Deep Learning: An Introduction to Neural Networks, Architectures, and ApplicationsDocument142 pagesThe Little Book of Deep Learning: An Introduction to Neural Networks, Architectures, and Applicationszardu layakNo ratings yet

- COA (Odoo Egypt)Document8 pagesCOA (Odoo Egypt)menams2010No ratings yet

- Railway Reservation System Er DiagramDocument4 pagesRailway Reservation System Er DiagramPenki Sarath67% (3)

- Getting Started With DAX Formulas in Power BI, Power Pivot, and SSASDocument19 pagesGetting Started With DAX Formulas in Power BI, Power Pivot, and SSASJohn WickNo ratings yet

- CCW Armored Composite OMNICABLEDocument2 pagesCCW Armored Composite OMNICABLELuis DGNo ratings yet

- Personal Selling ProcessDocument21 pagesPersonal Selling ProcessRuchika Singh MalyanNo ratings yet

- Hydraulic-Fracture Design: Optimization Under Uncertainty: Risk AnalysisDocument4 pagesHydraulic-Fracture Design: Optimization Under Uncertainty: Risk Analysisoppai.gaijinNo ratings yet

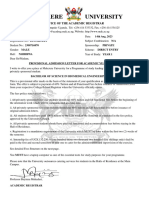

- Makerere University: Office of The Academic RegistrarDocument2 pagesMakerere University: Office of The Academic RegistrarOPETO ISAACNo ratings yet

- 110 TOP Single Phase Induction Motors - Electrical Engineering Multiple Choice Questions and Answers - MCQs Preparation For Engineering Competitive ExamsDocument42 pages110 TOP Single Phase Induction Motors - Electrical Engineering Multiple Choice Questions and Answers - MCQs Preparation For Engineering Competitive Examsvijay_marathe01No ratings yet

- This Study Resource Was: ExercisesDocument1 pageThis Study Resource Was: Exercisesىوسوكي صانتوسNo ratings yet

- Chapter 6 Performance Review and Appraisal - ReproDocument22 pagesChapter 6 Performance Review and Appraisal - ReproPrecious SanchezNo ratings yet

- Break-Even Analysis: Margin of SafetyDocument2 pagesBreak-Even Analysis: Margin of SafetyNiño Rey LopezNo ratings yet